REPUTATION MANAGEMENT SERVICE FOR PEER-TO-PEER

ENTERPRISE ARCHITECTURES

M. Amoretti, M. Bisi, M. C. Laghi, F. Zanichelli, G. Conte

Distributed Systems Group - Dip. di Ingegneria dell’Informazione

Università degli Studi di Parma

Keywords:

Service Oriented Architectures, Peer-to-Peer, Security, Reputation Management.

Abstract:

The high potential of P2P infrastructures for enterprise services and applications both on the intranet (e.g.

project workgroups) and on the Internet (e.g. B2B exchange) can be fully achieved provided that robust trust

and security management systems are made available.

This paper presents the reputation system we have devised for our P2P framework which supports secure role-

based peergroups and service interactions. Our system includes decentralized trust and security management

able to cope with several threats. In the paper the underlying analytical model is presented together with a

simulation-based evaluation of the robustness against malicious negative feedbacks.

1 INTRODUCTION

Ubiquitous access to networks is deeply changing the

ways enterprises organize and perform their business

both internally and externally. Intranets and the global

Internet allow for seamless and almost instantaneous

information and knowledge sharing within organiza-

tions thus enabling more efficient processes and activ-

ities and giving rise to novel forms of interaction and

supporting applications.

Peer-to-peer (P2P) technologies have gained

world-wide popularity due to the success of file-

sharing applications (and the reactions of several

copyright holders) and their decentralized nature ap-

pears promising also to the purposes and applications

of enterprises. P2P-based instant messaging and file-sharing can be effectively exploited on an intranet to support, for example, project workgroups, distributed offices and distribution chains for documents and

archives. Internet-enabled inter-firm collaboration

can benefit from a P2P approach as well. Business-to-

business exchanges are becoming increasingly impor-

tant and many B2B communities organize themselves

to be more competitive in specialized industry sectors

and to increase the efficiency of their procurement

and supply chains. By leveraging P2P technologies, the common tasks of searching for new business partners and exchanging transaction information (e.g. quotations) can be improved in terms of instant information, control over shared data (maintained at each P2P node) and reduced infrastructure costs.

The vision of unmediated, instantaneous trading

as well as more realistic P2P-based B2B communi-

ties can be approached only if enterprise-level solu-

tions are made available to cope with the fundamental

trust and security issues. While identity trust, namely

the belief that an entity is what it claims to be, can

be assessed by means of an authentication scheme

such as X.509 digital identity certificates, provision

trust, that is the relying party’s trust in a service or

resource provider, appears more critical as users re-

quire protection from malicious or unreliable service

providers. Unlike B2B exchanges based on central-

ized, third party UDDI directories which offer trust-

worthy data of potential trading partners (i.e. service

providers), P2P decentralized interaction lends itself

to trust and reputation systems mainly based on first

hand experience and second-hand referrals. This in-

formation can be combined by a peer into an overall

rating or reputation value for a service provider and

should influence further interaction with it.

In this paper we present the reputation system we

have devised for our P2P framework which supports

secure role-based peergroups and service interactions.

Our system includes decentralized trust and security

management able to cope with several threats, starting from impersonation, which refers to the threat

caused by a malicious peer posing as another in order


Amoretti M., Bisi M., C. Laghi M., Zanichelli F. and Conte G. (2006).

REPUTATION MANAGEMENT SERVICE FOR PEER-TO-PEER ENTERPRISE ARCHITECTURES.

In Proceedings of the International Conference on e-Business, pages 265-272

DOI: 10.5220/0001427902650272

Copyright © SciTePress

to misuse that peer’s privileges and reputation. Digital

signatures and message authentication are typical solutions for this kind of attack. As malicious peers can engage in fraudulent actions, such as advertising false resources or services and not fulfilling commitments, a consistent reputation management system has been introduced in our P2P framework, which also prevents trust misrepresentation attempts. In a peer-to-peer

system, the most difficult threat to discover and neu-

tralize is collusion, which refers to a group of mali-

cious peers working in concert to actively subvert the

system. To face this danger, the default policy pro-

vided by our security framework is role-based group

membership based on secure credentials (SC policy).

The paper is organized as follows. Section 2 outlines the issues of reputation management systems and the choices available for centralized and decentralized implementation. The analytical model underlying our reputation management is described in section 3. A simulation scenario is then presented, first describing a four-role configuration example (section 4) and then discussing the obtained results (section 5). Section 6 reports on some relevant work in the area of reputation systems for P2P networks. Finally, section 7 closes the paper with a few concluding remarks and an indication of further work.

2 REPUTATION MANAGEMENT

IN ROLE-BASED PEERGROUPS

In our view, a role-based peergroup can achieve stability only if each participant (which is supposed to be authenticated and authorized) bases its actions on previous experience and/or recommendations, which together define the reputation of the other participants. Reputation and trust are orthogonal concepts, which require, in a peer-to-peer context, complex management mechanisms such as node identification and the exchange of digitally signed certificates (Ye et al., 2004).

Our model considers both peer reputation and ser-

vice reputation. Peer reputation is important for the

reputation manager, which could store service evalu-

ations weighted by the advisor’s reputation. Service

reputation is important because a peer which has to choose between two apparently identical services selects the one with the better reputation. A peer can provide more

than one service, each of them with its own reputation

which contributes to the overall reputation of the peer.

Peer reputation values are expressed by (+, −) pairs, e.g. (12, 4), which means that the peer's services have globally received 12 positive and 4 negative feedbacks. Peer reputations can have a non-zero initial value, fixed by a trusted third party. After each remote service interaction, consumers evaluate the service, assigning +1 or −1 to the provider.

The reputation manager stores the global reputation

of each peer, but also the reputation of each service a

peer provides.

When a peer enters the group as a newbie, or is promoted to a higher rank, it receives an initial reputation value; based on the peer's behaviour, the reputation value changes with time, and represents the trustworthiness of the peer on the basis of its transactions with other nodes. Each peer, at the end of an interaction with another member of the group, can provide its feedback about each consumed service; the feedback is used to update the reputation of the provider peer. The reputation manager should weight the received feedback by the reputation value of the sender peer.
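The bookkeeping described above can be sketched as follows. This is a minimal illustration, not the SP2A implementation: the class and method names (`ReputationTable`, `register`, `add_feedback`) are hypothetical, and the weighting of feedbacks by the sender's reputation is left out because the text does not fix a weighting rule.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Counts:
    """A (+, -) pair of feedback counters."""
    positive: int = 0
    negative: int = 0


@dataclass
class PeerRecord:
    """Global counters of a peer plus one (+, -) pair per provided service."""
    overall: Counts = field(default_factory=Counts)
    services: dict = field(default_factory=lambda: defaultdict(Counts))


class ReputationTable:
    """Hypothetical in-memory reputation manager (names are assumptions)."""

    def __init__(self):
        self._peers = {}

    def register(self, peer_id, positive=0, negative=0):
        # Initial value assigned when the peer joins or changes rank.
        self._peers[peer_id] = PeerRecord(overall=Counts(positive, negative))

    def add_feedback(self, provider_id, service_id, vote):
        # A consumer votes +1 or -1 on a service it has just used.
        if vote not in (1, -1):
            raise ValueError("vote must be +1 or -1")
        record = self._peers[provider_id]
        for counts in (record.overall, record.services[service_id]):
            if vote > 0:
                counts.positive += 1
            else:
                counts.negative += 1

    def peer_reputation(self, peer_id):
        c = self._peers[peer_id].overall
        return (c.positive, c.negative)

    def service_reputation(self, peer_id, service_id):
        c = self._peers[peer_id].services[service_id]
        return (c.positive, c.negative)
```

Each vote updates both the service counters and the peer's global counters, so the peer-level pair is always the initial value plus the sum of its service-level pairs.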

Two problems arise: where to store the reputation

information, and how to guarantee its integrity. Sev-

eral solutions can be adopted:

1. a stable and recognized peer stores and manages

the reputation information of all group members

(centralized solution);

2. each peer stores its own experience with other peers, and when others ask for reputation information about a particular peer, it answers on the basis of its stored information (local solution);

3. the reputation storage is partitioned into several

small parts, which are stored in all peers; that is,

every peer equally manages some part of the whole

reputation information (global solution);

4. only stable, recognized and highly-reputed peers

are reputation collectors (mediated solution).

Not all these solutions are equally scalable, efficient

and robust.

Solution 1 is easy to implement as a Centralized

Reputation Management Service (CRMS), but it does

not scale, i.e. it could work only for small peergroups.

Solutions 2, 3 and 4 require a Distributed Reputation

Management Service (DRMS).

The local solution is inefficient and lacks robustness. If a peer wants a more objective reputation value for another peer, it must ask many peers, which generates a lot of messages in the peer-to-peer network. Moreover, if the reputation information is concentrated in a few very active customer peers, the reputation system breaks down whenever these are offline.

The global solution is very attractive, in particular

if the reputation management system is implemented

as a Distributed Hash Table (DHT). In that case, the

peer which is responsible for a specific reputation in-

formation is determined with a hash function within

O(1) time, and its location is found within O(log N)

time.
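As an illustration of the key-to-node mapping, the sketch below hashes a peer identifier once and picks the first node that follows the key on a ring. It is an assumption-laden toy: `ReputationRing` and `key_of` are hypothetical names, and the binary search over a local sorted list only stands in for the O(log N) routing that a real DHT performs across nodes.

```python
import bisect
import hashlib


def key_of(name: str) -> int:
    """Map an identifier to a point on the ring with one hash evaluation."""
    return int.from_bytes(hashlib.sha1(name.encode("utf-8")).digest(), "big")


class ReputationRing:
    """Hypothetical ring of storage nodes, ordered by hashed identifier."""

    def __init__(self, node_ids):
        if not node_ids:
            raise ValueError("at least one node is required")
        self._ring = sorted((key_of(n), n) for n in node_ids)
        self._keys = [k for k, _ in self._ring]

    def responsible_node(self, peer_id: str) -> str:
        # First node at or after the key, wrapping around at the end.
        i = bisect.bisect_left(self._keys, key_of(peer_id))
        return self._ring[i % len(self._ring)][1]
```

Every peer that evaluates `responsible_node("alice")` on the same set of nodes obtains the same answer, which is what lets consumers locate the reputation record without a central index.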

The mediated solution should be good for unstruc-

tured networks, in particular if they have few highly

connected and stable nodes, e.g. scale-free topologies (Barabási and Albert, 1999). In our role-based

ICE-B 2006 - INTERNATIONAL CONFERENCE ON E-BUSINESS


scheme, admin peers are the candidates for the real-

ization of the DRMS.

3 ANALYTICAL MODEL

In this section we illustrate an analytical model describing the fundamental parameters involved in the evolution of the reputation, for each role that can be taken in a peergroup based on our SC policy.

The overall reputation value is $Rep = n_{+} - n_{-}$, i.e. the difference between the number of positive feedbacks and the number of negative feedbacks. When a peer joins the secure peergroup, and each time it is promoted or degraded, it receives an initial reputation value which is stored by the reputation management service. The temporal evolution of the reputation value depends on the following parameters:

• Total votes $T = n_{+} + n_{-}$, which represents the sum of all received feedbacks;

• Good ratio $R = \frac{n_{+}}{n_{+} + n_{-}}$, which is the number of positive feedbacks relative to $T$; $R$ is a fundamental parameter of the analytical model, because its instantaneous value defines the dependability degree of the peer.

The domains of $R$ and $T$ follow directly from their definitions:

$$0 \le R \le 1, \qquad T \ge 0$$

Depending on the values of $R$ and $T$, we can consider four different conditions for each role a peer can take, which are listed below.

• $T \ge T_{th}$, where $T_{th} \ge 0$ is the confidence threshold, evaluated on all received feedbacks. The reason for considering such a threshold is that the dependability of the reputation value of a peer depends on the total number of performed transactions (the more there are, the more dependable the value is).

• $T < T_{th}$ means that the peer has recently joined the group, thus the reputation value must be weighted to account for its potentially lower dependability.

• $R \ge R_{th}$, where $0 \le R_{th} \le 1$ is the trust threshold. In this case, the peer can be trusted.

• $R < R_{th}$, on the other hand, means that the peer cannot be trusted and should be degraded.

From the definitions of $T$ and $R$, we obtain

$$Rep = (2R - 1)\,T \qquad (1)$$

from which we can derive the role preservation condition

$$Rep \ge (2R_{th} - 1)\,T \qquad (2)$$
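Equations 1 and 2 can be checked numerically with a few helper functions. The sketch below is only illustrative; the function names are hypothetical and the counters (12, 4) are the example pair used in section 2.

```python
def total_votes(n_pos, n_neg):
    """T = n+ + n-"""
    return n_pos + n_neg


def good_ratio(n_pos, n_neg):
    """R = n+ / (n+ + n-); undefined when no feedback has been received."""
    t = total_votes(n_pos, n_neg)
    if t == 0:
        raise ValueError("good ratio is undefined for T = 0")
    return n_pos / t


def reputation(n_pos, n_neg):
    """Rep = n+ - n-"""
    return n_pos - n_neg


def preserves_role(n_pos, n_neg, r_th):
    """Role preservation condition (eq. 2): Rep >= (2 R_th - 1) T."""
    return reputation(n_pos, n_neg) >= (2 * r_th - 1) * total_votes(n_pos, n_neg)


# Eq. 1 on the example pair (12, 4): (2 * 0.75 - 1) * 16 equals Rep = 8.
r, t = good_ratio(12, 4), total_votes(12, 4)
assert abs((2 * r - 1) * t - reputation(12, 4)) < 1e-9
```

Since $Rep = (2R - 1)T$, the condition of eq. 2 is equivalent to $R \ge R_{th}$ whenever $T > 0$, so either form can be used by the reputation manager.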

Moreover, by retrieving T and R values of a peer, it

is possible to compute the probability that next feed-

back will be positive (P

g ood

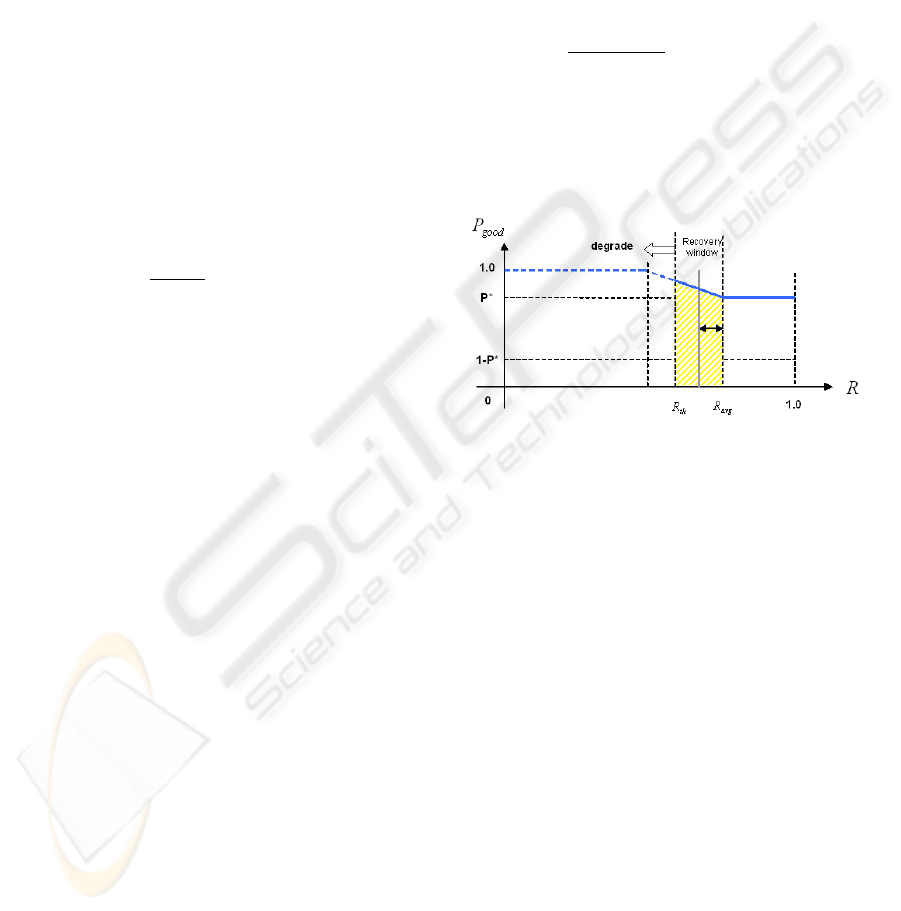

) for that peer. Figure 1 il-

lustrates P

g ood

versus the good ratio value R. We can

observe that if R is greater or equal than the average

value R

av g

, which is an assigned parameter, P

g ood

’s

value is constant (P

∗

). If the good ratio is included

between R

av g

and R

th

, a mechanism named recov-

ery window allows the peer to increase its good ratio.

In details, a probability bonus P

b

is conceded, defined

by:

P

b

=

R

av g

− R

R

av g

− R

th

[P

g ood

(R

th

) − P

∗

]

The value of P

g ood

(R

th

) is an assigned paramater.
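The piecewise behaviour of $P_{good}$ can be sketched as below. The function names are hypothetical, and the numbers in the usage lines ($P^{*} = 0.8$, $P_{good}(R_{th}) = 0.9$) are made-up values chosen only to exercise the formula; the text leaves both as assigned parameters. Raising an error below $R_{th}$ is also our choice, since in that region the peer is degraded and the model does not define a bonus.

```python
def probability_bonus(r, r_avg, r_th, p_star, p_good_at_th):
    """Bonus P_b granted inside the recovery window R_th <= R < R_avg."""
    if not r_th < r_avg:
        raise ValueError("the recovery window requires R_th < R_avg")
    if r < r_th:
        raise ValueError("below R_th the peer is degraded; no bonus is defined")
    if r >= r_avg:
        return 0.0  # outside the window, P_good stays at P*
    return (r_avg - r) / (r_avg - r_th) * (p_good_at_th - p_star)


def p_good(r, r_avg, r_th, p_star, p_good_at_th):
    """Probability that the next feedback is positive, given the good ratio."""
    return p_star + probability_bonus(r, r_avg, r_th, p_star, p_good_at_th)


# Hypothetical parameters: the bonus grows linearly as R drops towards R_th.
for r in (0.9, 0.8, 0.7, 0.6):
    print(r, round(p_good(r, 0.8, 0.6, 0.8, 0.9), 3))
```

The bonus vanishes at $R = R_{avg}$ and reaches $P_{good}(R_{th}) - P^{*}$ at $R = R_{th}$, so the curve is continuous at both ends of the window.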

This mechanism is general, but the recovery window size should be different for each role, shrinking as the importance of the role decreases (in section 4 it goes from 0.2 for an admin down to 0.08 for a newbie).

Figure 1: Relation between the probability for next feed-

back to be positive and the good ratio.

It is possible to compute, from the previous parameters, the maximum rate $\max\{f_{bad}\}$ at which one or more malicious peers (possibly cooperating) can provide negative feedbacks without affecting the peer's role. Above this rate, the peer is degraded for insufficient good ratio, according to the rules illustrated above.

We first consider the case of a null recovery window, i.e. $P_{good} = P^{*}$ for all $R \ge R_{th}$. In the time unit $\Delta t$, the average number of received feedbacks is defined as

$$\Delta T = \Delta n_{+} + \Delta n_{-} + \Delta n_{bad}$$

where

$$\Delta n_{+} = P^{*} \Delta n$$

and

$$\Delta n_{-} = (1 - P^{*}) \Delta n$$

are justified feedbacks ($\Delta n$ being their total per time unit), while $\Delta n_{bad}$ represents the average number of unjustified negative feedbacks, sent by malicious peers. The reputation changes by

$$\Delta Rep = \Delta n_{+} - \Delta n_{-} - \Delta n_{bad} \qquad (3)$$


Suppose the peer is at the trust threshold, thus (from eq. 2)

$$Rep = (2R_{th} - 1)\,T$$

The next $\Delta t$ provides

$$\Delta Rep = (2R_{th} - 1)\,\Delta T = (2R_{th} - 1)(\Delta n_{+} + \Delta n_{-} + \Delta n_{bad})$$

With the latter and with eq. 3, we can obtain the maximum value of $\Delta n_{bad}$ above which the peer is degraded, and then

$$\max\{f_{bad}\} = \frac{\Delta n_{bad}}{\Delta T} \qquad (4)$$
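The elimination step can be written out explicitly. The lines below are a worked rearrangement of eqs. 2-4 for the null recovery window, assuming only that the justified feedbacks per time unit sum to $\Delta n = \Delta n_{+} + \Delta n_{-}$; the closed form in the last line is a consequence of those equations rather than a formula stated in this form in the model.

```latex
\begin{align*}
\Delta n_{+} - \Delta n_{-} - \Delta n_{bad}
  &= (2R_{th} - 1)(\Delta n + \Delta n_{bad}) \\
(2P^{*} - 1)\,\Delta n - \Delta n_{bad}
  &= (2R_{th} - 1)\,\Delta n + (2R_{th} - 1)\,\Delta n_{bad} \\
2\,(P^{*} - R_{th})\,\Delta n
  &= 2R_{th}\,\Delta n_{bad} \\
\Delta n_{bad}
  &= \frac{P^{*} - R_{th}}{R_{th}}\,\Delta n \\
\max\{f_{bad}\}
  &= \frac{\Delta n_{bad}}{\Delta n + \Delta n_{bad}}
   = 1 - \frac{R_{th}}{P^{*}}
\end{align*}
```

For instance, $P^{*} = 0.8$ and $R_{th} = 0.6$ give $\max\{f_{bad}\} = 25\%$, independently of $\Delta n$.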

If there is a recovery window, the average number of positive feedbacks per time unit is

$$\Delta n_{+} = (P^{*} + P_{b}) \Delta n$$

thus the maximum value of $\Delta n_{bad}$ above which the peer is degraded is not constant but depends on $R$.

In the following section, we show how this model

can be applied to a real system.

4 FOUR-ROLE CONFIGURATION

EXAMPLE

In this section we consider a four-role system, and for

each role we set the initial values for the parameters

we illustrated in section 3. In detail, the list of roles is:

• admin - the peer is highly reputed and trusted by the group founder, or it is the group founder itself; the actions it is allowed to perform are: service sharing/discovery, group monitoring, voting for changing member ranks, and storing reputation information (if the mediated solution is adopted);

• newbie - the peer is a new member; it can only search for an admin peer, to ask for a promotion;

• searcher - the peer is allowed to search for ser-

vices and to interact with them;

• publisher - the peer can search for services but also

publish its own services in the peergroup.

Each admin peer has the following initial configuration:

$$\begin{aligned}
n_{+,init} &= 50 \\
n_{-,init} &= 10 \\
T_{init} &= n_{+,init} + n_{-,init} = 60 \\
R_{init} &= \frac{n_{+,init}}{n_{+,init} + n_{-,init}} = 0.83 \\
Rep_{init} &= n_{+,init} - n_{-,init} = 40 \\
R_{avg} &= 0.8 \\
R_{th} &= 0.6
\end{aligned}$$

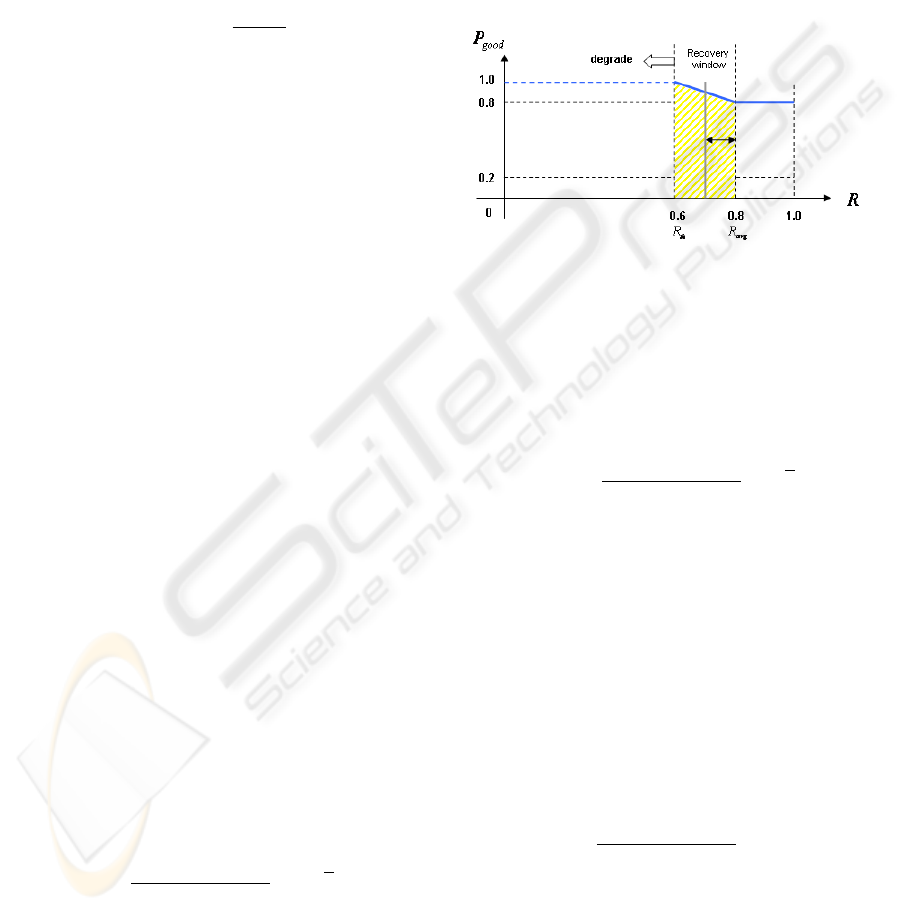

Figure 2 illustrates the probability $P_{good}$ for the next feedback to be positive, versus the good ratio value $R$ of an admin peer. Assuming that $\Delta n = 12$, we have $\Delta n_{+} = 9.6$ and $\Delta n_{-} = 2.4$. Without recovery window, the maximum value of malicious negative feedbacks per time unit, above which the peer is degraded, is $\Delta n_{bad} = 4$. Thus, the maximum malicious feedback rate which can be tolerated is $\max\{f_{bad}\} = 25\%$. If there is a recovery window, $\Delta n_{bad}$ depends on $R$. In particular, considering the worst case $R = R_{th} = 0.6$, we obtain $\max\{f_{bad}\} = 40\%$.

Figure 2: Relation between the probability for next feed-

back to be positive and the good ratio.

Each publisher peer has the following initial configuration:

$$\begin{aligned}
n_{+,init} &= 35 \\
n_{-,init} &= 10 \\
T_{init} &= n_{+,init} + n_{-,init} = 45 \\
R_{init} &= \frac{n_{+,init}}{n_{+,init} + n_{-,init}} = 0.78 \\
Rep_{init} &= n_{+,init} - n_{-,init} = 25 \\
R_{avg} &= 0.75 \\
R_{th} &= 0.6
\end{aligned}$$

Figure 3 illustrates that, compared with the previous case, the recovery window for a publisher is smaller: 0.15 versus 0.2. With this window, the maximum tolerable rate of malicious negative feedbacks is $\max\{f_{bad}\} = 33.3\%$. Without the recovery window, it would be 20%.

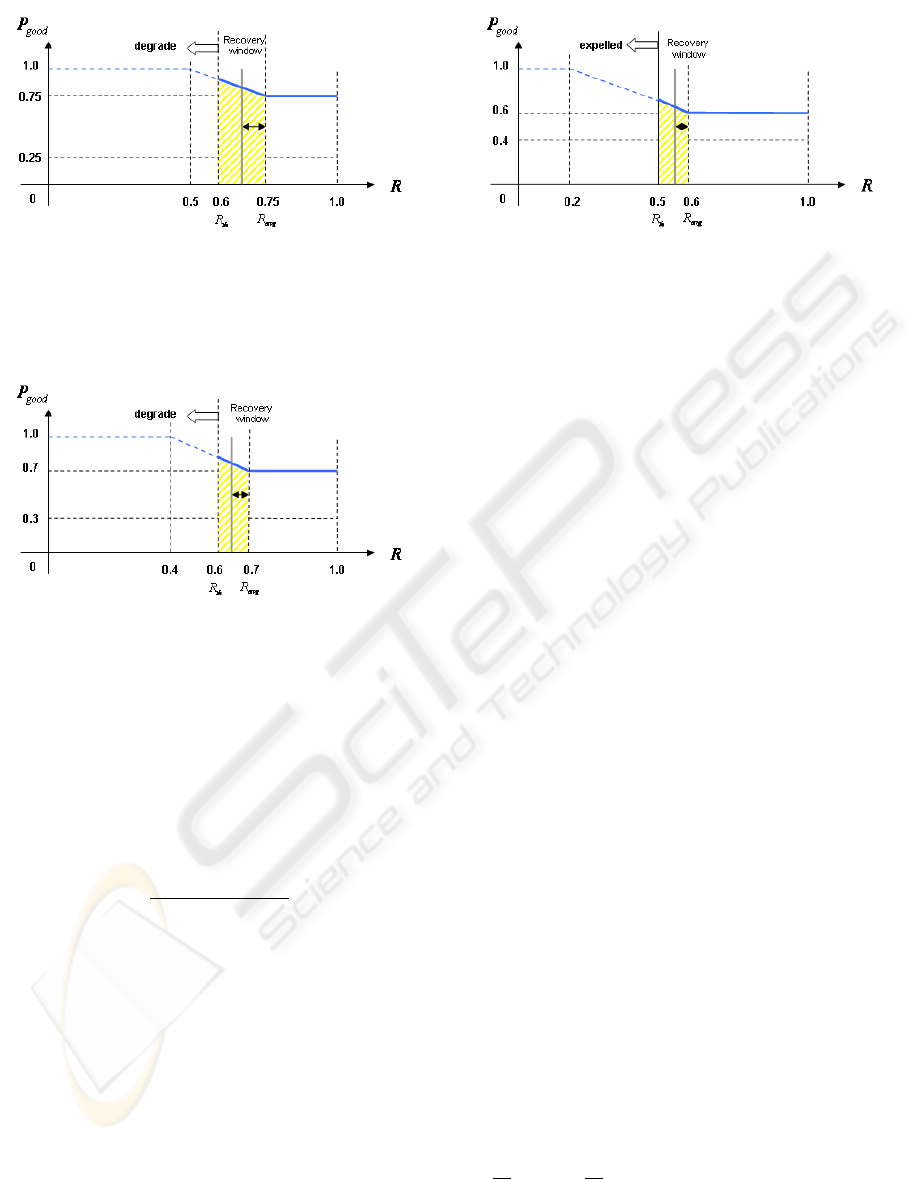

Each searcher peer has the following initial configuration:

$$\begin{aligned}
n_{+,init} &= 25 \\
n_{-,init} &= 10 \\
T_{init} &= n_{+,init} + n_{-,init} = 35 \\
R_{init} &= \frac{n_{+,init}}{n_{+,init} + n_{-,init}} = 0.714 \\
Rep_{init} &= n_{+,init} - n_{-,init} = 15 \\
R_{avg} &= 0.7 \\
R_{th} &= 0.6
\end{aligned}$$

For a searcher, whose recovery window is 0.1 wide (see figure 4), the maximum tolerable rate of malicious negative feedbacks is $\max\{f_{bad}\} = 25\%$. It would be 14.3% without the recovery window.


Figure 3: Relation between the probability for next feed-

back to be positive and the good ratio, in the case of a pub-

lisher peer.

Figure 4: Relation between the probability for next feed-

back to be positive and the good ratio, in the case of a

searcher peer.

Finally, each newbie peer has the following initial configuration:

$$\begin{aligned}
n_{+,init} &= 15 \\
n_{-,init} &= 10 \\
T_{init} &= n_{+,init} + n_{-,init} = 25 \\
R_{init} &= \frac{n_{+,init}}{n_{+,init} + n_{-,init}} = 0.6 \\
Rep_{init} &= n_{+,init} - n_{-,init} = 5 \\
R_{avg} &= 0.6 \\
R_{th} &= 0.52
\end{aligned}$$

Thus a newbie, which is characterized by the smallest recovery window (0.08), has $\max\{f_{bad}\} = 23\%$, which would be 13% without the recovery window.
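The figures quoted above for the case without recovery window can be reproduced from eqs. 2-4 with a few lines of code. This is a sketch under one stated assumption: that $P^{*}$ equals $R_{avg}$ for every role, which is what the admin numbers imply ($\Delta n_{+} = 9.6$ out of $\Delta n = 12$) but is not stated for the other roles. The function names and the `ROLES` table are ours.

```python
# (R_avg, R_th) per role, taken from the configurations of this section.
ROLES = {
    "admin": (0.8, 0.6),
    "publisher": (0.75, 0.6),
    "searcher": (0.7, 0.6),
    "newbie": (0.6, 0.52),
}


def max_bad_feedbacks(delta_n, p_good, r_th):
    """Largest dn_bad per time unit that still satisfies
    dn+ - dn- - dn_bad >= (2 R_th - 1)(dn + dn_bad),
    with dn+ = p_good * dn and dn- = (1 - p_good) * dn."""
    return delta_n * (p_good - r_th) / r_th


def max_bad_rate(delta_n, p_good, r_th):
    """max{f_bad} = dn_bad / dT (eq. 4), with dT = dn + dn_bad."""
    n_bad = max_bad_feedbacks(delta_n, p_good, r_th)
    return n_bad / (delta_n + n_bad)


for role, (r_avg, r_th) in ROLES.items():
    # Assumption: P* = R_avg, i.e. a righteous peer keeps its average ratio.
    rate = max_bad_rate(12, r_avg, r_th)
    print(f"{role}: {100 * rate:.1f}%")
```

With the recovery window the same functions apply if `p_good` is replaced by $P_{good}(R_{th})$, provided the justified negative feedbacks shrink accordingly; that reading is ours and the values of $P_{good}(R_{th})$ are only shown in the figures.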

5 SIMULATION RESULTS

By means of SP2A (Amoretti et al., 2005), our middleware for the development and deployment of service-oriented peer-to-peer architectures, we realized a centralized reputation management service, and we simulated the interaction of that service with a hypothetical network of peers which provide positive and negative feedbacks. We emphasize that our purpose was not to investigate reputation retrieval and maintenance performance, which obviously differs between centralized and distributed services. The deployed testbed allowed us to verify the correctness of the proposed analytical model, and to tune the parameter values for the four-role secure group configuration.

Figure 5: Relation between the probability for next feedback to be positive and the good ratio, in the case of a newbie peer.

The reputation management service maintains a reputation table, and randomly assigns feedbacks to peers, with $\Delta n = 12$ as assumed in section 4. All simulations started with each peer having $R = R_{avg}$, and lasted the time necessary to observe significant results (we set $\Delta t = 1$ minute). We tracked the evolution of the reputation value $Rep$ for each peer, and we computed the average behaviour for each role. We first simulated a peergroup of righteous peers, in which positive and negative feedbacks per unit time are distributed according to $R_{avg}$. Then we performed several simulations of a system in which some peers provided unjustified negative feedbacks at an increasing rate. For each role, we found the maximum tolerable rate of malicious negative feedbacks, above which the target peer is degraded, or banned from the peergroup if its role is newbie.
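The experiment can be approximated by a deterministic, expected-value sketch such as the one below. It is not the SP2A testbed: feedbacks are added as averages rather than drawn at random, $P^{*}$ is assumed equal to $R_{avg}$, the starting counters (48, 12) are hypothetical values chosen so that $R = R_{avg} = 0.8$, and `simulate` is our own name.

```python
def simulate(n_pos, n_neg, r_avg, r_th, f_bad,
             delta_n=12.0, steps=200, p_good_at_th=None):
    """Return the first time step at which R < R_th (the peer is degraded),
    or None if the role is preserved for all simulated steps."""
    p_star = r_avg  # assumption: a righteous peer keeps its average ratio
    if p_good_at_th is None:
        p_good_at_th = p_star  # null recovery window
    # f_bad = n_bad / (delta_n + n_bad)  =>  n_bad = delta_n * f_bad / (1 - f_bad)
    n_bad = delta_n * f_bad / (1.0 - f_bad)
    for step in range(1, steps + 1):
        r = n_pos / (n_pos + n_neg)
        if r >= r_avg:
            p = p_star
        else:
            # Recovery window bonus, linear between R_avg and R_th.
            p = p_star + (r_avg - r) / (r_avg - r_th) * (p_good_at_th - p_star)
        n_pos += p * delta_n
        n_neg += (1.0 - p) * delta_n + n_bad
        if n_pos / (n_pos + n_neg) < r_th:
            return step
    return None


# Admin-like peer without recovery window: 20% is tolerated, 30% is not.
print(simulate(48, 12, 0.8, 0.6, f_bad=0.20))
print(simulate(48, 12, 0.8, 0.6, f_bad=0.30))
```

In this averaged setting the good ratio drifts towards $P^{*}(1 - f_{bad})$ when the window is null, so the peer survives exactly when that limit stays at or above $R_{th}$, in agreement with the 25% bound obtained analytically for the admin role.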

In general, to know whether the role preservation condition $Rep \ge (2R_{th} - 1)T$ will be fulfilled, in a stable condition with fixed $f_{bad}$ and $R_{avg}$, we must compare the average derivative of the current reputation curve with the average derivative of the minimum reputation curve $Rep(R_{th})$ (using, for example, the least squares method). There are two possible situations:

• $\frac{d}{dt} Rep \ge \frac{d}{dt} Rep_{th}$: the curves diverge, i.e. the reputation of the peer increases more quickly than the minimum reputation, under which the peer is degraded;


• $\frac{d}{dt} Rep < \frac{d}{dt} Rep_{th}$: the curves converge, i.e. the peer will be degraded in a finite time.

Also note that both curves depend on $f_{bad}$, because $\Delta T = \Delta n_{+} + \Delta n_{-} + \Delta n_{bad}$.
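The comparison of average derivatives can be sketched with an ordinary least squares fit of a straight line to each sampled curve. The helper names are hypothetical and the sample series in the usage lines are invented for illustration; they are not measurements from our simulations.

```python
def least_squares_slope(times, values):
    """Slope of the least squares line through the points (t_i, v_i)."""
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need two or more samples and equal-length series")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("all time samples are identical")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    return sxy / sxx


def curves_diverge(times, rep, rep_th):
    """True when d/dt Rep >= d/dt Rep_th on average, i.e. the role is kept."""
    return least_squares_slope(times, rep) >= least_squares_slope(times, rep_th)


# Invented samples: measured reputation versus the minimum reputation curve.
t = [0, 1, 2, 3]
rep = [36, 43, 51, 58]
rep_th = [12.0, 14.4, 16.8, 19.2]
print(curves_diverge(t, rep, rep_th))
```

Fitting a line smooths out the fluctuations of a randomized run, so the sign of the slope difference is a more stable indicator than the difference between two consecutive samples.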

Starting from $R = R_{avg}$, the good ratio decreases if the share of positive feedbacks received per time unit falls below the current good ratio. In particular, this can happen if $f_{bad} > 0$. In our simulations, for each role we set a recovery window (according to the parameters illustrated in section 4), which comes into play when $R < R_{avg}$, and contributes to keeping the good ratio above $R_{th}$, i.e. $\frac{d}{dt} Rep \ge \frac{d}{dt} Rep_{th}$.

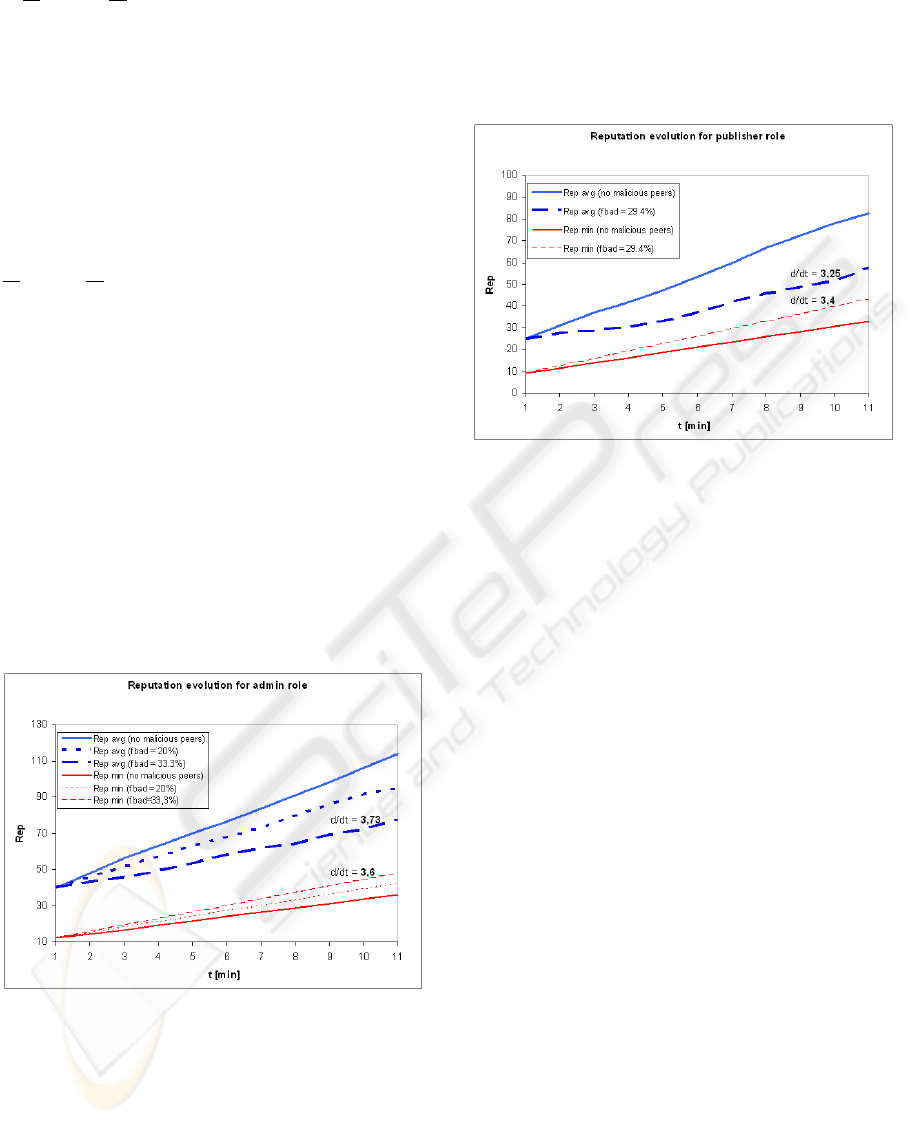

Figure 6 illustrates the average evolution of an admin peer's reputation over the simulation time interval. If no malicious peers provide unjustified negative feedbacks, the measured reputation of the target peer is represented by the thick solid curve. Comparing this curve with the graph of the reputation which we obtain if $R = R_{th}$ (the thin solid curve in the figure), we can observe that they diverge, thus we expect that the peer will not be degraded as long as $f_{bad} = 0$. In the same figure, dotted curves refer to the case of $f_{bad} = 20\%$; they still diverge. Finally, dashed curves show the limit beyond which the role preservation condition is not fulfilled, i.e. $f_{bad} \simeq 35\%$. This result is compatible with the analytical model, for which $\max\{f_{bad}\}$ is 40% (in the worst case $R = R_{th}$).

Figure 6: Average and minimum reputation dynamics, for an admin peer, for different rates of malicious negative feedbacks.

The most interesting simulation results for the publisher role are illustrated in figure 7, which compares the case of no malicious peers (continuous lines) with the case of unjustified negative feedbacks at a rate $f_{bad} = 29.4\%$ (dashed lines). We can observe that, in the latter case, the derivatives show that the curves converge. We measured $\max\{f_{bad}\} = 29\%$, above which the publisher is degraded in a finite time. This result is also compatible with the analytical model, for which $\max\{f_{bad}\}$ is 33.3% (in the worst case $R = R_{th}$).

Figure 7: Average and minimum reputation dynamics, for a publisher peer, for different rates of malicious negative feedbacks.

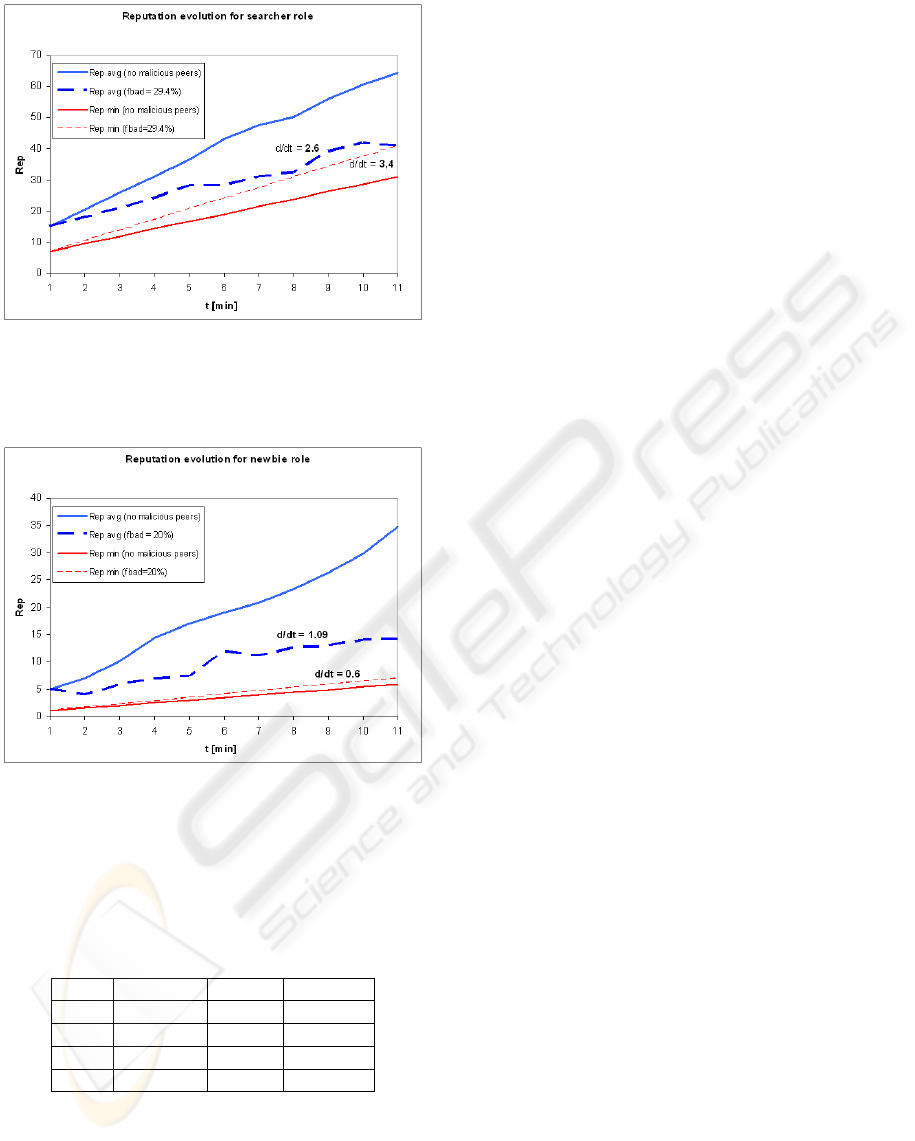

Figures 8 and 9 illustrate, respectively, simulation results for the searcher and the newbie roles. We measured a maximum malicious feedback rate $\max\{f_{bad}\} = 25\%$ for a searcher peer. Figure 8 illustrates what happens when this rate is exceeded, i.e. the reputation curves (average and minimum) converge. For a newbie peer, the measured maximum rate of unjustified negative feedbacks is $\max\{f_{bad}\} = 21\%$. Figure 9 illustrates a less dangerous situation. Both simulations gave satisfactory results, which respect the numerical constraints obtained with the analytical model.

All results are summarized in table 1. The first and second columns report the analytical results, respectively for a reputation management service without and with recovery window. The third column reports the simulation results, which refer to a reputation management service with recovery window and a target peer with righteous behaviour, i.e. a peer which would maintain the initially assigned good ratio $R_{avg}$ in the absence of malicious negative feedbacks.

6 RELATED WORK

There have been several studies about managing rep-

utation in P2P networks, most of them related to con-

tent sharing, which has been the killer application for

these architectures. A reputation management system

in DHT-based structured P2P networks is proposed


Figure 8: Average and minimum reputation dynamics, for a searcher peer, for different rates of malicious negative feedbacks.

Figure 9: Average and minimum reputation dynamics, for a newbie peer, for different rates of malicious negative feedbacks.

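Table 1: Maximum tolerable malicious feedback rate $\max\{f_{bad}\}$: analytical results without and with recovery window, and simulated results.

| Role | Theory, no recovery window | Theory, with recovery window | Simulation, with recovery window |
|---|---|---|---|
| Admin (A) | 25% | 40% | 35% |
| Publisher (P) | 20% | 33.3% | 28% |
| Searcher (S) | 14.3% | 25% | 25% |
| Newbie (N) | 13% | 23% | 21% |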

in (Lee et al., 2005); this model uses file reputation information as well as peer reputation information, and the system uses a global storage for reputation information, which remains available when the evaluator is not online. The reputation information consists, as in our model, of two values representing the number of positive and negative feedbacks.

In (Mekouar et al., 2004b) a reputation manage-

ment system for partially-decentralized P2P systems

is described, in which the reputation information is

managed by supernodes. The authors assume that the

supernodes are selected from a set of trusted peers,

and they share a secret key used to digitally sign the

reputation data. Good reputation is obtained by hav-

ing consistent good behaviour through several trans-

actions. The proposed scheme is based on four values

associated to each peer and stored at the supernode

level; two of them are used to provide an idea about

the satisfaction of users, and the others express the

amount of uploads provided by the peer. The reputation information is updated according to the upload and download transactions of the peer. In a subsequent work (Mekouar et al., 2004a) the same authors

propose an algorithm to detect malicious peers which

are sending inauthentic files or are lying in their feed-

backs. They distinguish righteous peers from those

which share inauthentic files and provide false feedbacks about other peers. The model introduces the concept of suspicious transaction, that is a transaction whose appreciation depends on the reputation of the sender, and the concept of credibility behaviour, as an indicator of the lying behaviour of peers. These

schemes are able to detect malicious peers and isolate

them from the system, but do not consider the tolera-

ble rate of malicious negative feedbacks, and suppose

that supernodes are always trustworthy.

A distributed method to compute global trust val-

ues, based on power iteration, is illustrated in (Kam-

var et al., 2003). The reputation system aggregates lo-

cal trust values of all users, by means of an approach

based on transitive trust: a peer will have a high opin-

ion of those peers which have provided authentic files

and it is likely to trust the opinions of those peers,

since peers which are honest about the files they pro-

vide are also likely to be honest in reporting their lo-

cal trust values. The idea of transitive trust leads to

a system where global trust values correspond to the

left principal eigenvector of a matrix of normalized

local trust values. All peers in the network cooperate

to compute and store the global trust vector, taking

into consideration the system history with each single

peer. The scheme is reactive, i.e. it requires reputa-

tions to be computed on-demand, through the coop-

eration of a large number of peers. This introduces

additional latency and requires a lot of time to collect

statistics and compute the global rating.

To identify malicious peers and to prevent the

spreading of malicious content, a reputation-based ar-

chitecture is proposed in (Selcuk et al., 2004). The

protocol aims to distinguish malicious responses from

benign ones, by using the reputation of the peers

which provide them. The protocol relies on the P2P


infrastructure to obtain the necessary reputation infor-

mation when it is not locally available at the querying

peer. The outcomes of past transactions are stored in

trust vectors; every peer maintains a trust vector for

every other peer it has dealt with in the past. The trust

query process is similar to the file query process ex-

cept that the subject of the query is a peer about whom

trust information is inquired. The responses are sorted

and weighted by the credibility rating of the respon-

der, derived from the credibility vectors maintained

by the local peer, which are similar to the trust vec-

tors.

In (Garg et al., 2005), the use of a scheme named

ROCQ (Reputation, Opinion, Credibility and Quality)

in a collaborative content-distribution system is ana-

lyzed. ROCQ computes global reputation values for

peers on the basis of first-hand opinions of transac-

tions provided by participants. Global reputation val-

ues are stored in a decentralized fashion using multi-

ple score managers for each individual peer. When a

peer wishes to interact with another peer, it retrieves

the reputation values for that peer from its score man-

agers. The final average reputation value is formed by

two aggregations, first at the score managers and sec-

ond at the requesting peer; if a peer has had interac-

tions with the prospective partner before, it may wish

to prefer its own first-hand experience to the informa-

tion being provided by the trust management system

or to use a combination of the global reputation and

its first hand experience.

All these works consider the situation in which a

peer with a bad reputation is simply isolated from the

system, while the analytical model we are proposing

describes different roles for peers, associated with different actions. So a peer suspected of malicious behaviour can first be degraded, and eventually isolated from the system. Our model also makes it possible to compute the probability that the next feedback for a peer will be positive, which (through the recovery window) allows the peer to increase its good ratio, and the maximum rate at which one or more malicious peers can provide negative feedbacks without affecting the peer's role.

7 CONCLUSIONS

In this work we have illustrated the analytical model

of a reputation management service for role-based

peergroups. The model defines some parameters and

indicators, such as the maximum tolerable rate of ma-

licious negative feedbacks. We applied the reputation

model to a four-role security policy, giving a param-

eter set for each role, and computing the theoretical

values for the main indicators. These results have

been confirmed by those we obtained from several

simulations, which we realized using a centralized

reputation management service.

Further work will follow two directions. To complete the analytical model, we must also consider malicious positive feedbacks. For example, we could check for suspiciously rapid increases in good ratios, and introduce a recovery window not only to prevent unjustified degradations, as in the current model, but also to counter malicious promotion attempts. Once the model is completed, and all parameters are tuned, we can search for the best distributed solution for reputation storage and retrieval.

ACKNOWLEDGEMENTS

This work has been partially supported by the “STIL”

regional project, and by the “WEB-MINDS” FIRB

project of the National Research Ministry.

REFERENCES

Amoretti, M., Zanichelli, F., and Conte, G. (2005). SP2A: a

Service-oriented Framework for P2P-based Grids. In

3rd International Workshop on Middleware for Grid

Computing, Co-located with Middleware 2005.

Barabási, A.-L. and Albert, R. (1999). Emergence of Scaling in Random Networks. Science, 286(5439):509–512.

Garg, A., Battiti, R., and Cascella, R. (2005). Reputation Management: Experiments on the Robustness of ROCQ.

Kamvar, S. D., Schlosser, M., and Garcia-Molina, H.

(2003). The Eigentrust Algorithm for Reputation

Management in peer-to-peer Networks. In The 12th

International World Wide Web Conference.

Lee, S. Y., Kwon, O.-H., Kim, J., and Hong, S. J. (2005). A Reputation Management System in Structured Peer-to-Peer Networks. In The 14th International Workshops on Enabling Technologies: Infrastructure for Collaborative Enterprise.

Mekouar, L., Iraqui, Y., and Boutaba, R. (2004a). Detecting

Malicious Peers in a Reputation-based peer-to-peer

System.

Mekouar, L., Iraqui, Y., and Boutaba, R. (2004b). A Reputa-

tion Management and Selection Advisor Schemes for

peer-to-peer Systems. In The 15th IFIP/IEEE Interna-

tional Workshop on Distributed Systems: Operations

and Management.

Selcuk, A. A., Uzun, E., and Pariente, M. R. (2004). A Reputation-Based Trust Management System for Peer-to-Peer Networks. In IEEE International Symposium on Cluster Computing and the Grid.

Ye, S., Makedon, F., and Ford, J. (2004). Collaborative Au-

tomated Trust Negotiation in Peer-to-Peer Systems.

In Fourth International Conference on Peer-to-Peer

Computing (P2P’04).
