PERFORMANCE OF ADAPTIVE TRACKING ALGORITHMS

Janeth Cruz, Leopoldo Altamirano and Josué Pedroza

National Institute of Astrophysics, Optics and Electronics

Enrique Erro No. 1, Sta. María Tonantzintla, Puebla, México

Keywords:

Adaptive tracking algorithms, Estimation filters, Performance evaluation.

Abstract:

This paper compares the performance of adaptive trackers based on multiple algorithms. The aim of using multiple algorithms is to increase the robustness of the trackers under varying conditions. We use two estimation algorithms, the UKF and the IMM filter, and measure tracking performance on outdoor scenes with occlusions. The purpose of this paper is to measure and evaluate how reliably each tracker determines the position of a target. Performance is evaluated with metrics computed against the truth track. We give a positional evaluation and statistical values of the performance of visual tracking systems that adapt to changing environments.

1 INTRODUCTION

Automatic tracking algorithms are employed in many industrial and military applications. In practice, automatic target tracking systems need to operate in dynamic environments and require highly accurate determination of target position, velocity, acceleration and other parameters in order to increase the detection probability and to reduce the probabilities of false alarms and missed targets.

There are many tracking algorithms. Correlation trackers (Kishore and Rao, 2001; Ronda and Shue, 2000) perform well with structured targets, even against highly cluttered backgrounds; however, correlation walk-off and false peaks are critical problems for these trackers. Centroid-based tracking algorithms are also used in surveillance systems (Jae-Soo Cho and Park, 2000). These trackers are especially susceptible to changes in object shape and orientation between successive images. Another approach is edge tracking, which performs poorly on low-contrast images.

In most cases, tracking algorithms fail because of low contrast, noise, and scale and illumination changes. Several approaches based on multiple trackers have been developed to improve the tracking of a moving target (Ronda and Shue, 2000; Kishore and Rao, 2001; Tao Yang and Li, 2005). However, these proposals do not solve the problem of the object being occluded along its trajectory. Solving it requires estimating the target position by means of motion models and estimation filters.

The aim of these approaches is to increase the adaptability of tracking to varying conditions, and the degree of adaptability of an algorithm can be assessed by measuring its uncertainty. Several techniques have therefore been proposed to measure and compare different tracking algorithms.

Some papers evaluate the performance of complete tracking algorithms through occlusions. In (J. Black and P., 2003) a methodology for evaluating the performance of tracking systems is presented; the authors test a tracking algorithm that employs a partial-observation tracking model for occlusion reasoning. Needham and Boyle (Needham and Boyle, 2003) compare two tracking systems on different objects using a set of metrics for positional evaluation. The work in (L. M. Brown and Lu, 2005) compares two background subtraction algorithms on indoor and outdoor scenes, counting the false negatives and false positives of each algorithm. Lefebvre et al. (Tine Lefebvre and Shutter, 2004) compare the quality of the estimates of the common Kalman filter variants for nonlinear systems, expressed in terms of consistency and information content. Hall et al. (D. Hall and Crowley, 2005) present and evaluate five adaptive background subtraction techniques with background models of different complexity.

In this paper, we compare three trackers based on multiple algorithms in order to maintain the target trajectory. To track objects through occlusions we use UKF and IMM filters. The trackers are evaluated on the same benchmark data set, which allows a more objective comparison. The metrics used to compare the trackers evaluate the estimated positions and the reliability of detection.

Cruz J., Altamirano L. and Pedroza J. (2006). PERFORMANCE OF ADAPTIVE TRACKING ALGORITHMS. In Proceedings of the First International Conference on Computer Vision Theory and Applications, pages 229-236

DOI: 10.5220/0001377702290236

Copyright © SciTePress

The paper is structured as follows. Section 2 presents a brief review of tracking algorithms. Section 3 describes the motion models and estimation filters used to increase the robustness of the algorithms. Section 4 describes the process used to obtain the truth track and the performance evaluation metrics applied. Section 5 describes the data set and shows the performance results on it. Finally, Section 6 gives our conclusions from the comparative analysis.

2 TRACKING ALGORITHMS

Tracking algorithms fall into two main categories: region trackers and edge trackers. A region tracker selects a region of the image as the search pattern and uses a similarity measure to find the best-matching region in the next image. These algorithms fail when the object size, the illumination or the surface reflectance changes. Their challenges are to adapt gradually to changing image conditions and to avoid slowly drifting from the tracked region into the background.

On the other hand, edge trackers follow edges produced by changes in the reflected light (variations in colour or illumination). However, an edge detection algorithm requires smoothing and differentiation of the image. Differentiation is an ill-conditioned problem, and smoothing results in a loss of information. Therefore, it is difficult to design a general edge detection algorithm which performs well in many contexts.

2.1 Correlation

Correlation is performed by overlaying a correlation window holding a reference image at the location of each pixel in a search region of the current frame. A correlation metric is used to determine the best match of the correlation window in the current image. The most similar image portion is registered as the new reference image for correlation in the next frame.
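The search-and-register loop just described can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `correlation_step`, the square search region and the sum of squared differences (SSD) used as the similarity score are our own choices; the paper's confidence measure, MSFNCA, is defined below.

```python
import numpy as np

def correlation_step(frame, ref, top_left, radius):
    """One step of a region (correlation) tracker: illustrative sketch.

    frame    : 2-D array, current image
    ref      : M x M array, reference image from the previous frame
    top_left : (row, col) of the previous match
    radius   : half-width of the square search region, in pixels

    Every M x M window whose top-left corner lies within `radius` of the
    previous match is scored with an SSD (lower is better; an assumed
    stand-in for the correlation metric). The best window is returned as
    the new reference, i.e. the reference is replaced in full.
    """
    ref = np.asarray(ref, dtype=float)
    M = ref.shape[0]
    H, W = frame.shape
    r0, c0 = top_left
    best_score, best_pos = np.inf, (r0, c0)
    for r in range(max(0, r0 - radius), min(H - M, r0 + radius) + 1):
        for c in range(max(0, c0 - radius), min(W - M, c0 + radius) + 1):
            window = frame[r:r + M, c:c + M].astype(float)
            score = float(np.sum((window - ref) ** 2))
            if score < best_score:
                best_score, best_pos = score, (r, c)
    r, c = best_pos
    new_ref = frame[r:r + M, c:c + M].astype(float)  # registered as next reference
    return best_pos, new_ref
```

Because the winning window becomes the next reference, a single wrong match propagates to every later frame, which is the false-registration risk discussed next.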

The correlation process might give an incorrect registration because of low contrast of the reference pattern, sensor noise, occlusion, etc. In applications where the reference has to be updated in each frame to reduce the effect of magnification, occlusion, etc., a single incorrect registration will lead to a false track. For these applications, confidence measures are needed to validate a correct registration. Ronda et al. (Ronda and Shue, 2000) define a confidence measure to prevent false updating of the reference pattern. They present a mean-subtracted fully normalized correlation algorithm (MSFNCA), an improvement on simple correlation whose objective is robustness to variations in image intensity. The confidence measure is the following:

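$$
\mathrm{MSFNCA}(i,j)=\frac{\sum_{l}\sum_{m}\left(R(l,m)-\bar{R}\right)\left(S_{ij}(l,m)-\bar{S}_{ij}\right)}{\left[\sum_{l}\sum_{m}\left(R(l,m)-\bar{R}\right)^{2}\,\sum_{l}\sum_{m}\left(S_{ij}(l,m)-\bar{S}_{ij}\right)^{2}\right]^{1/2}}
=\frac{\sum_{l}\sum_{m}R(l,m)\,S_{ij}(l,m)-M^{2}\,\bar{S}_{ij}\,\bar{R}}{\left[\left(\sum_{l}\sum_{m}R^{2}(l,m)-M^{2}\bar{R}^{2}\right)\left(\sum_{l}\sum_{m}S_{ij}^{2}(l,m)-M^{2}\bar{S}_{ij}^{2}\right)\right]^{1/2}}
\tag{1}
$$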

where $R$ is the $M \times M$ reference image, $S_{ij}$ denotes the $M \times M$ window of the search image at pixel $(i, j)$, and $\bar{R}$ and $\bar{S}_{ij}$ are their mean intensities.
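The two forms of equation 1 can be checked numerically. The NumPy sketch below is our own illustration (the function names are hypothetical). It also shows the intensity invariance that motivates MSFNCA: a brighter, higher-contrast copy of the reference still scores 1. Returning 0 for a perfectly flat patch, where the measure is undefined, is an assumption; the 0.3 default in `is_confident` is the threshold the paper reports for Tracker 1 in Section 2.4.

```python
import numpy as np

def msfnca(R, S):
    """MSFNCA, first form of Eq. 1: zero-mean normalized cross-correlation."""
    r = np.asarray(R, dtype=float)
    s = np.asarray(S, dtype=float)
    r = r - r.mean()                       # R(l, m) - R_bar
    s = s - s.mean()                       # S_ij(l, m) - S_bar_ij
    denom = np.sqrt(np.sum(r * r) * np.sum(s * s))
    if denom == 0.0:                       # flat patch: undefined, report 0 (assumption)
        return 0.0
    return float(np.sum(r * s) / denom)

def msfnca_expanded(R, S):
    """Second form of Eq. 1, written with raw sums and M^2 times the means."""
    R = np.asarray(R, dtype=float)
    S = np.asarray(S, dtype=float)
    m2 = R.size                            # M^2 pixels in the window
    num = np.sum(R * S) - m2 * S.mean() * R.mean()
    den = np.sqrt((np.sum(R ** 2) - m2 * R.mean() ** 2)
                  * (np.sum(S ** 2) - m2 * S.mean() ** 2))
    return float(num / den) if den > 0.0 else 0.0

def is_confident(score, threshold=0.3):
    """Accept a registration only if the confidence exceeds the threshold."""
    return score > threshold

rng = np.random.default_rng(0)
R = rng.random((15, 15))
S = 2.0 * R + 5.0                          # brighter, higher-contrast copy of R
print(round(msfnca(R, S), 6), is_confident(msfnca(R, S)))  # prints: 1.0 True
```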

Even with the MSFNCA confidence measure, the target might drift out of the reference image because of the discrete pixel size and the target motion. This drift has to be corrected locally using other detection algorithms.

2.2 Centroid Tracking

Centroid tracker is one of the most common algo-

rithms used (Jae-Soo Cho and Park, 2000), which

determines a target aim point by computing the in-

tensity or geometric centroid of the target object

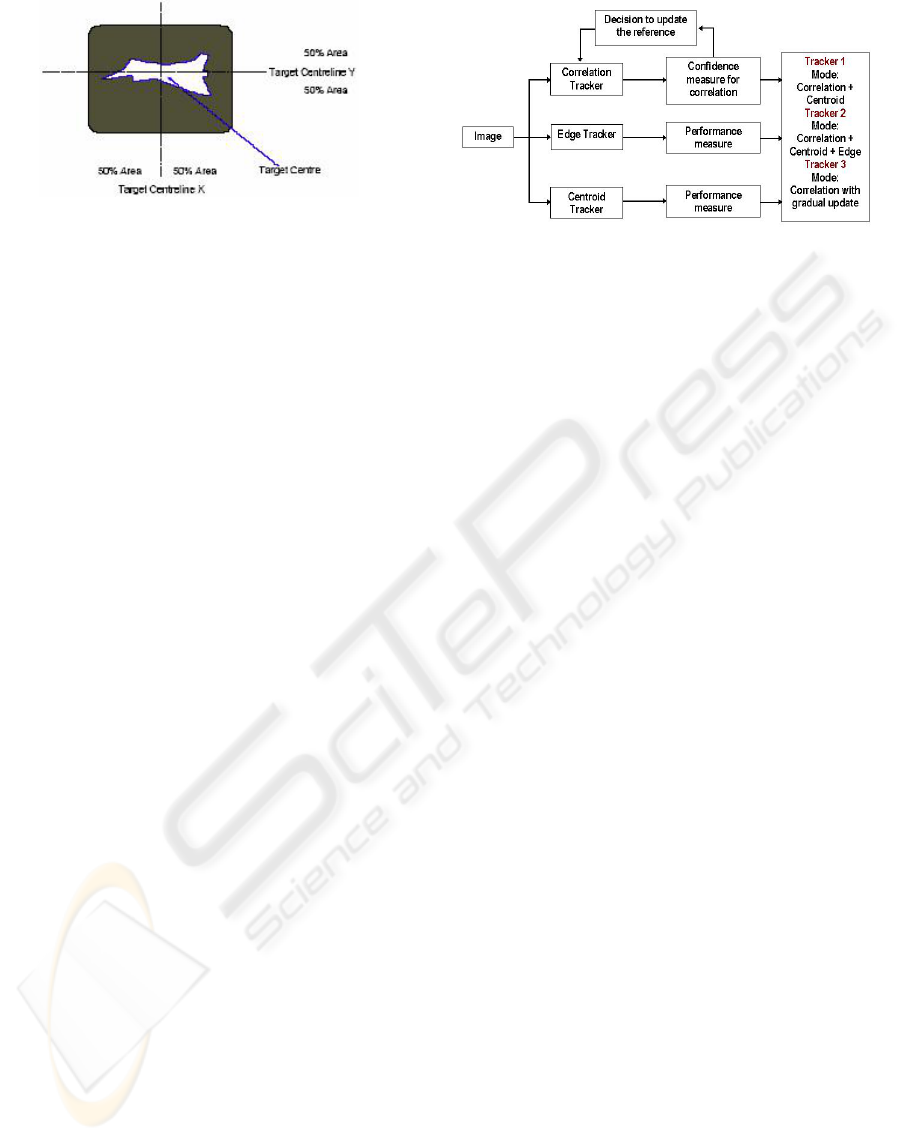

based on target segmentation process. The Figure 1

shows the target’s centroid. The performance of cen-

troid tracker is largely dependent on the segmentation

algorithm which needs to extract the moving tar-

get even in complex background conditions. There

are many algorithms for image segmentation (Sezgin

and Sankur, 2004), such as histogram-based meth-

ods, clustering-based methods and local methods that

adapt the threshold value to local image characteris-

tics. In this work, we define a threshold for each se-

quence.
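A minimal sketch of the two centroid definitions, using a single global threshold as in this work. The function name, the (row, col) coordinate convention and the assumption of a target brighter than its background with a non-negative threshold are ours, not the paper's.

```python
import numpy as np

def segment_and_centroid(image, threshold):
    """Global-threshold segmentation followed by the two centroid definitions.

    Pixels with intensity above `threshold` are labelled as target (assumes a
    target brighter than its background and threshold >= 0, so all weights are
    positive). Returns ((row, col) geometric centroid, (row, col) intensity
    centroid), or None if no pixel survives the threshold.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = np.nonzero(img > threshold)   # pixels assigned to the target
    if rows.size == 0:
        return None
    geometric = (rows.mean(), cols.mean())     # every target pixel counts equally
    w = img[rows, cols]                        # intensities used as weights
    intensity = ((rows * w).sum() / w.sum(), (cols * w).sum() / w.sum())
    return geometric, intensity
```

The geometric centroid ignores brightness, while the intensity centroid is pulled toward the hottest or brightest pixels; which one is preferable depends on how uniform the segmented target is.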

Figure 1: Centroid of a target.

VISAPP 2006 - MOTION, TRACKING AND STEREO VISION

2.3 Edge Tracking

Edge detection concerns the localization of grey-level variations in an image and the identification of the physical phenomena that originated them (Djemel Ziou, 1998). To detect the target edges we implemented the SUSAN algorithm, which performs edge and corner detection and structure-preserving noise reduction.

This algorithm is a type of neighborhood voting method that enhances the edges and corners of 2D images, with quite good speed and localization. SUSAN is implemented using digital approximations of circular masks (Smith and Brady, 1997). If the brightness of each pixel within a mask is compared with the brightness of the mask's nucleus (its center pixel), then an area of the mask can be defined that has the same brightness as the nucleus. In this approach no image derivatives are used and no noise reduction is needed. However, the method produces incorrect results with noisy images.
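The USAN area idea can be sketched with NumPy, following Smith and Brady's formulation as we understand it: each pixel in a 37-pixel circular mask (radius 3.4) votes for the nucleus with weight exp(-((I - I0)/t)^6), the votes sum to the USAN area n, and the edge response is g - n wherever n is below the geometric threshold g = 3/4 of the mask size. The brightness threshold t = 20, the edge-replicated borders and the omission of SUSAN's non-maximum suppression and corner stage are simplifications of ours.

```python
import numpy as np

def susan_edge_response(image, t=20.0, radius=3.4):
    """Initial SUSAN edge response (sketch): g - n where n < g, else 0."""
    img = np.asarray(image, dtype=float)
    r = int(np.floor(radius))
    # Digital approximation of a circular mask: 37 offsets for radius 3.4.
    offsets = [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
               if dy * dy + dx * dx <= radius * radius]
    pad = np.pad(img, r, mode="edge")          # replicate borders (assumption)
    H, W = img.shape
    n = np.zeros_like(img)                     # USAN area for every nucleus
    for dy, dx in offsets:
        shifted = pad[r + dy:r + dy + H, r + dx:r + dx + W]
        n += np.exp(-((shifted - img) / t) ** 6)   # brightness-similarity vote
    g = 0.75 * len(offsets)                    # geometric threshold for edges
    return np.where(n < g, g - n, 0.0)
```

No derivatives appear anywhere: a pixel responds only when fewer than three quarters of its mask neighbours share its brightness, which is what happens next to an edge.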

2.4 Adaptive Trackers

In tracking applications where the target frequently changes shape and size, conventional algorithms such as correlation are also applied. However, if a false registration occurs and goes undetected, the system can fail, because the reference pattern is updated in each frame.

The false registration problem occurs when the reference pattern drifts away from the target area. To solve this problem, some works use correlation algorithms such as MSFNCA with a confidence measure, which ensures better registration than simple correlation. This correlation performs well in many situations; however, the drift problem and incorrect registration still persist.

In cases where the information from one algorithm is not sufficient to maintain the target trajectory, multiple tracking algorithms can be applied to increase the reliability of the system.

Figure 2 shows the three trackers used in this paper. Tracker 1 (Correlation + Centroid) performs correlation tracking and, if the correlation coefficient is greater than a threshold, applies the centroid algorithm; we set the threshold of the confidence measure to 0.3. Tracker 2 (Correlation + Centroid + Edges) combines three algorithms to maintain the true trajectory, integrating an edge detection algorithm to increase performance. Tracker 3 (Correlation with gradual updating) performs correlation with a reference image that is updated at the next frame, each pixel $(i, j)$ of the reference image being replaced according to equation 2:

$$
R_{ij}(t+1)=\alpha\,R_{ij}(t)+(1-\alpha)\,S_{ij}(t)
\tag{2}
$$

where $\alpha$ was set to 0.01.

Figure 2: Tracking with multiple algorithms.
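Equation 2 is a pixel-wise exponential blend, sketched below (the function name is hypothetical). Note that α weights the old reference: with α = 0.01 the new reference is 99% the window matched in the current frame, and after k consecutive updates the original reference contributes with weight α^k.

```python
import numpy as np

def gradual_update(R, S, alpha=0.01):
    """Eq. 2, pixel-wise: R(t+1) = alpha * R(t) + (1 - alpha) * S(t).

    R : current reference image; S : window matched in the current frame.
    alpha weights the old reference, so alpha = 0.01 keeps 1% of it.
    """
    R = np.asarray(R, dtype=float)
    S = np.asarray(S, dtype=float)
    return alpha * R + (1.0 - alpha) * S

R = np.zeros((4, 4))
S = np.full((4, 4), 100.0)
R_next = gradual_update(R, S)          # every pixel becomes 0.01*0 + 0.99*100
```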

3 ROBUST TRACKING

Occlusion is one of the main problems in maintaining target trajectories, because target features are lost during an occlusion. In the absence of information about the target, the state prediction can therefore be useful. In this paper, robust tracking is achieved by an Unscented Kalman filter (UKF) and an Interacting Multiple Model filter (IMM).

Predicting the object state requires a motion model that represents the kinematics of the object. For this comparative analysis we use two mathematical models (Li, 2000): the constant acceleration model (AC) and the constant velocity model (VC). The state space of the models is four-dimensional, defined by the x- and y-position and the x- and y-velocity.
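As an illustration of how a motion model bridges an occlusion, the sketch below runs a linear Kalman filter with the constant-velocity (VC) model on the four-dimensional state [x, y, vx, vy]. When no measurement is available (`z is None`), the filter only propagates the prediction. The state ordering, unit time step, white-noise-acceleration process noise and noise levels are our assumptions; the paper does not specify them.

```python
import numpy as np

def cv_model(dt=1.0, q=1.0, r=1.0):
    """Constant-velocity model for the state [x, y, vx, vy] (assumed ordering)."""
    F = np.array([[1.0, 0.0, dt, 0.0],
                  [0.0, 1.0, 0.0, dt],
                  [0.0, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0]])
    H = np.array([[1.0, 0.0, 0.0, 0.0],      # only the position is measured
                  [0.0, 1.0, 0.0, 0.0]])
    G = np.array([[dt ** 2 / 2, 0.0],
                  [0.0, dt ** 2 / 2],
                  [dt, 0.0],
                  [0.0, dt]])
    Q = q * G @ G.T                           # white-noise acceleration (assumption)
    Rm = r * np.eye(2)                        # measurement noise covariance
    return F, H, Q, Rm

def kf_step(x, P, z, F, H, Q, Rm):
    """Predict, then correct with measurement z unless z is None (occlusion)."""
    x = F @ x
    P = F @ P @ F.T + Q
    if z is None:                             # target occluded: keep the prediction
        return x, P
    S = H @ P @ H.T + Rm                      # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)            # Kalman gain
    x = x + K @ (np.asarray(z, dtype=float) - H @ x)
    P = (np.eye(x.size) - K @ H) @ P
    return x, P

F, H, Q, Rm = cv_model()
x, P = np.array([0.0, 0.0, 1.0, 2.0]), np.eye(4)
for z in [(1.0, 2.0), (2.1, 3.9), None, None, (5.0, 10.2)]:  # two occluded frames
    x, P = kf_step(x, P, z, F, H, Q, Rm)
```

During the occluded frames the covariance grows, so the first measurement after the occlusion receives a larger gain and pulls the estimate back onto the target.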

3.1 Estimation Filters

The Unscented Kalman filter (UKF) is a minimum mean squared error (MMSE) state estimator for nonlinear systems (Julier and Uhlmann, 1997). To capture the effect of nonlinear and non-Gaussian models, this filter uses a deterministic, sample-based approximation. Its basic component is the unscented transformation, which uses a set of appropriately chosen weighted points to parameterize the means and covariances of probability distributions.
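The unscented transformation at the core of the UKF can be sketched as follows, in the symmetric sigma-point form with a scaling parameter κ. The choice κ = 1, the Cholesky factor as the matrix square root and the function names are our assumptions; a full UKF applies this transform in both the prediction and the measurement-update steps.

```python
import numpy as np

def unscented_transform(f, mean, cov, kappa=1.0):
    """Propagate (mean, cov) through f using 2n + 1 weighted sigma points."""
    n = mean.shape[0]
    L = np.linalg.cholesky((n + kappa) * cov)  # columns give the sigma offsets
    sigmas = ([mean]
              + [mean + L[:, i] for i in range(n)]
              + [mean - L[:, i] for i in range(n)])
    weights = np.full(2 * n + 1, 1.0 / (2.0 * (n + kappa)))
    weights[0] = kappa / (n + kappa)           # weights sum to 1
    ys = np.array([f(s) for s in sigmas])      # transformed sigma points
    y_mean = weights @ ys
    diffs = ys - y_mean
    y_cov = (weights[:, None] * diffs).T @ diffs   # sum_i w_i d_i d_i^T
    return y_mean, y_cov

def polar_to_cartesian(v):
    """Example nonlinearity: (range, bearing) to (x, y)."""
    rho, bearing = v
    return np.array([rho * np.cos(bearing), rho * np.sin(bearing)])

m, P = np.array([10.0, 0.5]), np.diag([0.25, 0.01])
mean_xy, cov_xy = unscented_transform(polar_to_cartesian, m, P)
```

For a linear function the transform reproduces the exact mean and covariance; for nonlinear ones it captures the mean and covariance more accurately than a first-order linearization would, without computing Jacobians.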

Moreover, the IMM approach estimates a target

state when the target maneuver is unsure and it is sub-

ject to changes (Bar-Shalom and Blair, 2000). The

PERFORMANCE OF ADAPTIVE TRACKING ALGORITHMS

231

Figure 3: IMM filter.

IMM includes a finite set M of different maneuver

models that cover the possible maneuver spectrum.

Each model m ∈ M describes a different dynamical

system.

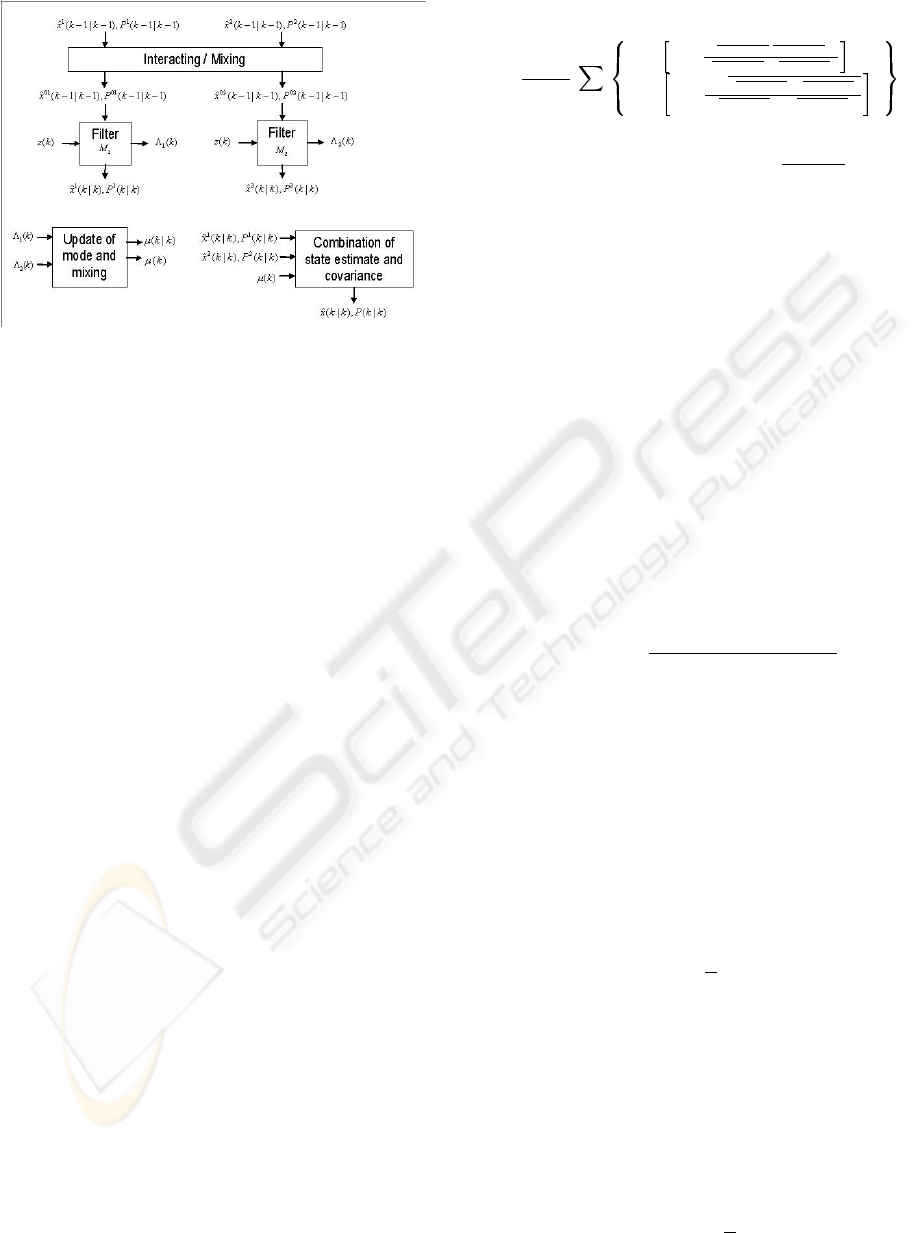

One cycle of IMM estimator includes the following

steps (Figure 3): interaction/mixing, filter M

r

, update

of mode and mixing probabilities, and combination

of state estimate and covariance. The IMM mode-set

was designed with two linear Kalman filters and two

motion models (AC and VC).
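The model-independent parts of an IMM cycle (mixing, mode-probability update and combination) follow the standard equations and can be sketched as below. The function names and the switching matrix `Pi` are illustrative assumptions; the mode-matched filtering step, which in this paper would run one Kalman filter per model (VC and AC) from the mixed initial conditions and return each filter's measurement likelihood, is left out.

```python
import numpy as np

def imm_mix(mu, Pi, xs, Ps):
    """Interaction/mixing step.

    mu : (r,) mode probabilities from the previous cycle
    Pi : (r, r) Markov switching matrix, Pi[i, j] = P(mode j now | mode i before)
    xs, Ps : per-mode state estimates and covariances from the previous cycle
    Returns predicted mode probabilities c and the mixed initial conditions.
    """
    c = Pi.T @ mu                              # c_j = sum_i Pi[i, j] * mu[i]
    mix = Pi * mu[:, None] / c[None, :]        # mix[i, j] = mu_{i|j}
    x0, P0 = [], []
    for j in range(len(mu)):
        xj = sum(mix[i, j] * xs[i] for i in range(len(mu)))
        Pj = sum(mix[i, j] * (Ps[i] + np.outer(xs[i] - xj, xs[i] - xj))
                 for i in range(len(mu)))
        x0.append(xj)
        P0.append(Pj)
    return c, x0, P0

def imm_mode_probabilities(c, likelihoods):
    """Mode probability update: mu_j proportional to Lambda_j * c_j."""
    w = c * np.asarray(likelihoods, dtype=float)
    return w / w.sum()

def imm_combine(mu, xs, Ps):
    """Combine the mode-matched estimates into one state and covariance."""
    x = sum(m * xi for m, xi in zip(mu, xs))
    P = sum(m * (Pm + np.outer(xi - x, xi - x)) for m, xi, Pm in zip(mu, xs, Ps))
    return x, P
```

The spread term in `imm_combine` inflates the covariance when the models disagree, which is how the IMM expresses uncertainty about the current maneuver.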

4 CONFIDENCE MEASURES

To achieve reliable performance, confidence measures of the results are necessary. There are many error metrics for tracking, and they can be divided into two categories: (1) statistical methods that compare the measurement data obtained from the tracker with the truth data, and (2) accuracy measures.

Our analysis compares the tracking algorithms, their applicability to different scenes, and the error of the prediction process. First, we compare the trajectories using positional metrics; then we assess the performance of the robust trackers with accuracy measures.

4.1 Truth Track

To identify trajectories of poor quality for tracking, the truth tracks are checked for consistency with respect to path coherence (Xu and Ellis, 2002; J. Black and P., 2003). The objective of applying this measure is to account for the complexity of the target motion in each scene.

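The path coherence is given by:

$$
\varepsilon_{pc}=\frac{1}{N-2}\sum_{k=2}^{N-1}\left[w_{1}\left(1-\frac{\overrightarrow{x_{k-1}x_{k}}\cdot\overrightarrow{x_{k}x_{k+1}}}{\left\|\overrightarrow{x_{k-1}x_{k}}\right\|\,\left\|\overrightarrow{x_{k}x_{k+1}}\right\|}\right)+w_{2}\left(1-\frac{2\sqrt{\left\|\overrightarrow{x_{k-1}x_{k}}\right\|\,\left\|\overrightarrow{x_{k}x_{k+1}}\right\|}}{\left\|\overrightarrow{x_{k-1}x_{k}}\right\|+\left\|\overrightarrow{x_{k}x_{k+1}}\right\|}\right)\right]
\tag{3}
$$

where $N$ is the number of frames and $\overrightarrow{x_{k-1}x_{k}}$ is the vector representing the positional shift of the tracked target between frames $k-1$ and $k$. $w_1$ and $w_2$ are weighting factors that define the contribution of the two components of the measure (direction and speed); both weights were set to 0.5.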
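Equation 3 translates directly into code. In this sketch (hypothetical function name) the direction term of a frame is set to 0 when one of the two shifts has zero length, and the speed term to 0 when both do; Equation 3 leaves these degenerate cases undefined, so that handling is our assumption. A straight path at constant speed scores 0, while a 90° turn at constant speed adds w1 to the sum.

```python
import numpy as np

def path_coherence(points, w1=0.5, w2=0.5):
    """Path coherence (Eq. 3) of a track given as an (N, 2) array of positions."""
    p = np.asarray(points, dtype=float)
    if len(p) < 3:
        raise ValueError("need at least three positions")
    total = 0.0
    for k in range(1, len(p) - 1):             # k = 2 .. N-1 in 1-based indexing
        a = p[k] - p[k - 1]                    # shift from x_{k-1} to x_k
        b = p[k + 1] - p[k]                    # shift from x_k to x_{k+1}
        na, nb = np.linalg.norm(a), np.linalg.norm(b)
        # Direction term: 1 - cos(angle between consecutive shifts).
        direction = 0.0 if na == 0 or nb == 0 else 1.0 - (a @ b) / (na * nb)
        # Speed term: 1 - (geometric mean / arithmetic mean) of the two speeds.
        speed = 0.0 if na + nb == 0 else 1.0 - 2.0 * np.sqrt(na * nb) / (na + nb)
        total += w1 * direction + w2 * speed
    return total / (len(p) - 2)
```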

4.2 Comparison of Trajectories

To compare trajectories, we apply some of the metrics used in (Tine Lefebvre and Shutter, 2004). One of these measures is the displacement and distance between the target trajectory from the tracker, $T_E$, with positions $(x_i, y_i)$, and the truth trajectory $T_T$, with positions $(p_i, q_i)$. The displacement $\mathbf{d}_i$ between the trajectories at time $i$ is calculated using equation 4.

$$
\mathbf{d}_i=(p_i,q_i)-(x_i,y_i)=(p_i-x_i,\;q_i-y_i)
\tag{4}
$$

The distance between the positions at time $i$ is therefore given by equation 5.

$$
d_i=\left|\mathbf{d}_i\right|=\sqrt{(p_i-x_i)^2+(q_i-y_i)^2}
\tag{5}
$$

Another metric is the optimal spatial translation (shift) $\hat{\mathbf{d}}$ between $T_E$ and $T_T$. This metric shows how closely two trajectories are related once a constant offset between them is removed (Needham and Boyle, 2003). It is defined by equation 6:

$$
\mu\left(D\left(T_E+\hat{\mathbf{d}},\,T_T\right)\right)
\tag{6}
$$

where $\hat{\mathbf{d}}$ is the average displacement between the two trajectories, calculated by equation 7:

$$
\hat{\mathbf{d}}=\mu(\mathbf{d}_i)=\frac{1}{n}\sum_{i=1}^{n}\mathbf{d}_i
\tag{7}
$$
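Equations 4 to 7 map onto a few vectorized NumPy operations. This sketch assumes both trajectories are frame-aligned arrays of equal length; the function name and dictionary keys are hypothetical. The value of equation 6 is the mean of the returned `"shifted_distance"` array.

```python
import numpy as np

def trajectory_metrics(estimated, truth):
    """Positional metrics of Eqs. 4-7 for two equally long, frame-aligned tracks.

    estimated : (n, 2) array of tracker positions (x_i, y_i), trajectory T_E
    truth     : (n, 2) array of truth positions (p_i, q_i), trajectory T_T
    """
    TE = np.asarray(estimated, dtype=float)
    TT = np.asarray(truth, dtype=float)
    disp = TT - TE                              # Eq. 4: d_i = (p_i - x_i, q_i - y_i)
    dist = np.linalg.norm(disp, axis=1)         # Eq. 5: d_i = |d_i|
    d_hat = disp.mean(axis=0)                   # Eq. 7: mean displacement vector
    shifted = np.linalg.norm(TT - (TE + d_hat), axis=1)  # distances in D(T_E + d_hat, T_T)
    return {"displacement": disp, "distance": dist,
            "shift": d_hat, "shifted_distance": shifted}
```

A tracker with a constant bias (for example, always aiming at a corner of the target rather than its center) has large distances but near-zero shifted distances, which is why the paper reports both D(TE,TT) and D(TE+d,TT).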

Finally, to describe the data obtained above and evaluate the trackers, we compute statistics of the displacement, distance and shift between two trajectories. These statistics give quantitative information about the distribution of the data: the mean, median, standard deviation, minimum and maximum values.

The mean is calculated as follows:

$$
\mu\left(D(T_T,T_E)\right)=\frac{1}{n}\sum_{i=1}^{n}d_i
\tag{8}
$$


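where $n$ is the number of frames. The median is obtained by equation 9, with the distances $d_i$ sorted in ascending order, and the standard deviation by equation 10.

$$
\operatorname{median}\left(D(T_T,T_E)\right)=
\begin{cases}
d_{\frac{n+1}{2}} & \text{if } n \text{ odd}\\[4pt]
\dfrac{d_{\frac{n}{2}}+d_{\frac{n}{2}+1}}{2} & \text{if } n \text{ even}
\end{cases}
\tag{9}
$$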

$$
\sigma\left(D(T_T,T_E)\right)=\sqrt{\frac{\sum_{i=1}^{n}\left(d_i-\mu(d_i)\right)^2}{n}}
\tag{10}
$$

Equations 11 and 12 give the minimum and maximum distance values, respectively.

$$
\min\left(D(T_T,T_E)\right)=\text{the smallest } d_i
\tag{11}
$$

$$
\max\left(D(T_T,T_E)\right)=\text{the largest } d_i
\tag{12}
$$
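Equations 8 to 12 can be computed together in one pass over the sorted distances. This is a sketch with a hypothetical function name; it follows the 1-based indices of equation 9 and divides by n in equation 10 (population standard deviation), exactly as written above.

```python
import numpy as np

def distance_stats(d):
    """Mean, median, std, min and max of distances d_i (Eqs. 8-12)."""
    d = np.sort(np.asarray(d, dtype=float))    # Eq. 9 assumes ascending order
    n = d.size
    mean = d.sum() / n                         # Eq. 8
    if n % 2 == 1:
        median = d[(n + 1) // 2 - 1]           # d_{(n+1)/2}, shifted to 0-based
    else:
        median = (d[n // 2 - 1] + d[n // 2]) / 2.0   # (d_{n/2} + d_{n/2+1}) / 2
    std = np.sqrt(np.sum((d - mean) ** 2) / n) # Eq. 10 divides by n
    return {"mean": mean, "median": median, "std": std,
            "min": d[0], "max": d[-1]}         # Eqs. 11 and 12
```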

4.3 Surveillance Metrics

We also use the metrics described in (J. Black and P., 2003) to measure tracking performance. We obtain the tracker detection rate (TRDR) for each image sequence using equation 13.

$$
\mathrm{TRDR}=\frac{\text{Total True Positives}}{\text{Total Number of Truth Points}}
\tag{13}
$$

The false alarm rate (FAR) and the TRDR characterize the tracking performance. FAR is given by equation 14.

$$
\mathrm{FAR}=\frac{\text{Total False Positives}}{\text{Total True Positives}+\text{Total False Positives}}
\tag{14}
$$

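The object tracking error (OTE) is another metric; it gives the mean distance between the real and estimated trajectories and is obtained by equation 15.

$$
\mathrm{OTE}=\frac{1}{N_{rg}}\sum_{\exists i\; g(t_i)\wedge r(t_i)}\sqrt{(p_i-x_i)^2+(q_i-y_i)^2}
\tag{15}
$$

where the sum runs over the frames $t_i$ in which both the ground-truth track $g$ and the tracker result $r$ have a position, and $N_{rg}$ is the number of such frames.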
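The surveillance metrics are simple ratios plus a restricted mean distance. In the sketch below the function names, and the representation of each track as a dictionary from frame index to (x, y), are our assumptions. As a sanity check, FAR = 7 / (416 + 7) ≈ 0.016548 agrees with the first column of Table 5.

```python
import numpy as np

def trdr_far(true_positives, false_positives, truth_points):
    """Tracker detection rate (Eq. 13) and false alarm rate (Eq. 14)."""
    trdr = true_positives / truth_points
    far = false_positives / (true_positives + false_positives)
    return trdr, far

def object_tracking_error(truth, result):
    """OTE (Eq. 15): mean distance over frames present in both tracks.

    truth, result : dicts mapping frame index -> (x, y) (assumed representation)
    """
    common = sorted(set(truth) & set(result))  # frames where g(t_i) and r(t_i) exist
    if not common:
        return float("nan")
    errors = [np.hypot(truth[t][0] - result[t][0], truth[t][1] - result[t][1])
              for t in common]
    return float(np.mean(errors))
```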

5 EXPERIMENTS

We compared the performance of three adaptive tracking algorithms, which are made robust to occlusions by estimation filters. Experiments were performed on six real image sequences and two synthetic sequences, including both infrared and visible images. Frames are 640x480 pixels for the real sequences and 752x512 pixels for the synthetic sequences.

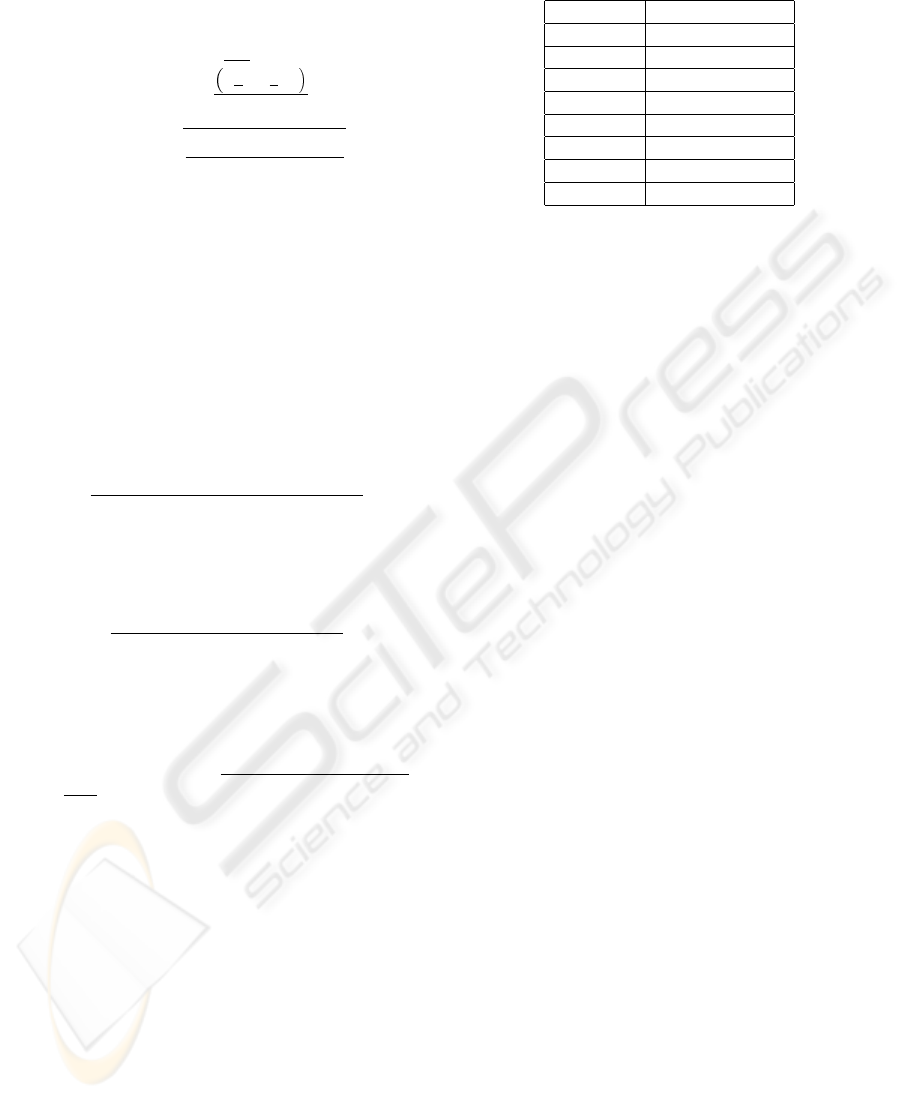

Figure 4(a) shows an infrared sequence in which a ship is the target (referred to as sequence 1). The target was tracked over 422 frames and undergoes 40 occlusions along its trajectory. In Figure 4(b) (sequence 2), a car is the object of interest, tracked under occlusions in an infrared sequence; the car is occluded in 75 of the 575 frames of this sequence. Figure 4(c) (sequence 3) shows a frame from a visible sequence in which the object of interest is a boat moving horizontally, starting in the top-left corner of the image. This sequence has 474 frames, and the boat is occluded in 75 of them. Figure 4(d) shows a synthetic sequence with a pattern size of 35 pixels; it includes 500 frames, 45 of which were used to simulate occlusions.

Table 1: Path coherence.

Sequence Path coherence

1 0.157287

2 0.11906

3 0.499223

4 0.500005

5 0.332765

6 0.206209

7 0.045832

8 0.03558

5.1 Performance and Results

The path coherence obtained for each sequence is presented in Table 1. Half of the sequences have a high path coherence value, which indicates more complex target motion.

We then applied the metrics for comparing two trajectories.

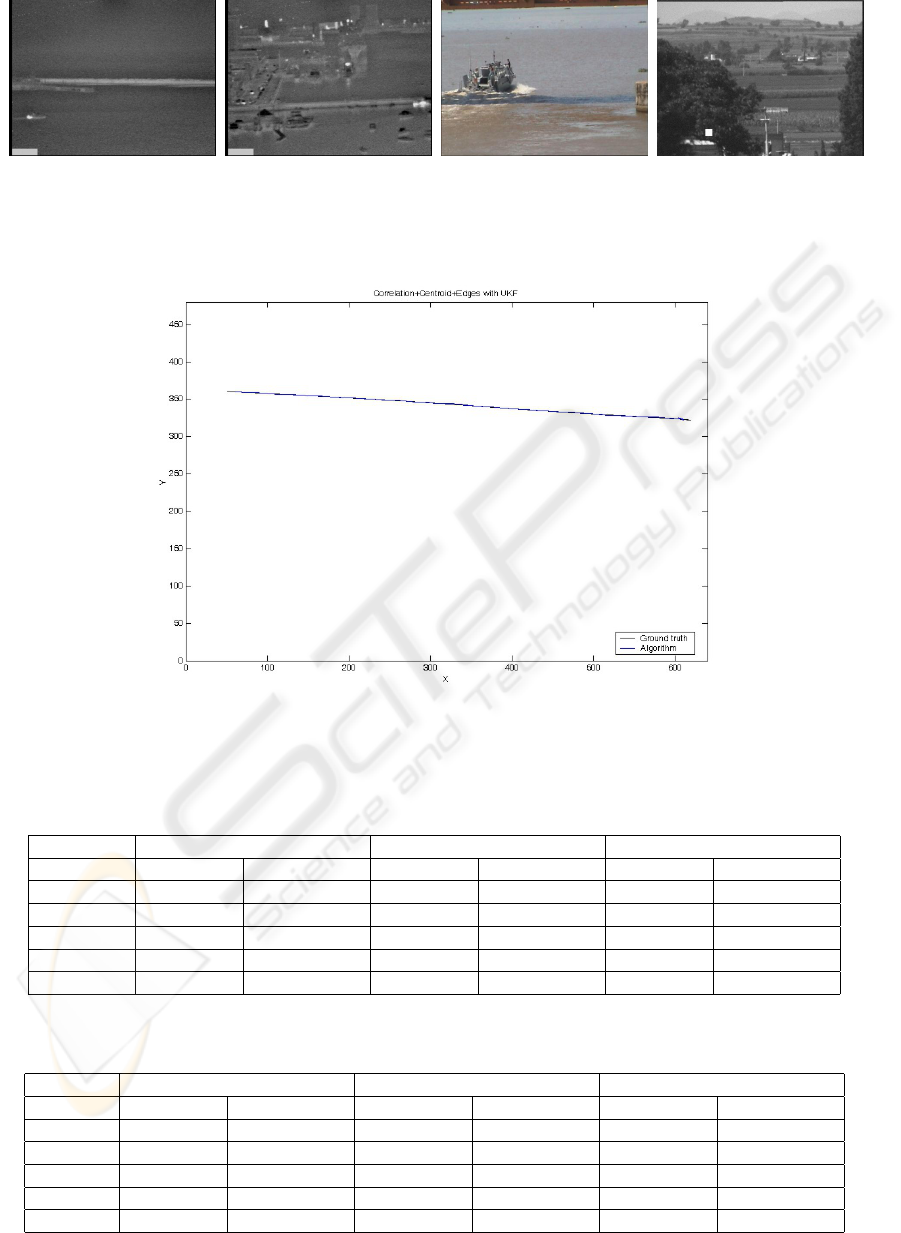

The Table 2 presents results from sequence 1 with

UKF algorithm. Tracker 2 provides the best results

in the evaluation of the tracked trajectory. The truth

trajectory and tracked trajectory are depicted in the

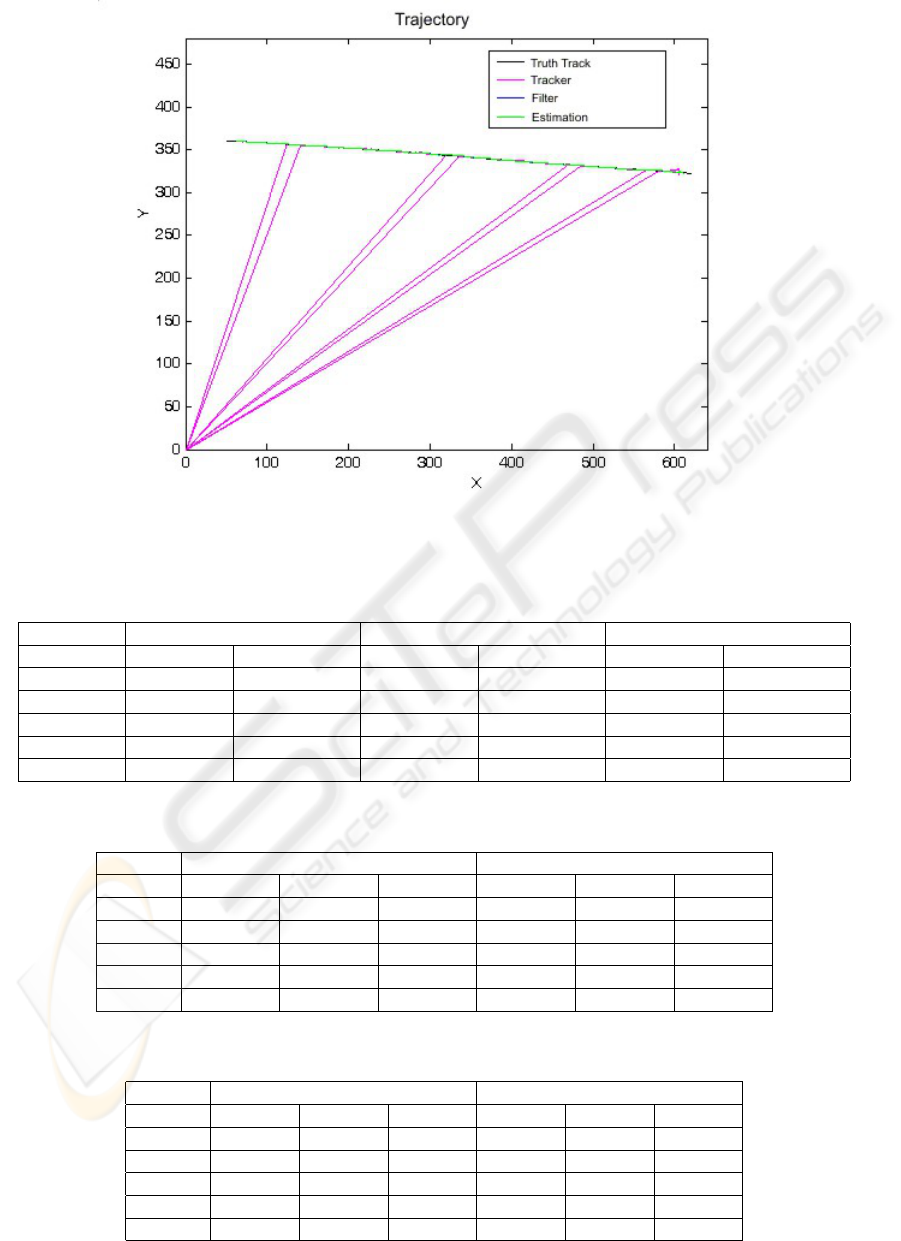

Figure 5. The Figure 6 shows the trajectory of the

sequence 1 with the simulated occlusions. The trajec-

tory obtained with the tracker (Correlation + Centroid

+ Edges) does not maintain a correct tracking of the

truth trajectory. However when the estimation filter is

used, the problem occlusion is solved.

Tables 3 and 4 summarize the results obtained on all sequences using trackers with the UKF and IMM filters, respectively. In both evaluations, correlation with gradual update has the best performance.

In the same way, we applied the surveillance metrics to the sequences. The results for the first sequence are shown in Table 5. Trackers with the UKF filter have higher detection rates than the IMM-based algorithms.

Finally, Table 6 shows the mean of the values obtained over all sequences. Algorithms with the UKF filter achieve better TRDR values, even when they detect more false alarms.


Figure 4: Examples used for tracking: (a) infrared image; (b) infrared image; (c) visible image; (d) synthetic visible image.

Figure 5: Trajectory of sequence 1.

Table 2: Results of the trajectory evaluation for sequence 1 using the UKF filter.

Tracker 1 2 3

Metric D(TE,TT) D(TE+d,TT) D(TE,TT) D(TE+d,TT) D(TE,TT) D(TE+d,TT)

Mean 5.387765 2.128756 3.073779 1.859341 5.336042 2.060513

Median 5.974945 1.178952 3.206711 0.931958 5.974945 1.148738

Std. Dev 2.104545 2.370386 1.744103 1.96903 1.979448 2.103242

Minimum 0.03705 0.022712 0.03705 0.094509 0.03705 0.051058

Maximum 14.296198 18.806309 13.806778 16.029772 13.788579 12.84164

Table 3: Mean results of the trajectory evaluation over all sequences using the UKF filter.

Tracker 1 2 3

Metric D(TE,TT) D(TE+d,TT) D(TE,TT) D(TE+d,TT) D(TE,TT) D(TE+d,TT)

Mean 4.9655835 3.8103235 4.7905565 3.8972085 4.4031985 2.99079

Median 3.683516 2.6102135 3.630965 2.588816 3.683516 2.05372

Std. Dev 5.368305 5.4243535 5.2744075 5.5185885 3.1629285 3.07067

Min 0.157494 0.0891545 0.2175505 0.140762 0.157494 0.08424

Max 31.596568 32.6933673 31.0513235 32.2474888 17.2029045 16.5863


Figure 6: Trajectory of sequence 1 with occlusions.

Table 4: Mean results of the trajectory evaluation over all sequences using the IMM filter.

Tracker 1 2 3

Metric D(TE,TT) D(TE+d,TT) D(TE,TT) D(TE+d,TT) D(TE,TT) D(TE+d,TT)

Mean 4.71262 3.886235 4.659543 3.985114 4.1161075 3.16768925

Median 3.2919788 2.59886025 3.54485625 2.69846925 3.42166425 2.27020875

Std. Dev 5.661622 5.61342775 5.52120525 5.6409165 3.59892 3.4811025

Minimum 3.092654 0.183317 0.2427665 0.22414025 0.05414675 0.09454175

Maximum 29.32515 30.695778 29.211101 29.919655 16.681788 18.091328

Table 5: Performance results on sequence 1.

Filter UKF IMM

Tracker 1 2 3 1 2 3

TP 416 419 420 414 416 415

FP 7 4 3 9 7 8

TRDR 0.983452 0.990544 0.992908 0.978723 0.983452 0.981087

FAR 0.016548 0.009456 0.007092 0.021277 0.016548 0.018913

OTE 5.745404 3.246693 5.635883 5.658996 3.258433 5.579214

Table 6: Tracking performance (mean over all sequences).

Filter UKF IMM

Tracker 1 2 3 1 2 3

TP 685.75 684.25 691.75 687.5 687.5 688

FP 60.75 62.25 54.75 59 59 58.5

TRDR 0.92566 0.92119 0.93981 0.92009 0.92009 0.92111

FAR 0.74364 0.07882 0.06019 0.07992 0.07992 0.07881

OTE 5.41712 5.28336 4.73812 5.07317 5.07317 4.51656


6 CONCLUSION

In this paper we presented three adaptive tracking algorithms: (1) MSFNCA + Centroid, (2) MSFNCA + Centroid + Edges, and (3) Correlation with gradual update. The trackers adapt to different conditions by means of confidence measures that indicate the best correlation, reducing the possibility of drift.

Moreover, these algorithms can follow a target trajectory even under occlusions by using UKF and IMM filters with constant acceleration and constant velocity motion models. We computed the path coherence of each sequence to assess its complexity before applying the tracking algorithms. Tracking performance was measured on real and synthetic sequences, using metrics that compare the truth trajectory with the tracked trajectory. Furthermore, we evaluated the algorithms by counting both false alarms and correct detections. Correlation with gradual update improves tracking and increases adaptability to changing environments. The UKF filter performed slightly better than the IMM estimator, even when occlusions occur.

REFERENCES

Bar-Shalom, Y. and Blair, W. D. (2000). Multitarget-Multisensor Tracking: Applications and Advances, Vol. III. Artech House, Norwood, MA.

D. Hall, J. Nascimento, P. R. E. A. P. M. S. P. T. L.-R. E. R. F. J. S. and Crowley, J. (2005). Comparison of target detection algorithms using adaptive background models. In Proceedings of the International Workshop on Performance Evaluation of Tracking and Surveillance.

Djemel Ziou, S. T. (1998). Edge detection techniques: an overview. In International Journal of Pattern Recognition and Image Analysis.

J. Black, E. T. and P., R. (2003). A novel method for video tracking performance evaluation. In Joint IEEE Int'l Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance (VS-PETS), pp. 125-132. IEEE.

Jae-Soo Cho, D.-J. K. and Park, D.-J. (2000). Robust centroid target tracker based on new distance features in cluttered image sequences. In IEICE Trans. Inf. & Syst., Vol. E83-D, No. 12.

Julier, S. J. and Uhlmann, J. K. (1997). A new extension of the Kalman filter to nonlinear systems. In Proceedings of SPIE AeroSense Symposium. SPIE.

Kishore, M. S. and Rao, K. V. (2001). Robust correlation tracker. In Academy Proceedings in Engineering Sciences, Sadhana, Vol. 26, Part 3, pp. 227-236.

L. M. Brown, A. W. Senior, Y.-l. T. J. C. A. H. C.-f. S. H. M. and Lu, M. (2005). Performance evaluation of surveillance systems under varying conditions. In Int'l Workshop on Performance Evaluation of Tracking and Surveillance. IEEE.

Li, X. R. (2000). A survey of maneuvering target tracking: Dynamic models. In Proceedings of SPIE Conference on Signal and Data Processing of Small Targets, Vol. 4048, pp. 212-235. SPIE.

Needham, C. J. and Boyle, R. D. (2003). Performance evaluation metrics and statistics for positional tracker evaluation. In Proceedings of ICVS 2003, LNCS 2626, pp. 278-289. Springer-Verlag.

Ronda, V. and Shue, L. (2000). Multi-mode signal processor for imaging infrared seeker. In Proceedings of the SPIE, Acquisition, Tracking and Pointing, Vol. 4025, pp. 142-150. SPIE.

Sezgin, M. and Sankur, B. (2004). Survey over image thresholding techniques and quantitative performance evaluation. In Journal of Electronic Imaging, Vol. 13, No. 1, pp. 146-165. SPIE.

Smith, S. M. and Brady, J. (1997). SUSAN: a new approach to low level image processing. In International Journal of Computer Vision, Vol. 23, No. 1.

Tao Yang, Stan Z. Li, Q. P. and Li, J. (2005). Real-time multiple objects tracking with occlusion handling in dynamic scenes. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), Vol. 1, 20-25, pp. 970-975. IEEE.

Tine Lefebvre, H. B. and Shutter, J. D. (2004). Kalman filters for nonlinear systems: a comparison of performance. In International Journal of Control, Vol. 77, No. 7, pp. 639-653.

Xu, M. and Ellis, T. (2002). Partial observation vs. blind tracking through occlusion. In Proceedings of the 13th British Machine Vision Conference (BMVC 2002).
