MIXED REALITY FOR EXPLORING URBAN ENVIRONMENTS

Fotis Liarokapis, David Mountain, Stelios Papakonstantinou, Vesna Brujic-Okretic, Jonathan Raper

giCentre, Department of Information Science, School of Informatics, City University, London EC1V 0HB

Keywords: Mobile Interfaces, Augmented and Virtual Environments, Virtual Tours, Human-computer interaction.

Abstract: In this paper we propose the use of a specific mobile system architecture for navigation in urban

environments. The aim of this work is to evaluate how virtual and augmented reality interfaces can provide

location and orientation-based services using different technologies. The virtual reality interface is entirely

based on sensors to detect the location and orientation of the user while the augmented reality interface uses

computer vision techniques to capture patterns from the real environment. The knowledge obtained from the

evaluation of the virtual reality experience has been incorporated into the augmented reality interface. Some

initial results in our experimental augmented reality navigation are presented.

1 INTRODUCTION

Navigating in urban environments is one of the most

compelling challenges of wearable and ubiquitous

computing. Recent advances in positioning

technologies - as well as virtual reality (VR),

augmented reality (AR) and user interfaces (UIs) -

pose new challenges to researchers to create

effective wearable navigation environments.

Although a number of prototypes have been

developed in the past few years there is no system

that can provide a robust solution for unprepared

urban navigation. There has been significant

research in position and orientation navigation in

urban environments. Experimental systems that have

been designed range from simple location-based

services to more complicated virtual and augmented

reality interfaces.

An account of the user's cognitive environment is

required to ensure that representations are delivered

not just on technical but also on usability criteria. A

key concept for all mobile applications based upon

location is the 'cognitive map' of the environment

held in mental image form by the user. Studies have

shown that cognitive maps have asymmetries

(distances between points are different in different

directions), that they are resolution-dependent (the

greater the density of information the greater the

distance between two points) and that they are

alignment-dependent (distances are influenced by

geographical orientation) (Tversky, 1981). Thus,

calibration of application space concepts against the

cognitive frame(s) of reference (FORs) is vital to

usability. Reference frames can be divided into the

egocentric (from the perspective of the perceiver)

and the allocentric (from the perspective of some

external framework) (Klatzky, 1998). End-users can

have multiple egocentric and allocentric FORs and

can transform between them without information

loss (Miller and Allen, 2001). Scale by contrast is a

framing control that selects and makes salient

entities and relationships at a level of information

content that the perceiver can cognitively

manipulate. Whereas an observer establishes a

‘viewing scale’ dynamically, digital geographic

representations must be drawn from a set of

preconceived map scales. Inevitably, the cognitive

fit with the current activity may not always be

acceptable (Raper, 2000).

Alongside the user's cognitive abilities,

understanding the spatio-temporal knowledge users

have is vital for developing applications. This

knowledge may be acquired through landmark

recognition, path integration or scene recall, but will

generally progress from declarative (landmark lists),

to procedural (rules to integrate landmarks) to

configurational knowledge (landmarks and their

inter-relations) (Siegel and White, 1975). There are

quite significant differences between these modes of

knowledge, requiring distinct approaches to

application support on a mobile device. Hence,

research has been carried out on landmark saliency

Liarokapis F., Mountain D., Papakonstantinou S., Brujic-Okretic V. and Raper J. (2006).

MIXED REALITY FOR EXPLORING URBAN ENVIRONMENTS.

In Proceedings of the First International Conference on Computer Graphics Theory and Applications, pages 208-215

DOI: 10.5220/0001356702080215

Copyright © SciTePress

(Michon and Denis, 2001) and on the process of

self-localisation (Sholl, 2002) in the context of

navigation applications.

This work demonstrates that the cognitive value

of landmarks is in preparation for the unfamiliar and

that self-localisation proceeds by the establishment

of rotations and translations of body coordinates

with landmarks. Research has also been carried out

on spatial language for direction-giving, showing,

for example, that path prepositions such as 'along'

and 'past' are distance-dependent (Kray, 2001). These

findings suggest that mobile applications need to

help users add to their knowledge and use it in real

navigation activities. Holl et al. (Holl et al., 2003)

illustrate the achievability of this aim by

demonstrating that users who pre-trained for a new

routing task in a VR environment made fewer errors

than those who did not. This finding encourages us

to develop navigational wayfinding and commentary

support on mobile devices accessible to the

customer.

The objectives of this research include a number

of urban navigation issues ranging from mobile VR

to mobile AR. The rest of the paper is structured as

follows. In section 2, we present background work

while in section 3 we describe the architecture of our

mobile solution and explain briefly the major

components. Sections 4 and 5 present the most

significant design issues faced when building the VR

interface, together with the evaluation of some initial

results. In section 6, we present the initial results of

the development towards a mobile AR interface that

can be used as a tool to provide location and

orientation-based services to the user. Finally, we

present our future plans.

2 BACKGROUND WORK

There are a few location-based systems that have

proposed ways of navigating in urban environments.

Campus Aware (Burrell, et al., 2002) demonstrated a

location-sensitive college campus tour guide which

allows users to annotate physical spaces with text

notes. However, user-studies showed that navigation

was not well supported. The ActiveCampus project

(Griswold et al., 2004) tests whether wearable

technology can be used to enhance the classroom

and campus experience for a college student. The

project also illustrates ActiveCampus Explorer,

which provides location aware applications that

could be used for navigation. The latest application

is EZ NaviWalk, a pedestrian navigation service

launched in Japan in October 2003 by KDDI (DTI,

2004) but in terms of visualisation it offers the

‘standard’ 2D map.

In contrast, many VR prototypes have been

designed for geo-visualisation and navigation. A

good overview of the potentials and challenges for

geographic visualisation has been previously

documented (MacEachren et al., 1999). LAMP3D is

a system for the location-aware presentation of

VRML content on mobile devices applied in tourist

mobile guides (Burigat and Chittaro, 2005).

Although the system provides tourists with a 3D

visualization of the environment they are exploring,

synchronized with the physical world through the

use of GPS data, there is no orientation information.

For route guidance applications 3D City models

have been demonstrated for mobile navigation

(Kulju and Kaasinen, 2002) but studies pointed out

the need for detailed modelling of the environment

and additional route information. To enhance the

visualisation and navigation, a combination of a 3D

representation of a map with a digital map was

previously presented in a single interface

(Rakkolainen and Vainio, 2001, Laakso et al., 2003).

In terms of augmented reality navigation a few

experimental systems have been presented. One of

the first wearable navigation systems is MARS

(Mobile Augmented Reality Systems) (Feiner et al,

1997) which aimed at exploring the synergy of two

promising fields of user interface research:

AR and mobile computing. Thomas et al. (Thomas

et al., 1998) proposed the use of a wearable AR

system with a GPS and a digital compass as a new

way of navigating in the environment. Moreover,

the ANTS project (Romão et al., 2004) proposes an

AR technological infrastructure that can be used to

explore physical and natural structures, namely for

environmental management purposes. Finally,

Reitmayr and Schmalstieg (2004)

demonstrated the use of AR for collaborative

navigation and information browsing tasks in an

urban environment.

Although the presented experimental systems

focus on some of the issues involved in navigation,

they cannot deliver a functional system that

combines accessible interfaces, consumer devices

and web metaphors. The motivation of this research

is to address the above issues. In addition, we

compare potential solutions for detecting the user

location and orientation in order to provide

appropriate urban navigation applications and

services. To achieve this we have designed a mobile

platform based on both VR and AR interfaces. To

understand in depth all the issues that relate to

location and orientation-based services, first a VR

interface was designed and tested as a navigation

tool. We then incorporated the user feedback

into an experimental AR interface. Both prototypes

require the precise calculation of the user position

and orientation for registration. The VR interface

relies on a combination of GPS and digital compass

while the AR interface is only dependent on

detecting features belonging to the environment.

3 MOBILE PLATFORM

One of the motivations for this research was to

investigate the technical issues behind virtual and

augmented navigation. At present, we are modelling

the 3D scene around the user and presenting it on

both the VR and AR interfaces. A partner on the

project, the GeoInformation Group, Cambridge

(GIG), provides a unique and comprehensive set of

data, in the form of the building height/type and

footprint data, for the entire City of London. The

urban 3D models are extruded up from Mastermap

building footprints to heights, held in the GIG City

heights database for the test sites in London, and

textures are manually captured using a digital

camera with five mega pixel accuracy. The project

has also access to the unique building height/type

dataset developed for London by GIG and in use

with a range of public and private organisations, e.g.

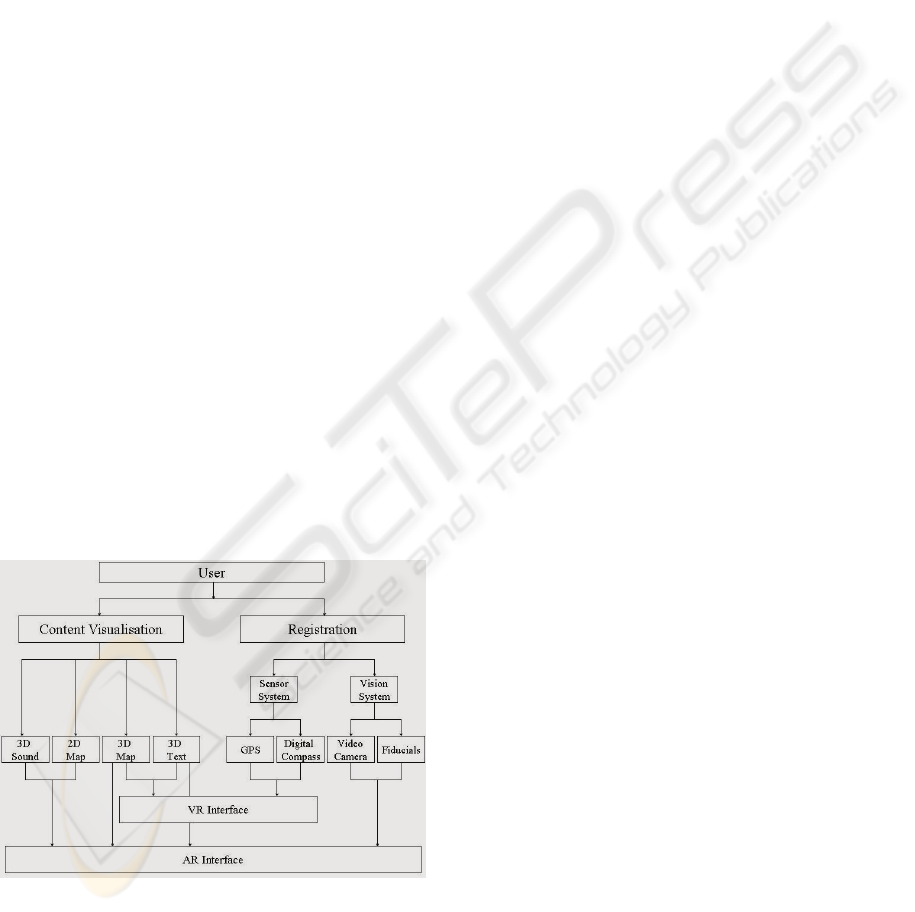

Greater London Authority. Based on this, a generic

mobile platform for urban navigation applications

and services is prototyped and the architecture is

presented in Figure 1.

Figure 1: Architecture of our mobile interfaces.
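The extrusion of building footprints to stored heights can be illustrated with a short sketch. This is a minimal example under our own assumptions, not the modelling pipeline used in the project: the function name `extrude_footprint`, the flat ground plane at z = 0 and the index-based face layout are all hypothetical.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def extrude_footprint(footprint: List[Point2D], height: float):
    """Extrude a 2D footprint polygon into a simple prism (hypothetical helper).

    Returns (vertices, walls, roof), where walls are quads and roof is a
    polygon, both expressed as tuples of indices into the vertex list.
    """
    if len(footprint) < 3:
        raise ValueError("a footprint needs at least three corners")
    if height <= 0:
        raise ValueError("height must be positive")

    n = len(footprint)
    base: List[Point3D] = [(x, y, 0.0) for x, y in footprint]   # ground ring
    top: List[Point3D] = [(x, y, height) for x, y in footprint]  # roof ring
    vertices = base + top

    # One wall quad per footprint edge: two base corners, then the two
    # roof corners directly above them.
    walls = [(i, (i + 1) % n, n + (i + 1) % n, n + i) for i in range(n)]
    roof = tuple(range(n, 2 * n))
    return vertices, walls, roof
```

For a square footprint of four corners this yields eight vertices, four wall quads and one roof polygon; textures would then be mapped onto the wall quads.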

Figure 1 illustrates how a user can navigate, using

intelligent data retrieval, inside an urban

environment, and what types of digital information,

appropriately visualised, can be provided in the form

of a service. The information visualisation techniques

adopted depend on the digital content used during

navigation. Registration, in this context, includes the

two most significant pieces of information for

calculating the user’s location and orientation: a

sensor system and a vision system which are used as

input to the VR and AR interfaces. The VR interface

uses GPS and digital compass information for

locating and orientating the user. In terms of the

content used for visualisation, the VR interface can

present only 3D maps and textual information. On

the other hand, the AR interface uses the calculated

user’s position and orientation coordinates from the

vision methods to superimpose 2D and 3D maps and

text, on the ‘spatially aware’ framework.
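For the sensor-based registration, a GPS fix has to be expressed in the metric coordinates of the 3D model. The following is a minimal sketch, assuming a small test site where an equirectangular approximation is adequate; the function name `gps_to_local`, the spherical Earth radius and the choice of origin are illustrative assumptions and not the projection used in the prototype.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean spherical radius (assumption)


def gps_to_local(lat_deg: float, lon_deg: float,
                 origin_lat_deg: float, origin_lon_deg: float):
    """Convert a GPS fix to (east, north) offsets in metres from an origin.

    Uses an equirectangular approximation, which is only reasonable over
    short distances such as a campus-sized test area.
    """
    lat0 = math.radians(origin_lat_deg)
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    east = EARTH_RADIUS_M * d_lon * math.cos(lat0)
    north = EARTH_RADIUS_M * d_lat
    return east, north
```

The digital compass heading can then be combined with these offsets to orient the virtual camera.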

In terms of the software infrastructure used in this

project, both interfaces are implemented based on

Microsoft Visual C++ and Microsoft Foundation

Classes (MFC). The graphics libraries used are

based on OpenGL and VRML. Video operations are

supported by the DirectX SDK (DirectShow

libraries). Originally the mobile software prototype

was tested on a mobile hardware prototype

consisting of a Toshiba laptop computer (equipped

with 2.0 GHz M-processor, 1GB RAM and a

GeForce FXGo5200 graphics card), a Pharos GPS

and a Logitech web-camera. Currently, we are in the

process of porting the mobile platform to Personal

Digital Assistants (PDAs). The final prototype will

build on Mastermap data, stored in GML, with

simple shading applied to the building outlines. The

geographical models will acquire both the

orientation information and the location through a

client API on the mobile device, and both will be sent

to the server in a packet-based message

transmitted over the network in use. The server will

build and render the scene graph associated with the

location selected and return it to the client for

portrayal.
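The client-to-server exchange described above can be sketched as a small message type. The paper does not specify the message format, so everything here is an assumption for illustration: the class name `PoseMessage`, its field names and the JSON encoding are hypothetical.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class PoseMessage:
    """Hypothetical payload carrying the user's location and orientation."""
    latitude_deg: float
    longitude_deg: float
    heading_deg: float

    def encode(self) -> bytes:
        # Serialise the pose as UTF-8 JSON for transmission in one packet.
        return json.dumps(asdict(self)).encode("utf-8")

    @staticmethod
    def decode(payload: bytes) -> "PoseMessage":
        # Rebuild the pose on the server side from the received bytes.
        return PoseMessage(**json.loads(payload.decode("utf-8")))
```

On receipt, a server would decode the pose and select the part of the scene graph associated with that location.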

4 VR NAVIGATION

Navigation within our virtual environment (the

spatial 3D map) can take place in two modes:

automatic and manual. In the automatic mode, GPS

automatically feeds and updates the spatial 3D map

with respect to the user’s position in the real space.

This mode is designed for intuitive navigation. In the

manual mode, the control is fully with the user, and

it was designed to provide alternative ways of

navigating into areas where we cannot obtain a GPS

signal. Also users might want to stop and observe

GRAPP 2006 - COMPUTER GRAPHICS THEORY AND APPLICATIONS

parts of the environment – in which case control is

left in their hands.

During navigation, there are minor modifications

obtained continuously from the GPS to improve the

accuracy, which results in minor adjustments in the

camera position information. This creates a feeling

of instability in the user, which can be avoided by

simply restricting minor positional adjustments. The

immersion provided by GPS navigation is

considered as pseudo-egocentric because

fundamentally the camera is positioned at a height

which does not represent a realistic scenario. If,

however, the user switches to manual navigation,

any perspective can be obtained, which is very

helpful for decision-making purposes. While in a

manual mode, any model can be explored and

analysed, therefore additional enhancements of the

graphical representation are of vital importance. An

illustrative screenshot of a user testing our prototype

in automatic mode is shown in Figure 2.

Figure 2: User’s view during VR navigation.
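Restricting minor positional adjustments, as mentioned above, can be sketched as a dead-band filter that ignores GPS updates smaller than a threshold. This is one possible approach under our own assumptions; the class name `PositionFilter` and the 2 m default threshold are hypothetical and not taken from the prototype.

```python
import math


class PositionFilter:
    """Suppress small GPS corrections so the virtual camera does not jitter."""

    def __init__(self, threshold_m: float = 2.0):
        self.threshold_m = threshold_m  # minimum movement that is accepted
        self._last = None               # last accepted (east, north) position

    def update(self, east: float, north: float):
        """Return the camera position to use after a new GPS reading."""
        if self._last is None:
            self._last = (east, north)
            return self._last
        dx = east - self._last[0]
        dy = north - self._last[1]
        # Accept the new fix only if the user moved at least the threshold.
        if math.hypot(dx, dy) >= self.threshold_m:
            self._last = (east, north)
        return self._last
```

A larger threshold gives a steadier view at the cost of a camera that lags further behind the user.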

One of the problems that quickly surfaced during

the system evaluation is the viewing angle during

navigation which can make it difficult to position the

user. After a series of trial and error exercises, an

altitude of fifty meters over the surface was finally

adopted as adequate. In this way, the user can

visualise a broader area plus the tops of the

buildings, and acquire richer knowledge about their

location, in the VR environment. The height

information is hard-coded when the navigation is in

the automatic mode because user testing (section 5)

showed that it can be extremely useful in cases

where a user tries to navigate between tall buildings,

having low visibility.
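The fixed camera altitude in automatic mode can be illustrated as follows. This is a minimal sketch: the fifty-metre altitude comes from the text, while the function name `camera_pose`, the downward tilt parameter and the east/north/up axis convention are our assumptions.

```python
import math


def camera_pose(east: float, north: float, heading_deg: float,
                altitude_m: float = 50.0, tilt_deg: float = 45.0):
    """Return (eye, forward) for a camera above the user's position.

    heading_deg is a compass heading, clockwise from north.
    tilt_deg is the downward tilt of the view direction (assumed value).
    """
    h = math.radians(heading_deg)
    t = math.radians(tilt_deg)
    eye = (east, north, altitude_m)
    # Unit view direction in (east, north, up) coordinates.
    forward = (math.sin(h) * math.cos(t),
               math.cos(h) * math.cos(t),
               -math.sin(t))
    return eye, forward
```

The resulting eye point and view direction could be passed to a look-at style camera call in the rendering library.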

5 PRELIMINARY EVALUATION

The aims of the evaluation of the VR prototype

included assessment of the user experience with

particular focus on interaction via movement,

identification of specific usability issues with this

type of interaction, and stimulation of suggestions

regarding future directions for research and

development. A ‘thinking aloud’ evaluation strategy

was employed (Dix et al, 2004); this form of

observation involves participants talking through the

actions they are performing, and what they believe

to be happening, whilst interacting with the system.

This qualitative form of evaluation is highly

appropriate for small numbers of participants testing

prototype software: Dix et al (Dix et al, 2004)

suggest that the majority of usability problems can

be discovered from testing in this way.

The method used for the evaluation of our VR

prototype was based on the Black Box technique

which offers the advantage that it does not require

the user to hold any low-level information about the

design and implementation of the system. The user-

testing took place at City University campus which

includes building structures similar to the

surrounding area with eight users in total (testing

each one individually). For each test, the user

followed a predetermined path represented by a

highlighted line. Before the start of the walk, the

GPS receiver was turned on and flow of data was

guaranteed between it and the ‘Registration’ entity

of the system. The navigational attributes that were

qualitatively measured include: user perspective,

movement with device and decision points.

5.1 User Perspective

The main point of investigation was to test whether

the user can understand where they are located in the

VR scene, in correspondence to the real world

position. The initial orientation and level of

immersion were also evaluated after

minimum interaction with the application and

understanding of the available options. The

feedback obtained from the users

mainly concerned four topics: level-of-detail (LOD),

user-perspective, orientation and

field-of-view (FOV).

Most of the participants agreed that the LOD is

not sufficiently high for a prototype navigational

application. Some concluded that texture based

models would be a lot more appropriate but others

expressed the opinion that more abstract, succinct

annotations would help. Both groups of answers can

fit in the same context, if all interactions could be

visualised from more than one perspective. A

suggested improvement was to add geo-bookmarks

that would embed information about the nature of

the structures or even the real world functionality.

As far as the ‘user-perspective’ attribute is

concerned, each user expressed a different optimal

solution. Some concluded that more than one

perspective is required to fully comprehend their

position and orientation. Both perspectives, the

egocentric and the allocentric, are useful during

navigation for different reasons (Liarokapis et al.,

2005) and under different circumstances. During the

initial registration, it would be more appropriate to

view the model from an allocentric point of view

(which would cover a larger area) and by

minimising the LOD just to include annotations over

buildings and roads. This made it easier to gain some

level of immersion with the system without being

directly exposed to particular information such as

the structure of the buildings. An egocentric

perspective is considered productive only when the

user is in constant movement. When in movement,

the VR interface retrieves many updates and the

number of decision points is increased. Further

studies should be made on how the system would

assist an everyday user, but a variation on the user

perspective is considered useful in most cases.

The orientation mechanism provided by the

application consists of two parts. The first maintains

the user’s previous orientation whilst the second

restores the camera to the predefined orientation.

Some users preferred a tilt angle that points towards

the ground over oblique viewing angles.

Furthermore, all participants appreciated the user-

maintained FOV. They agreed that it should be wide

enough to include as much information, on the

screen, as possible. They added that the primary

viewing angle should include recognisable

landmarks that would help the user comprehend the

initial positioning. One mentioned that the

orientation should stay constant between consecutive

decision points, and hence should not be gesture-

based. Most users agreed that the functionality of the

VR interface provides a viewing angle wide enough

to recognise some of the surroundings even

when positioned between groups of buildings with

low detail level.

5.2 Movement with Device

The purpose of this stage was to explore how

respondents interpreted their interaction with the

device, whilst moving. The main characteristics

include the large number of updates, as well as the

change of direction followed by the user. These are

mainly concerned with the issues of making the

navigation easier, the use of the most appropriate

perspective, and the accuracy of the underlying

system as well as the performance issues that drive

the application. Some participants mentioned the

lack of accurate direction waypoints that would

assist route tracking. A potential solution is to

consider the adoption of a user-focused FOV during

navigation using a simple line on the surface of the

model. However, this was considered partially

inadequate because the user expects more guidance

when reaching a decision point. Some participants

suggested using arrows on top of the route line

which would be either visible for the whole duration

of the movement or when a decision point was

reached.

Moreover, it was suggested that the

route line should be more distinct, minimising the

probability of missing it while moving. Some

expressed the opinion that the addition of

recognisable landmarks would provide a clearer

cognitive link between the VR environment and the

real world scene. However, the outcomes of this

method are useful only for registering the users in

the scene and not for navigation purposes. A couple

of participants included in their answers that the

performance of the system was very satisfactory.

The latency of the system is equal to the

latency of the hardware receiver, meaning that the

performance of the application is solely dependent

on the quality of the operating hardware. The adaptation

to a mobile operating system (i.e. PocketPC) would

significantly increase the latency of the system.

Moreover, opinions about the accuracy of the

system differed. One of the respondents was convinced

that the accuracy provided by the GPS receiver was

within acceptable boundaries and consistent with the

GPS specifications, and that the positions reported

in urban canyons corresponded

well to reality. A

second test subject noted that the occlusion

problem was caused by GPS inaccuracy,

underlining that when the GPS position was

not accurate enough, the likelihood of missing the route

line or any developed direction system increased.

Both opinions are equally valid and highlight

the need for additional feedback.

5.3 Decision Points

The last stage is concerned with the decision points

and the ability of the user to continue the interaction

with the system when they reach them. A brief

analysis of the users’ answers identifies the

current disadvantages as well as proposed solutions.

As described previously, the user has the feeling of

full freedom to move in any direction, without being

restricted by any visualisation limitations of the

computer-generated environment. Nonetheless, this

freedom may produce the exact opposite result.

The user may feel overwhelmed by the numerous

options available and be confused about what

action to take next. At this point, we have to

take into consideration that most users do not have

relevant experience in 3D navigational systems and that,

after spending some time understanding the

application functionality, they would enhance their

ability to move in the VR environment. To assess

user responses more effectively we plan to perform

more testing in the future.

Some users commented that when a decision

point or an area close to it is reached, the application

should be able to manipulate their perspective. This

should help resolve more information about the

current position as well as support the future

decision making process. Another interesting point

is that under ordinary circumstances, users should

follow the predefined route. Nevertheless, in

everyday situations the user may want to change

route, in response to a new external requirement.

Some of these requirements would be partially

fulfilled if the user could manually add geo-bookmarks

in the VR environment that would

actually represent points in space with

supplementary personal context. Another proposed

solution is to include avatars which would depict the

actual position, orientation and simulation of the real

situation. One participant suggested that a compass

object on the screen would be of great assistance for

navigational purposes. This suggestion is very

intriguing because it would help solve the occlusion

problem, by pointing towards the final destination or

waypoint. Besides, the adjustment of perspective

would not be necessary because, in addition to the

predefined route line, the user may become capable

of trusting a more abstract mechanism.

6 AR NAVIGATION

The AR interface is the alternative way of

navigating in the urban environment using mobile

systems. Unlike the VR interface which uses the

hardware sensor solution (a GPS component and a

digital compass), the AR interface uses a camera

(with 1.3 megapixels) and computer vision

techniques to calculate position and orientation.

Based on the findings of the previous section and

previously developed prototypes (Liarokapis, 2005,

Liarokapis et al., 2005), a high-level AR interface

has been designed for outdoor use. The major

difference with other existing AR interfaces, such as

the ones described in (Feiner et al, 1997, Thomas et

al., 1998, Reitmayr and Schmalstieg, 2004, Romão

et al., 2004), is that our approach allows for the

combination of four different types of navigational

information: 3D maps, 2D maps, text and sound. In

addition, two different modes of registration have

been designed and experimented upon, based upon

fiducial and feature recognition. The purpose of this

was to understand two of the most important aspects

of urban navigation: wayfinding and commentary. In

the fiducial recognition mode, the outdoor

environment needs to be populated with fiducials

prior to the navigational experience. Fiducials are

placed in points-of-interest (POIs) of the

environment, such as corners of the buildings, ends

of streets etc, and play a significant role in the

decision making process. In our current

implementation we have adopted ARToolKit’s

template matching algorithm (Kato and Billinghurst,

1999) for detecting marker cards and we are trying to

extend it for natural feature detection. Features that

we currently detect can come in different shapes,

such as square, rectangular, parallelogram,

trapezium and rhombus (Liarokapis, 2005).

Figure 3: AR navigation using fiducial recognition.

Figure 3 illustrates how virtual navigational tools

(a 3D arrow and a 2D map) can be superimposed on

one of the predefined decision points to aid

navigation. However, user-studies for tour guide

systems showed that visual information could

sometimes distract the user (Burrell, et al., 2002)

while audio information could be used to decrease

the distraction in tour guide systems (Woodruff et

al., 2001). With this in mind, we have introduced

spatially referenced sound into the interface, to be

used simultaneously with the visual information. For

each POI of our test case scenario, a pre-recorded

sound file is assigned to the corresponding fiducial.

As the user approaches one, commentary

information can be spatially perceived; the closer the

user the louder the volume of the commentary.

Alternatively, in the feature recognition mode, the user

‘searches’ for natural features of the real

environment to serve as ‘fiducial points’ and POIs

respectively. Distinctive natural features such as door

entrances and windows have been experimentally tested

to see whether they can be used as ‘natural markers’.

Figure 4 shows the display presented to a user navigating

City University’s campus, who acquires

location and orientation information using ‘natural

markers’.

Figure 4: Feature recognition (a) using window-based

tracking (b) using door-based tracking.
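The distance-dependent commentary volume described for the fiducial mode can be sketched as a simple attenuation function. The text only states that the commentary gets louder as the user approaches, so the linear falloff, the function name `commentary_volume` and the 10 m audible range are assumptions for illustration.

```python
def commentary_volume(distance_m: float, max_range_m: float = 10.0,
                      min_volume: float = 0.0) -> float:
    """Map the distance to a POI onto a playback volume in [min_volume, 1].

    Volume is 1.0 at the POI and falls off linearly to min_volume at
    max_range_m (assumed falloff model); beyond that it stays at min_volume.
    """
    if max_range_m <= 0:
        raise ValueError("max_range_m must be positive")
    d = max(0.0, distance_m)
    if d >= max_range_m:
        return min_volume
    return 1.0 - (1.0 - min_volume) * (d / max_range_m)
```

The distance itself would come from the tracking result, for example the estimated camera-to-marker translation.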

As soon as the user turns the camera towards

these predefined natural markers, audio-visual

information (3D arrows, textual and auditory

information) can be superimposed (Figure 4) on the

real-scene imagery, thus satisfying some of the

requirements identified in section 5. Depending on

the end-user’s preferences, a specific type of digital

information may be selected to be superimposed.

For example, for visually impaired people it may be

preferred to use audio information rather than visual,

or a combination of the two (Liarokapis, 2005). A

comparison between the fiducial and the feature

recognition modes is shown in Table 1.

Table 1: Fiducial vs feature recognition mode.

Recognition Mode   Range       Error   Robustness
Fiducial           0.5 ~ 2 m   Low     High
Feature            2 ~ 10 m    High    Low

In the feature recognition mode, the advantage is

that the range of operation is much greater, thus it

can be applied better when wayfinding is the focus

of the navigation. However, the natural feature

tracking algorithm, which is used in this scenario,

does require improved accuracy of the position and

orientation information, as it currently works with a

high error. In contrast, the fiducial recognition mode

offers the advantage of very low error during the

tracking process (i.e. detecting fiducial points).

However, the limited range of operation makes it

more appropriate for commentary navigation modes

rather than for wayfinding. Nevertheless, the

combination of fiducial and feature recognition

modes allows users to benefit from both wayfinding and

commentary navigation in urban environments.
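Combining the two modes could be sketched as a selection rule driven by the operating ranges reported in Table 1. The ranges are taken from the table; the function name `select_recognition_mode` and the rule of switching purely on estimated distance are our assumptions, not a description of the implemented system.

```python
from typing import Optional

# Operating ranges in metres, as listed in Table 1.
FIDUCIAL_RANGE_M = (0.5, 2.0)
FEATURE_RANGE_M = (2.0, 10.0)


def select_recognition_mode(distance_m: float) -> Optional[str]:
    """Pick a registration mode from the estimated distance to the target.

    Returns "fiducial" for close-range commentary, "feature" for
    longer-range wayfinding, or None when neither mode covers the distance.
    """
    if FIDUCIAL_RANGE_M[0] <= distance_m <= FIDUCIAL_RANGE_M[1]:
        return "fiducial"
    if FEATURE_RANGE_M[0] < distance_m <= FEATURE_RANGE_M[1]:
        return "feature"
    return None
```

At the shared 2 m boundary this sketch prefers the fiducial mode because of its lower error.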

7 CONCLUSIONS

Our prototype system illustrates two different ways

of providing location-based services for navigation,

through continuous use of position and orientation

information. Users can navigate in urban

environments using either a mobile VR or a mobile

AR interface. Each system calculates the user’s

position and orientation using a different method.

The VR interface relies on a combination of GPS

and digital compass data whereas the AR interface is

only dependent on detecting features of the

immediate environment. In terms of information

visualisation, the VR interface can only present 3D

maps and textual information while the AR interface

can, in addition, handle other related geographical

information, such as digitised maps and spatial

auditory information. Work on both modes and

interfaces is in progress and we also consider a

hybrid approach, which aims to find a balance

between the use of hardware sensors (GPS and

digital compass) and software techniques (computer

vision) to achieve the best registration results. In

parallel, we are designing a spatial database to store

our geo-referenced urban data, which will feed the

client-side interfaces as well as routing algorithms,

which we are developing to provide more services to

mobile users. The next step in the project will be to

port our platform to a PDA, which will then be

followed by a thorough evaluation process, using

both qualitative and quantitative methods.

ACKNOWLEDGMENTS

The work presented in this paper is conducted within

the LOCUS project, funded by EPSRC through the

Pinpoint Faraday Partnership. We would also like to

thank our partner on the project, GeoInformation

Group, Cambridge, for making the entire database of

the City of London buildings available to the project.
