ADMIRE FRAMEWORK: DISTRIBUTED DATA MINING ON DATA GRID PLATFORMS

Nhien-An Le-Khac, Tahar Kechadi and Joe Carthy

School of Computer Science & Informatics, University College Dublin

Belfield, Dublin 4, Ireland

Keywords:

Data mining, Grid computing, distributed systems, heterogeneous datasets.

Abstract:

In this paper, we present the ADMIRE architecture, a new framework for developing novel and innovative data mining techniques to deal with very large, distributed, heterogeneous datasets in both commercial and academic applications. We detail the main ADMIRE components and the interfaces that allow users to efficiently develop and implement their data mining applications on Grid platforms such as the Globus Toolkit and DGET.

1 INTRODUCTION

Distributed data mining (DDM) techniques have become necessary for large, multi-scenario datasets that require heterogeneous and distributed resources. There are two scenarios of dataset distribution, homogeneous and heterogeneous; the differences between them are presented in (Kargupta,2002). Currently, most existing research on DDM is dedicated to the first scenario, and there is very little research in the literature on the second.

Furthermore, there is today a deluge of data coming not only from science but also from industry and commerce. The massive amounts of data being collected are often heterogeneous, geographically distributed and owned by different organisations. This raises many challenges, concerning not only DDM techniques but also the infrastructure needed for efficient and fast processing, reliability, quality of service, integration, and extraction of knowledge from this mass of data.

To cope with large, geographically distributed, high-dimensional, multi-owner and heterogeneous datasets, the Grid (Foster,2001) can be used as a data storage and computing platform that provides effective computational support for distributed data mining applications, because the Grid is an integrated infrastructure that supports the sharing and coordinated use of resources in dynamic, heterogeneous, distributed environments. Currently, only a few projects have started using the Grid as a platform for distributed data mining (Cannataro’a,2002) (Brezany,2003). However, they use only some basic Grid services, and most of them are based on the Globus Toolkit (Globus Tool Kit,website).

In this paper, we present the architecture of ADMIRE, a new framework based on Grid infrastructure for implementing DDM techniques. This framework is based on a Grid system dedicated to data issues. Portability is key to this framework: it allows users to transparently exploit not only the standard Grid architecture (Open Grid Services Architecture/Web Services Resource Framework, OGSA/WSRF) but also P2P/Grid architectures (DGET (Kechadi,2005)) for their data mining applications. Moreover, the framework makes the mining, integration and processing phases more dynamic and autonomous. The rest of this paper is organised as follows: Section 2 covers background and related projects; Section 3 discusses the ADMIRE architecture; Section 4 presents our preliminary evaluation of this architecture; finally, Section 5 concludes.

2 BACKGROUND

In this section, we first present the Grid architectures on which ADMIRE is based: OGSA/WSRF (OGSA,website) and DGET (Data Grid Environment and Tools) (Kechadi,2005). We then present some common architectures for DDM systems, client/server-based and agent-based, as well as Grid data mining.

Le-Khac N., Kechadi T. and Carthy J. (2006).

ADMIRE FRAMEWORK: DISTRIBUTED DATA MINING ON DATA GRID PLATFORMS.

In Proceedings of the First International Conference on Software and Data Technologies, pages 67-72

DOI: 10.5220/0001319100670072

Copyright © SciTePress

2.1 OGSA/WSRF

OGSA represents a new generation of Grid technologies. It has become a standard for building service-oriented Grid applications based on Web services.

Web services

Traditional technologies for distributed computing, such as sockets, Remote Procedure Call, Java RMI and CORBA (CORBA,website), do not rely on common open Web standards, which makes them difficult to adopt across heterogeneous environments. Web services, by contrast, are built on open standards: this XML-based middleware infrastructure can build and integrate applications in heterogeneous environments, independently of platforms, programming languages and locations. Web services rely on SOAP (originally the Simple Object Access Protocol), a communication protocol with which clients and servers exchange messages in XML format over an underlying protocol, typically HTTP. Web services therefore provide a promising platform for Grid systems.
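To make the XML message exchange concrete, here is a minimal sketch, assuming SOAP 1.1 and Python's standard `xml.etree.ElementTree`, of how a client might wrap an operation call in a SOAP envelope and how a server might unpack it. The operation `getDatasetInfo`, its parameter and the namespace `urn:example:admire` are hypothetical, and the HTTP transport step is omitted.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
APP_NS = "urn:example:admire"  # hypothetical application namespace


def build_request(operation: str, params: dict) -> bytes:
    """Client side: wrap an operation call and its parameters in a SOAP envelope."""
    ET.register_namespace("soap", SOAP_ENV)
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    call = ET.SubElement(body, f"{{{APP_NS}}}{operation}")
    for name, value in params.items():
        ET.SubElement(call, f"{{{APP_NS}}}{name}").text = str(value)
    return ET.tostring(envelope, encoding="utf-8")


def parse_request(payload: bytes) -> tuple:
    """Server side: recover the operation name and its parameters."""
    envelope = ET.fromstring(payload)
    call = envelope.find(f"{{{SOAP_ENV}}}Body")[0]  # first child of Body is the call

    def local(tag):
        return tag.split("}", 1)[1]  # strip the "{namespace}" prefix

    return local(call.tag), {local(child.tag): child.text for child in call}


message = build_request("getDatasetInfo", {"datasetId": "weather-2005"})
print(message.decode("utf-8"))
print(parse_request(message))  # ('getDatasetInfo', {'datasetId': 'weather-2005'})
```

Because both sides only agree on the XML envelope, the client and server could be written in different languages and run on different platforms.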

OGSA/WSRF

OGSA is an informational specification that defines a common, standard and open architecture for Grid-based applications and for building service-oriented Grid systems. It is developed by the Global Grid Forum, and its first release was in June 2004. OGSA is based on Web services and standardises almost all Grid services, such as job and resource management, communication and security. These services are divided into two categories: core services and platform services. The former include service creation, destruction, lifecycle management, service registration, discovery and notification. The latter deal with user authentication and authorisation, fault tolerance, job submission, monitoring and data access.

However, OGSA does not specify how these interfaces should be implemented. This is provided by the Open Grid Services Infrastructure (OGSI) (OGSI,website), the technical specification that defines how to implement the core Grid services of OGSA in the context of Web services; it specifies exactly what needs to be implemented to conform to OGSA. One disadvantage of OGSI is that it is too heavy, with everything in one specification, and it does not work well with existing Web service and XML tools. OGSI is currently being replaced by the Web Services Resource Framework (WSRF) (WSRF,website), a set of Web services specifications released in January 2004. WSRF is a standard for modelling stateful resources with Web services. Compared with OGSI, one advantage of WSRF is that it partitions the OGSI functionality into a set of specifications and works well with Web services tools. WSRF is now supported by Globus Toolkit 4 (Globus Tool Kit,website).
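The central WSRF idea, a stateless Web service front-end acting on stateful resources that each request addresses explicitly, can be sketched as follows. This is not the WSRF or Globus Toolkit 4 API: the classes `ResourceHome` and `MiningJobService` are hypothetical, the opaque key stands in for the resource identifier that WSRF carries in a WS-Addressing endpoint reference, and the method names only echo the WS-ResourceProperties `GetResourceProperty` and WS-ResourceLifetime `Destroy` operations.

```python
import uuid


class ResourceHome:
    """Hypothetical store of stateful resources, each addressed by an opaque key."""

    def __init__(self):
        self._resources = {}

    def create(self, **properties) -> str:
        key = str(uuid.uuid4())
        self._resources[key] = dict(properties)
        return key

    def get_resource_property(self, key: str, name: str):
        # Echoes WS-ResourceProperties GetResourceProperty.
        return self._resources[key][name]

    def set_resource_property(self, key: str, name: str, value) -> None:
        self._resources[key][name] = value

    def destroy(self, key: str) -> None:
        # Echoes WS-ResourceLifetime Destroy: the state disappears with the resource.
        del self._resources[key]

    def __contains__(self, key) -> bool:
        return key in self._resources


class MiningJobService:
    """Stateless front-end: every call names the resource it acts upon."""

    def __init__(self, home: ResourceHome):
        self.home = home

    def submit(self, dataset: str) -> str:
        return self.home.create(dataset=dataset, status="QUEUED")

    def start(self, key: str) -> None:
        self.home.set_resource_property(key, "status", "RUNNING")

    def status(self, key: str) -> str:
        return self.home.get_resource_property(key, "status")


home = ResourceHome()
service = MiningJobService(home)
key = service.submit("weather-2005")
print(service.status(key))  # QUEUED
service.start(key)
print(service.status(key))  # RUNNING
```

The service object itself keeps no per-job state, so any replica of it could serve the next request as long as it can reach the same resource store.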

2.2 DGET

DGET (Kechadi,2005) is a Grid platform dedicated to the manipulation and storage of large datasets. Its structure is based on a peer-to-peer communication system and an entity-based architecture. The principal features of DGET are: transport-independent communication, decentralised resource discovery, uniform resource interfaces, fine-grained security, minimal administration, self-organisation and an entity-based system. A DGET entity is the abstraction of any logical or physical object existing in the system. The DGET communication model has been designed to operate without any centralised server, as in peer-to-peer systems, in contrast to many centralised Grid systems. Moreover, DGET provides job migration, which some Grid platforms (Unicore, for example) do not support. DGET also uses a sophisticated security mechanism based on the Java security model.
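Decentralised resource discovery without a central server can take several forms, and DGET's own protocol is not detailed here. As an illustration only, the sketch below uses TTL-limited flooding over a peer overlay, a standard peer-to-peer technique swapped in for this example and not necessarily the one DGET implements. The overlay, peer names and `has_resource` predicate are hypothetical.

```python
from collections import deque


def flood_discover(neighbours, start, has_resource, ttl):
    """TTL-limited flooding: the query spreads peer to peer, each hop
    decrementing the remaining budget, and no central index is consulted.
    Returns the peers that hold the resource, in breadth-first order."""
    found = []
    visited = {start}
    queue = deque([(start, ttl)])
    while queue:
        peer, hops_left = queue.popleft()
        if has_resource(peer):
            found.append(peer)
        if hops_left == 0:
            continue  # budget exhausted: do not forward further
        for nxt in neighbours.get(peer, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, hops_left - 1))
    return found


overlay = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A"], "D": ["B", "E"], "E": ["D"]}
holders = {"D", "E"}
print(flood_discover(overlay, "A", holders.__contains__, ttl=2))  # ['D']
print(flood_discover(overlay, "A", holders.__contains__, ttl=3))  # ['D', 'E']
```

The TTL bounds message traffic at the cost of possibly missing distant holders, as the `ttl=2` call shows.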

2.3 DDM System Architectures

There are two common architectures for DDM systems: client-server-based and agent-based (Kargupta,2000). The first is a three-tier client-server architecture comprising a client, a data server and a computing server. The client allows users to create data mining tasks and to visualise data and models. The data server deals with data access control, task coordination and data management. The computing server executes data mining services. This architecture is simple, but it is neither dynamic nor autonomous, and it does not address scalability.

The second architecture aims to provide a scalable infrastructure for dynamic and autonomous mining over large distributed data. In this model, each data site has one or more agents that analyse local data and communicate with agents at other sites during mining. The locally mined knowledge is exchanged among data sites and integrated into globally coherent knowledge.
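A minimal sketch of the agent-based idea, assuming the "knowledge" exchanged is simply per-site item support counts: each site's agent mines its local transactions, and only these summaries are combined into a global result. The function names and data are hypothetical, and real systems exchange richer models; for single items this merge is exact, because support counts add up across horizontally partitioned sites.

```python
from collections import Counter


def local_agent(transactions):
    """Runs at one data site: count each item once per transaction."""
    counts = Counter()
    for transaction in transactions:
        counts.update(set(transaction))
    return counts, len(transactions)


def merge_site_knowledge(site_summaries, min_support):
    """Combine per-site summaries into globally frequent items.
    Only counts travel between sites; raw transactions stay local."""
    total = Counter()
    n = 0
    for counts, size in site_summaries:
        total.update(counts)
        n += size
    return {item for item, c in total.items() if c / n >= min_support}


site1 = [["milk", "bread"], ["milk"], ["eggs"]]
site2 = [["bread"], ["milk", "bread"]]
summaries = [local_agent(site1), local_agent(site2)]
print(sorted(merge_site_knowledge(summaries, min_support=0.5)))  # ['bread', 'milk']
```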

Today, new DDM projects aim to mine data in geographically distributed environments. They are based on Grid standards (e.g. OGSA) and platforms (e.g. the Globus Toolkit) in order to hide the complexity of heterogeneous data and lower-level details. Consequently, their architectures are increasingly sophisticated, both to integrate with Grid platforms and to supply a user-friendly interface for executing data mining tasks transparently. Our research also targets this kind of architecture.

ICSOFT 2006 - INTERNATIONAL CONFERENCE ON SOFTWARE AND DATA TECHNOLOGIES


2.4 Related Projects

This section presents the two most recent DDM projects for heterogeneous data and platforms: Knowledge Grid (Cannataro’a,2002) (Cannataro’b,2002) and GridMiner (Brezany,2003) (Brezany,2004). Both use the Globus Toolkit (Globus Tool Kit,website) as Grid middleware.

Knowledge Grid

Knowledge Grid (KG) is a framework for implementing distributed knowledge discovery that aims to deal with multi-owner, heterogeneous data. The project is developed by Cannataro et al. at the University "Magna Graecia" of Catanzaro, Italy. The KG architecture is composed of two layers: Core K-Grid and High-level K-Grid.

The first layer includes the Knowledge Directory Service (KDS) and the Resource Allocation and Execution Management Service (RAEMS). KDS manages metadata, such as data sources, data mining (DM) software and results of computations, which are saved in KDS repositories. There are three kinds of repository: the Knowledge Metadata Repository, which stores metadata describing data, software and tools, coded in XML; the Knowledge Base Repository, which stores all information about the knowledge discovered by parallel and distributed knowledge discovery (PDKD) computations; and the Knowledge Execution Plan Repository, which stores execution plans describing PDKD applications over the Grid. RAEMS attempts to map an execution plan onto the resources available on the Grid. This mapping must satisfy user, data and algorithm requirements as well as their constraints.

The High-level K-Grid layer supplies four service groups used to build and execute PDKD computations: the Data Access Service (DAS), the Tools and Algorithms Access Service (TAAS), the Execution Plan Management Service (EPMS) and the Results Presentation Service (RPS). The first is used for the search, selection, extraction, transformation and delivery of data. The second deals with the search, selection and download of DM tools and algorithms. The third is responsible for generating a set of different possible execution plans. The last generates, presents and visualises the PDKD results.

The advantage of the KG framework is that it supports distributed data analysis, knowledge discovery and knowledge management services by integrating and complementing data Grid services. However, this framework is not based on OGSA.

GridMiner

GridMiner is an infrastructure for distributed data mining and data integration in Grid environments, developed at the Institute for Software Science, University of Vienna. GridMiner is an OGSA-based data mining approach in which distributed heterogeneous data must be integrated and mediated using OGSA-DAI (DAI,website) before the data mining phase. Accordingly, its authors distinguish four data-source scenarios: single source, federated horizontal partitioning, federated vertical partitioning and federated heterogeneity.
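The difference between the horizontal and vertical partitioning scenarios can be shown in a few lines. In this hypothetical sketch, records are Python dicts with a shared key `id`: horizontal fragments hold whole records for a subset of rows, while vertical fragments hold a subset of attributes for every row and must be joined on the key before mining. That join is the kind of integration step GridMiner delegates to OGSA-DAI, which is not modelled here.

```python
def horizontal_partition(rows, site_of):
    """Each site holds complete records (all attributes) for a subset of rows."""
    parts = {}
    for row in rows:
        parts.setdefault(site_of(row), []).append(row)
    return parts


def vertical_partition(rows, key, column_groups):
    """Each site holds some attributes of every record, plus the shared key."""
    return [[{key: r[key], **{c: r[c] for c in cols}} for r in rows]
            for cols in column_groups]


def join_vertical(fragments, key):
    """Re-integrate vertical fragments by joining them on the shared key."""
    merged = {}
    for fragment in fragments:
        for r in fragment:
            merged.setdefault(r[key], {}).update(r)
    return list(merged.values())


rows = [
    {"id": 1, "age": 40, "region": "north", "income": 30},
    {"id": 2, "age": 25, "region": "south", "income": 20},
]
by_region = horizontal_partition(rows, lambda r: r["region"])
columns = vertical_partition(rows, "id", [["age"], ["region", "income"]])
print(sorted(by_region))                     # ['north', 'south']
print(join_vertical(columns, "id") == rows)  # True
```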

GridMiner consists of several elements: a Service Factory (GMSF) for creating and managing services; a Service Registry (GMSR) based on the standard OGSA registry service; a Data Mining Service (GMDMS) that provides a set of data mining and data analysis algorithms; a PreProcessing Service (GMPPS) for data cleaning, integration, handling of missing data, etc.; a Presentation Service (GMPRS); and an Orchestration Service (GMOrchS) for handling complex and long-running jobs.

The advantage of GridMiner is that it integrates data mining and Grid computing; moreover, it can take advantage of OGSA. However, it depends on the Globus Toolkit and on OGSA-DAI for controlling data mining and other activities across Grid platforms. In addition, distributed heterogeneous data must be integrated before the mining process, which makes this approach inappropriate for complex heterogeneous scenarios.

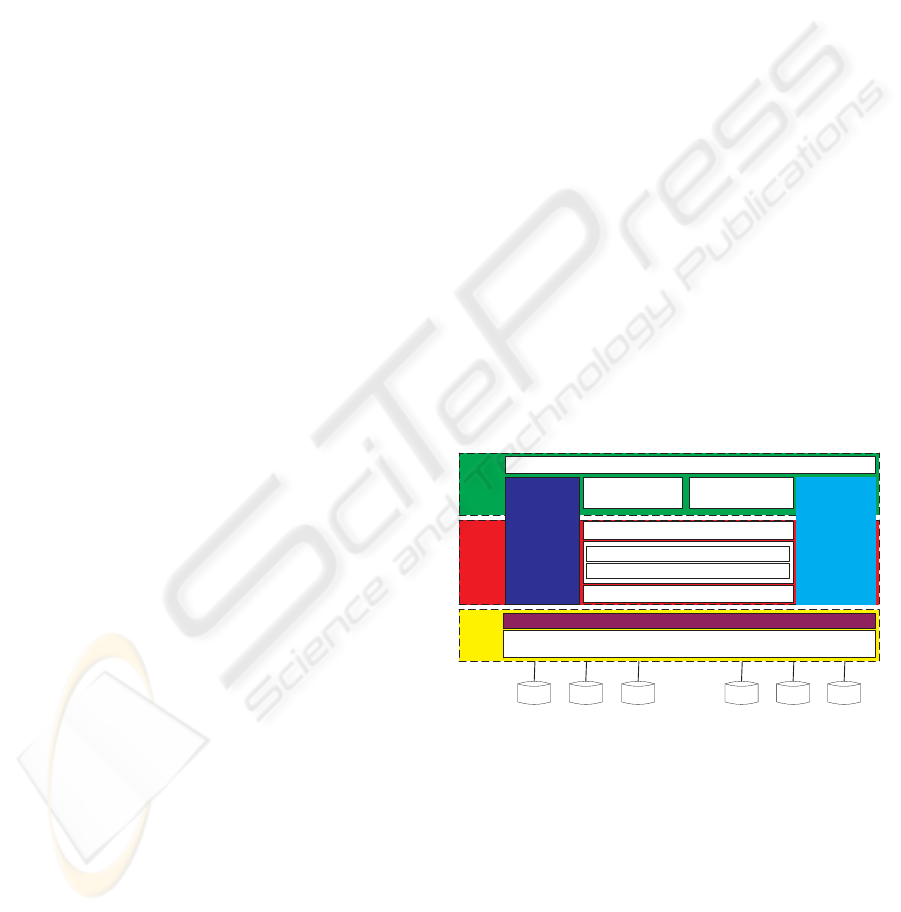

3 ADMIRE ARCHITECTURE

The ADMIRE architecture (Figure 1) includes three main layers: interface, core and data grid; the upper part of the data grid layer is the virtual data grid.

Figure 1: ADMIRE Software architecture. Layers: Interface (Graphic Interface (GUI), Interpretation, Evaluation); Core (Data Preprocessing, Distributed Data Mining with Integration/Coordination, Knowledge map); Data Grid (Virtual Data Grid over a Data Grid Service: DGET or OGSA/WSRF). Task management and Data/Resource management span the Interface and Core layers.

3.1 Interface

The upper part of this layer is a graphical user interface (GUI) that supports the development and execution of DDM applications. Using this interface, users build a DDM job consisting of one or more tasks. A task contains either a DM technique, such as classification, association rules, clustering or prediction, or another data operation, such as data pre-processing or data distribution. First, users choose a task; they then browse and select resources, represented by graphical objects, such as computing nodes, datasets, and the DM tools and algorithms corresponding to the chosen DM technique. These resources may be on the local site or distributed over different sites with heterogeneous platforms; nevertheless, ADMIRE lets users interact with them transparently at this level. The second step in building a DDM job is to establish links between the chosen tasks, i.e. their execution order. By checking this order, the ADMIRE system can detect independent tasks that can be executed concurrently. Users can also use this interface to publish new DM tools and algorithms.

This layer also allows users to visualise, represent and evaluate the results of a DDM application. The discovered knowledge is represented in various predefined forms, such as graphical or geometric ones. ADMIRE supports different visualisation techniques, each applicable to data of certain types (discrete, continuous, point, scalar or vector) and dimensions (1-D, 2-D, 3-D). It also supports interactive visualisation, which lets users view DDM results from different perspectives, such as layers and levels of detail, helping them understand these results better. Besides the GUI, this layer contains four modules: DDM task management, data/resource management, interpretation and evaluation.

The first module spans both the Interface and Core layers of ADMIRE. Its Interface-layer part is responsible for mapping user requirements, expressed through the selected DM tasks and their resources, to a corresponding execution schema of tasks. This part also checks the coherence between the DM tasks of the execution schema for a given DDM job. As mentioned above, the purpose of this check is to detect independent tasks, after which the schema is refined to optimise the execution. Once the execution schema has been verified, the module stores it in a task repository, from which the Core-layer part of the task management module reads it to execute the DDM job.
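Detecting independent tasks in an execution schema amounts to a topological ordering of the task graph. The sketch below is one way to do it, assuming tasks are named by strings and the user's links are (before, after) pairs. It uses Kahn's algorithm, grouped into stages so that tasks in the same stage do not depend on each other and could run concurrently, and it reports a cycle as an incoherent schema. The function and task names are hypothetical, not ADMIRE's actual interface.

```python
def execution_stages(tasks, links):
    """Group tasks into stages: every task in a stage depends only on tasks in
    earlier stages, so tasks within one stage can run concurrently.
    `links` holds (before, after) pairs. Raises ValueError on an incoherent schema."""
    preds = {t: set() for t in tasks}
    succs = {t: set() for t in tasks}
    for before, after in links:
        if before not in preds or after not in preds:
            raise ValueError(f"link refers to an unknown task: {(before, after)}")
        preds[after].add(before)
        succs[before].add(after)
    remaining = {t: len(p) for t, p in preds.items()}  # unfinished predecessors
    ready = sorted(t for t, n in remaining.items() if n == 0)
    stages = []
    while ready:
        stages.append(ready)
        nxt = []
        for t in ready:
            for s in succs[t]:
                remaining[s] -= 1
                if remaining[s] == 0:
                    nxt.append(s)
        ready = sorted(nxt)
    if sum(len(stage) for stage in stages) != len(tasks):
        raise ValueError("execution order contains a cycle")
    return stages


tasks = ["clean_A", "clean_B", "mine_A", "mine_B", "integrate"]
links = [("clean_A", "mine_A"), ("clean_B", "mine_B"),
         ("mine_A", "integrate"), ("mine_B", "integrate")]
print(execution_stages(tasks, links))
# [['clean_A', 'clean_B'], ['mine_A', 'mine_B'], ['integrate']]
```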

The second module allows users to browse the set of resources offered by ADMIRE for the resources they need. It manages the metadata of all published datasets and resources (computing nodes, DM algorithms and tools). Its Interface-layer part uses these metadata, stored in two repositories (a dataset repository and a resource repository), to supply an appropriate set of resources for the given DM task. The data/resource management module spans both the Interface and Core layers of ADMIRE, because modules in the Core layer also need to interact with data and resources to perform data mining and integration tasks. To hide the underlying Grid platform, the data/resource management module is built on a data grid middleware, e.g. DGET (Kechadi,2005).
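The metadata-driven selection can be pictured as a query over the two repositories. In this hypothetical sketch, each published dataset or resource carries a small metadata record, and the interface offers only the entries that declare support for the chosen DM task. The field names (`kind`, `tasks`, `site`) and the example entries are assumptions, not ADMIRE's actual metadata schema.

```python
from dataclasses import dataclass, field


@dataclass
class Entry:
    """Hypothetical metadata record for a published dataset or resource."""
    name: str
    kind: str                                # "dataset", "node", "algorithm" or "tool"
    tasks: set = field(default_factory=set)  # DM tasks this entry is suitable for
    site: str = "local"


def offer(dataset_repo, resource_repo, task):
    """Group the entries usable for `task` by kind, as the GUI might list them."""
    offered = {}
    for entry in list(dataset_repo) + list(resource_repo):
        if task in entry.tasks:
            offered.setdefault(entry.kind, []).append(entry.name)
    return offered


datasets = [Entry("weather-2005", "dataset", {"clustering", "prediction"}, "dublin")]
resources = [
    Entry("k-means", "algorithm", {"clustering"}),
    Entry("apriori", "algorithm", {"association rules"}),
    Entry("node-17", "node", {"clustering", "association rules"}, "paris"),
]
print(offer(datasets, resources, "clustering"))
# {'dataset': ['weather-2005'], 'algorithm': ['k-means'], 'node': ['node-17']}
```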

The third module interprets DDM results into different ordered presentation forms. In this module, the integrated/mined result models coming from the knowledge map module in the ADMIRE Core layer are explained and evaluated.

The last module evaluates DDM results using different evaluation techniques. Measuring the effectiveness or usefulness of these results is not always straightforward, so this module also allows experienced users to add new tools or techniques for evaluating the mined knowledge.

3.2 Core Layer

The ADMIRE core layer is composed of three

parts: knowledge discovery, task management and

data/resource management.

The first part, which is the centre of this layer, mines and integrates the data and discovers the required knowledge. It contains three modules: data preprocessing; distributed data mining (DDM), with two subcomponents, local data mining (LDM) and integration/coordination; and knowledge map.

The first module performs local data preprocessing for a given task, such as data cleaning, transformation, reduction, projection, standardisation and density analysis. The preprocessed data are the input of the DDM module, whose LDM component performs data mining tasks locally. A distinctive characteristic of ADMIRE compared with other current DDM systems is that different mining algorithms can be used within a local DM task to deal with different kinds of data; the LDM component is responsible for executing these algorithms. The local results are then integrated and/or coordinated by the second component of the DDM module to produce a global model. Data preprocessing and data mining algorithms are chosen from a set of predefined algorithms in the ADMIRE system, and users can publish new algorithms to improve performance.
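The idea that a local DM task applies different algorithms to different kinds of data, after which the local results are integrated into a global model, can be sketched as below. As a stand-in for real mining algorithms, numeric attributes are summarised by count, sum and sum of squares, and categorical attributes by value frequencies; both kinds of summary merge exactly across sites. The registry `LOCAL_ALGORITHMS` and all function names are hypothetical.

```python
from collections import Counter


def summarise_numeric(values):
    return {"n": len(values), "sum": sum(values), "sumsq": sum(v * v for v in values)}


def summarise_categorical(values):
    return Counter(values)


# Hypothetical registry: the local algorithm is chosen by the kind of data.
LOCAL_ALGORITHMS = {"numeric": summarise_numeric, "categorical": summarise_categorical}


def local_mining(values, kind):
    """LDM step at one site."""
    return LOCAL_ALGORITHMS[kind](values)


def integrate_results(kind, local_results):
    """Integration step: merge the local summaries into a global model."""
    if kind == "numeric":
        n = sum(r["n"] for r in local_results)
        mean = sum(r["sum"] for r in local_results) / n
        # Population variance from pooled sums (adequate for a sketch).
        variance = sum(r["sumsq"] for r in local_results) / n - mean ** 2
        return {"mean": mean, "variance": variance}
    total = Counter()
    for r in local_results:
        total.update(r)
    return {"mode": total.most_common(1)[0][0]}


ages = [local_mining([20, 30], "numeric"), local_mining([40], "numeric")]
regions = [local_mining(["north", "north"], "categorical"),
           local_mining(["south"], "categorical")]
model = integrate_results("numeric", ages)
print(model["mean"], round(model["variance"], 2))  # 30.0 66.67
print(integrate_results("categorical", regions))   # {'mode': 'north'}
```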

The results of local DM, such as association rules, classifications and clusterings, should be collected and analysed using domain knowledge. This is the role of the last module, the knowledge map, which generates significant, interpretable rules, models and knowledge. The knowledge map also controls the whole data mining process by proposing different strategies for mining, integration and coordination in order to achieve the best performance.

The task management part plays an important role in the ADMIRE framework. It manages all execution plans created in the Interface layer: it reads an execution schema from the task repository and then schedules and monitors the execution of its tasks. Following the schedule, it carries out resource allocation, finding the most appropriate mapping between resources and task requirements; to do so, it relies on services supplied by the Data Grid layer (e.g. DGET). It then activates the tasks, whether local or distributed. Because ADMIRE is a DDM framework, this part is also responsible for coordinating the distributed execution; that is, it manages communication and synchronisation between tasks that cooperate during the preprocessing, mining or integration stage.
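How the "best mapping" between resources and task requirements is computed is not specified here. As one plausible heuristic, swapped in for illustration, the sketch below places tasks greedily, largest CPU demand first, on nodes that satisfy their CPU and memory requirements, preferring a node co-located with the task's data and then the node with the most spare CPU. All field names and values are hypothetical.

```python
def allocate(tasks, nodes):
    """Greedy placement of tasks onto nodes with enough free CPU and memory.
    Ties are broken in favour of data locality, then of spare CPU."""
    free = {n["name"]: dict(n) for n in nodes}  # copies: callers' data stay untouched
    placement = {}
    for task in sorted(tasks, key=lambda t: t["cpu"], reverse=True):
        fits = [n for n in free.values()
                if n["cpu"] >= task["cpu"] and n["mem"] >= task["mem"]]
        if not fits:
            raise RuntimeError(f"no node can host {task['name']}")
        best = max(fits, key=lambda n: (n["site"] == task["data_site"], n["cpu"]))
        best["cpu"] -= task["cpu"]
        best["mem"] -= task["mem"]
        placement[task["name"]] = best["name"]
    return placement


nodes = [
    {"name": "n1", "site": "dublin", "cpu": 4, "mem": 8},
    {"name": "n2", "site": "paris", "cpu": 8, "mem": 16},
]
tasks = [
    {"name": "mine_A", "cpu": 2, "mem": 4, "data_site": "dublin"},
    {"name": "mine_B", "cpu": 4, "mem": 4, "data_site": "paris"},
    {"name": "integrate", "cpu": 1, "mem": 1, "data_site": "dublin"},
]
print(allocate(tasks, nodes))  # {'mine_B': 'n2', 'mine_A': 'n1', 'integrate': 'n1'}
```

A greedy heuristic is fast but not guaranteed optimal; a production scheduler might instead solve the assignment as an optimisation problem.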

The role of the data/resource management part is to facilitate the entire DDM process by providing efficient control over remote resources in a distributed environment. This part creates, manages and updates information about resources in the dataset repository and the resource repository. Together with the Data Grid layer, it provides transparent access to resources across heterogeneous platforms.

3.3 Data Grid Layer

The upper part of this layer, called the virtual data grid, is a portable layer over data grid environments. Currently, most Grid data mining projects in the literature are based on the Globus Toolkit (GT) (Cannataro’a,2002)(Brezany,2003). Using the set of Grid services provided by this middleware brings some benefits: for instance, developers do not waste time dealing with the heterogeneity of organisations, platforms, data sources, etc., and software distribution is easier because GT is the most widely used middleware in the Grid community. However, as mentioned above, this approach depends on GT, which brings security overhead, and GT's organisation of the system topology and the configuration of its services are manual.

To make ADMIRE more portable and more flexible with regard to the many data grid platforms developed today, we build this portable layer as an abstraction of a virtual Grid platform. It supplies a general service-operation interface to the upper layers and homogenises different Grid middleware by mapping DM tasks from the upper layers either to Grid services following the OGSA/WSRF standard or to entities (Kechadi,2005) in the DGET model. For instance, ADMIRE is carried on the DGET middleware, where the portable layer implements two groups of entities: data entities, which deal with the data and metadata used by the upper layers, and resource entities, which deal with the resources used. The DGET system guarantees transparent access to data and resources across heterogeneous platforms.

By using this portable layer, ADMIRE can easily be carried on many kinds of data grid platforms, such as GT and DGET.
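The portable layer follows the familiar adapter pattern: upper layers program against one abstract interface, and each Grid platform gets its own back-end. Below is a minimal sketch with hypothetical class and method names; the back-ends are stubs that only describe what they would do, since no real Globus Toolkit or DGET call is shown.

```python
from abc import ABC, abstractmethod


class VirtualDataGrid(ABC):
    """Hypothetical platform-neutral interface offered to the Core layer."""

    @abstractmethod
    def locate_dataset(self, dataset_id: str) -> str: ...

    @abstractmethod
    def submit_task(self, task: str, dataset_id: str) -> str: ...


class WsrfBackend(VirtualDataGrid):
    """Would map operations onto OGSA/WSRF Grid services (stubbed)."""

    def locate_dataset(self, dataset_id):
        return f"WSRF data service for {dataset_id}"

    def submit_task(self, task, dataset_id):
        return f"WSRF job '{task}' on {dataset_id}"


class DgetBackend(VirtualDataGrid):
    """Would map operations onto DGET data and resource entities (stubbed)."""

    def locate_dataset(self, dataset_id):
        return f"DGET data entity for {dataset_id}"

    def submit_task(self, task, dataset_id):
        return f"DGET resource entity running '{task}' on {dataset_id}"


def run_mining(grid: VirtualDataGrid, task: str, dataset_id: str):
    """Core-layer code: it sees only the abstract interface."""
    return grid.locate_dataset(dataset_id), grid.submit_task(task, dataset_id)


for backend in (WsrfBackend(), DgetBackend()):
    print(run_mining(backend, "clustering", "weather-2005"))
```

Supporting a further platform would mean adding one more subclass while `run_mining` stays unchanged, which is the portability argument made for this layer.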

4 EVALUATION

Interface

Through its graphical interface, ADMIRE aims to provide user-friendly interaction for DDM users, who can operate transparently on resources in a distributed heterogeneous environment via graphical objects. Choosing resources per DM task within a DDM job is more convenient for users, and it makes it easier for the system to check the consistency of an execution schema. In addition, DM tools and algorithms are grouped according to the chosen DM task (classification, association, clustering, pre-processing, data distribution, evaluation, etc.). This approach should help users build a DDM application more easily than in the KG approach (Cannataro’a,2002), where users have to choose resources separately. Moreover, the KG system has to check the consistency of a built DM application to avoid, e.g., an execution link between a node object and a dataset object.

Users can also publish new DM tools and algorithms, as well as evaluation tools and algorithms, via this interface, which gives them better control over the mining and evaluation process.

Core layer

In this layer, ADMIRE aims to use efficient distributed algorithms and techniques and to facilitate seamless integration of distributed resources for complex problem solving. Because ADMIRE furnishes dynamic, flexible and efficient DDM approaches and algorithms, users or the system may choose different algorithms for the same DDM task according to the type or characteristics of the data. This is a distinguishing feature of ADMIRE compared with current related projects, and it allows ADMIRE to deal with distributed datasets at different levels of heterogeneity.

By providing local preprocessing, ADMIRE could avoid the complexity of federating or globally integrating heterogeneous data (or data schemas), which normally requires the intervention of experienced users, as in GridMiner (Brezany,2003).

The data/resource management and task management modules support not only all data mining activities but also heterogeneous processes. The coordination of these modules with the data mining modules (preprocessing, DDM, knowledge map) makes the ADMIRE system more robust than other knowledge grid frameworks that do not propose any data mining approach.

Data Grid layer

As mentioned above, ADMIRE relies on this layer to cope with various Grid environments. By using OGSA/WSRF services, the Data Grid layer allows ADMIRE to be ported to any data grid platform based on this standard, which has been adopted by almost all recent Grid projects. For example, users can port ADMIRE to a Globus Toolkit platform, the most widely used Grid middleware, without wasting time reinstalling a Grid platform for this new DDM framework.

Moreover, this layer lets ADMIRE integrate well with DGET and benefit from the advantages of this data grid environment presented in (Kechadi,2005). First, the dynamic, self-organising topology of DGET allows ADMIRE to carry out DM tasks on dynamic and autonomic platforms. Second, thanks to DGET's decentralised resource discovery, ADMIRE can deal with very large and highly distributed datasets, e.g. medical or meteorological data. Because this approach does not rely on any specialised server, it avoids the bottleneck problem in intensive DDM tasks, and it allows DDM applications to execute effectively when integrating and coordinating across different sites. Third, DGET does not require users to have an individual account on each resource; instead, it uses XACML (Moses,2005) to define access control policies, which allows a very large number of users to participate in DDM activities in ADMIRE. Last but not least, DM tasks in ADMIRE are more flexible in the distributed environment because DGET supports strong migration. In addition, implementing ADMIRE on the DGET platform is straightforward.
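Attribute-based access control of the kind XACML provides can be sketched in a few lines: rules test attributes of the request (who, what, which action) instead of relying on per-resource user accounts, and a combining algorithm resolves conflicts. The sketch below mimics XACML's deny-overrides rule-combining algorithm in Python; it is not XACML syntax, it ignores the Indeterminate decision, and its rules and attribute names are hypothetical.

```python
def decide(rules, request):
    """Deny-overrides combining: any applicable Deny wins, otherwise any
    applicable Permit, otherwise NotApplicable."""
    effects = [rule["effect"] for rule in rules if rule["applies"](request)]
    if "Deny" in effects:
        return "Deny"
    if "Permit" in effects:
        return "Permit"
    return "NotApplicable"


RULES = [
    {"effect": "Permit",
     "applies": lambda q: q["role"] == "researcher" and q["action"] == "read"},
    {"effect": "Deny",
     "applies": lambda q: (q["resource"].startswith("medical/")
                           and not q.get("ethics_approved", False))},
]

print(decide(RULES, {"role": "researcher", "action": "read", "resource": "meteo/2005"}))    # Permit
print(decide(RULES, {"role": "researcher", "action": "read", "resource": "medical/ward3"}))  # Deny
print(decide(RULES, {"role": "student", "action": "write", "resource": "meteo/2005"}))       # NotApplicable
```

Because decisions depend only on request attributes, new users can be admitted by attribute (for example, a role) without creating accounts on every site.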

The virtual data grid layer is the only part that must be modified to carry ADMIRE onto a new Grid platform, which makes our approach more portable.

5 CONCLUSION

In this paper, we have proposed a new framework based on Grid environments for executing new distributed data mining techniques on very large, distributed, heterogeneous datasets. We have presented its architecture and the motivation for its design, and we have discussed some related projects and compared them with our approach.

The ADMIRE project is still at an early stage. We are currently developing a prototype of each layer of ADMIRE in order to evaluate the system's features, to test each layer as well as the whole framework, and to build simulation and DDM test suites.

REFERENCES

Brezany P., Hofer J., Tjoa A. and Wohrer A. (2003). GridMiner: An Infrastructure for Data Mining on Computational Grids. In Data Mining on Computational Grids, APAC'03 Conference, Gold Coast, Australia, October.

Brezany P., Janciak I., Woehrer A. and Tjoa A. (2004). GridMiner: A Framework for Knowledge Discovery on the Grid - from a Vision to Design and Implementation. In Cracow Grid Workshop, Cracow, December 12-15.

Cannataro M. et al. (2002). A data mining toolset for distributed high performance platforms. In Proc. of the 3rd International Conference on Data Mining Methods and Databases for Engineering, Finance and Other Fields, Southampton, UK, September 2002, pp. 41-50. WIT Press.

Cannataro M., Talia D. and Trunfio P. (2002). Distributed Data Mining on the Grid. In Future Generation Computer Systems, North-Holland, vol. 18, no. 8, pp. 1101-1112.

CORBA website: http://www.corba.org.

OGSA-DAI website: http://www.ogsadai.org.uk/.

Foster I. and Kesselman C. (2001). The anatomy of the grid: Enabling scalable virtual organizations. In Int. J. Supercomputing Application, vol. 15, no. 3.

Globus Tool Kit website: http://www.globus.org.

Kargupta H. and Chan P. (2000). Advances in Distributed and Parallel Knowledge Discovery. AAAI Press/The MIT Press, London, 1st edition.

Park B. and Kargupta H. (2002). Distributed Data Mining: Algorithms, Systems, and Applications. In Data Mining Handbook, N. Ye (Ed.), pp. 341-358. IEA, 2002.

Hudzia B., McDermott L., Illahi T.N. and Kechadi M-T. (2005). Entity Based Peer-to-Peer in a Data Grid Environment. In the 17th IMACS World Congress Scientific Computation, Applied Mathematics and Simulation, Paris, France, July 11-15.

Moses T. (2005). Extensible Access Control Markup Language (XACML) Version 2.0. OASIS Standard, February 2005.

Foster I., Kesselman C., Nick J. and Tuecke S. The Physiology of the Grid: An Open Grid Services Architecture for Distributed Systems Integration. http://www.globus.org/research/papers/ogsa.pdf.

OGSI-WG website: http://www.Gridforum.org/ogsi-wg/.

Czajkowski K. et al. The WS-Resource Framework, Version 1.0. http://www-106.ibm.com/developerworks/library/ws-resource/ws-wsrf.pdf.
