METHODS OF ELECTRONIC EXAMINATION APPLIED TO STUDENTS OF ELECTRONICS

Comparison of Results with the Conventional (Paper-and-pencil) Method

Panagiotis Tsiakas, Charalampos Stergiopoulos, Maria Kaitsa, Dimos Triantis, Ioannis Fragoulis, Konstantinos Ninos

E-learning Support Team, Technological Educational Institute of Athens, 28 Ag. Spyridonos, Athens, Greece

Keywords: New technologies in education, e-learning, computerized testing systems, electronic examination, electronic evaluation, self-evaluation, “e-examination”, “e-education”.

Abstract: Electronic examination is of great interest from both an educational and a pedagogical point of view. Different methods of electronic examination were applied during three examination periods at the Technological Educational Institute (T.E.I.) of Athens to “Electronic Physics”, one of the core modules of the Department of Electronics. For this purpose, an application named “e-examination” was developed. The module was selected according to criteria concerning the applicability of the methods. As a preliminary step, the available educational material was converted into a form suitable for creating question sets for “e-examination”. Particular care was taken to avoid bias and to ensure the objectivity of the methods, and therefore the reliability of the results. The results of the electronic and the conventional examinations were then statistically processed and compared. The comparison indicated that, in some cases, students examined electronically performed better than students examined conventionally. The degree of knowledge assimilation and the efficiency of the teaching process were also investigated.

1 INTRODUCTION

Today it is widely accepted that new technologies can radically alter educational practices and enhance learning (Dede, 2000, Fox, 2002). Their incorporation into academic practice is a key element of the modern educational process (DeBord et al, 2004). In this context, new technologies have been used to improve the quality and efficiency of the education provided (Bigum, 1997, Howard et al, 2004). User-friendly applications are now available to students, supporting the teaching process through polymorphic educational material (Ali et al, 2004, Fox and Herrmann, 1997). One type of such application is the computerized testing system used for evaluating students (Buchanan, 2002).

The usefulness of electronic evaluation is still under investigation (Bull et al, 2002). At the T.E.I. of Athens, an application named “e-examination” has been developed for the examination of students (Tsiakas et al, 2005). A number of case studies have indicated that it can be used in the academic environment of the T.E.I., and the results have been encouraging enough to justify further research (Triantis et al, 2004, Tsiakas et al, 2005).

The capabilities of electronic evaluation methods let educators go beyond the limits of multiple-choice tests and make alternative assessments possible. This work compares the implemented electronic methods with the paper-and-pencil method. The purpose was to study their appropriateness, feasibility and effectiveness (Thomas, 2003) and to investigate how the examination process can become more productive and accurate.

To ensure that the results of the case studies would be realistic, reliable and comparable, some basic requirements had to be met. Students participating in the pilot program should be familiar with new technologies, with the nature of the evaluation method and with the use of the “e-examination” application. Finally, they should have access to a series of self-evaluation tests for practice and to polymorphic study material (Tsiakas et al, 2005).

Tsiakas P., Stergiopoulos C., Kaitsa M., Triantis D., Fragoulis I. and Ninos K. (2006). METHODS OF ELECTRONIC EXAMINATION APPLIED TO STUDENTS OF ELECTRONICS - Comparison of Results with the Conventional (Paper-and-pencil) Method. In Proceedings of WEBIST 2006 - Second International Conference on Web Information Systems and Technologies - Society, e-Business and e-Government / e-Learning, pages 305-311. DOI: 10.5220/0001244103050311

Copyright © SciTePress

After the examinations of the three periods had been conducted, the results were stored in a database for statistical processing. They indicated that, in some cases, students who were examined electronically performed better than those who were examined conventionally (Triantis et al, 2004). This led us to conclude that electronic examination can be used as an alternative method of evaluating students and can eventually improve the teaching process.

2 “E-EXAMINATION” APPLICATION

“E-examination” is a stand-alone application developed at the T.E.I. of Athens. It is mainly a management and editing tool that helps the teacher build assessment tests and deploy them in a form that can be displayed in a web browser. This ensures that each test is portable and cross-platform.

The tests can then be used either for examining students or for self-evaluation. The examinee answers a series of questions through a user-friendly interface. “E-examination” tests support four categories of questions:

− True or false.

− Multiple choice.

− Questions that require short calculations. In this case, students must type their answer in the corresponding field.

− Problems or exercises that require multiple steps for their solution. These steps consist of questions belonging to the previous categories.
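The four question categories can be modelled as a small data structure. The sketch below is a hypothetical Python model, not the internal design of “e-examination”: the class names, the per-question `points` and the 1% tolerance used to mark a typed numeric answer are all assumptions. It shows how a multi-step problem can be marked step by step, so that a partly solved problem still earns points.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Union


@dataclass
class TrueFalse:
    prompt: str
    answer: bool
    points: float = 1.0

    def score(self, response: Optional[bool]) -> float:
        return self.points if response == self.answer else 0.0


@dataclass
class MultipleChoice:
    prompt: str
    options: List[str]
    correct_index: int
    points: float = 1.0

    def score(self, response: Optional[int]) -> float:
        return self.points if response == self.correct_index else 0.0


@dataclass
class ShortCalculation:
    prompt: str
    answer: float
    rel_tol: float = 0.01  # hypothetical tolerance for a typed numeric answer
    points: float = 1.0

    def score(self, response: Optional[float]) -> float:
        if response is None:  # field left blank
            return 0.0
        return self.points if math.isclose(response, self.answer, rel_tol=self.rel_tol) else 0.0


Step = Union[TrueFalse, MultipleChoice, ShortCalculation]


@dataclass
class MultiStepProblem:
    prompt: str
    steps: List[Step] = field(default_factory=list)

    def score(self, responses: List) -> float:
        # Each step is marked on its own, so a partly solved problem still earns points.
        return sum(step.score(r) for step, r in zip(self.steps, responses))


# Usage: a diode problem broken into two steps (values for illustration only).
problem = MultiStepProblem(
    prompt="A Si diode in series with 1 kOhm is connected to a 5 V source.",
    steps=[
        TrueFalse("The diode is forward biased.", answer=True),
        ShortCalculation("Diode current in mA, assuming a 0.7 V drop.", answer=4.3),
    ],
)
print(problem.score([True, 4.3]))  # 2.0
print(problem.score([True, 5.0]))  # 1.0
```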

Figure 1: A multiple-choice sample question of the electronic examination.

Figure 1 shows a sample screen of a multiple-choice question. The user interface is divided into two areas. The wider one displays the question, the possible answers and the navigation panel. The other area displays real-time information such as the remaining time, the total number of questions in the test, the current question number and the chapter it refers to.

3 REQUIREMENTS AND PREPARATION

“E-examination” was used to evaluate students attending the module “Electronic Physics”, which covers semiconductors, pn junctions and diode circuits, bipolar and field-effect transistors, and bias circuits. According to the current curriculum of the Department of Electronics of the T.E.I. of Athens, it is a core module taught in the first semester. The module was selected according to the following criteria:

− The teacher should be able to create sets of questions of the four types supported by the application.

− Students of this department were, to a greater or lesser extent, accustomed to new technologies and the use of computers.

− Their everyday contact with new technologies made them willing to try new examination methods.

Besides the scheduled lectures and the companion book, polymorphic educational material had to be available to every student. This material could be found on a web platform named “e-education”, which contains web pages for the taught modules. The platform plays a supporting role, operating as a digital library and a free, instant information provider. From its web pages students can download:

− Lecture notes.

− Theory questions, with or without answers.

− Problems and exercises with worked solutions or just hints.

− Questions from past examination periods with their solutions.

− Self-evaluation practice tests produced by the “e-examination” application.

A series of sessions was also organized to familiarize students with the “e-examination” user interface. Students also took a sample electronic test, which simulated the final one and had no effect on the final assessment.

The teacher also had to prepare the subjects on which students would be examined electronically. The challenge was to break large problems and exercises down into questions that could be edited and managed by “e-examination”, while covering a wide range of the module topics (Epstein et al, 2001).

Another issue was the equal distribution of students into two large groups each time. One group would be examined conventionally with the paper-and-pencil method and the other electronically. During each semester, students took three ordinary paper-and-pencil multiple-choice tests whose scores had no effect on their final grade for the module. The score distribution was divided into bands ("scales"), and the students in each band were randomly and equally divided between the two groups.
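The group assignment described above is a stratified random split. The following is a minimal sketch, assuming each student's pre-test score is the average of the three practice tests and that the "scales" are score bands of equal width (2.5 points here); neither detail is specified in the text, and `stratified_split` is a hypothetical name. Odd-sized bands hand their extra student to the two groups alternately, so the overall group sizes differ by at most one.

```python
import random
from collections import defaultdict


def stratified_split(pretest_scores, band_width=2.5, max_score=10.0, seed=None):
    """Randomly split students into two near-equal groups, band by band.

    pretest_scores: dict mapping a student id to a pre-test score in [0, max_score].
    band_width: width of each score band ("scale"); a hypothetical choice.
    """
    rng = random.Random(seed)
    top_band = int(max_score // band_width) - 1
    bands = defaultdict(list)
    for student, score in pretest_scores.items():
        # A perfect score would open a band of its own, so clamp it into the top band.
        bands[min(int(score // band_width), top_band)].append(student)

    electronic, conventional = [], []
    extra_to_electronic = True  # which group receives the odd student of the next odd band
    for band in sorted(bands):
        members = bands[band]
        rng.shuffle(members)  # random order within the band
        half = len(members) // 2
        if len(members) % 2 == 1:
            if extra_to_electronic:
                half += 1
            extra_to_electronic = not extra_to_electronic
        electronic.extend(members[:half])
        conventional.extend(members[half:])
    return electronic, conventional
```

Splitting inside each band, rather than over the whole class at once, is what keeps the two groups comparable in prior performance; a fixed `seed` makes an assignment reproducible.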

4 THE APPLIED METHODS

Four types of electronic examination were applied over the last three semesters, and the results of each were compared with those of a corresponding conventional examination. The methods described in Sections 4.4 and 4.5 were both applied in the third semester to the same group of students; the final grade for the module in that semester was the average of the two examinations. In every examination the questions did not necessarily cover all of the module topics, and their difficulty varied from question to question. However, the electronic and conventional examinations being compared were of the same overall difficulty and covered the same range of module content, and the available time was realistic and adequate. Every correct answer added a certain number of points to the final result. Students were marked on a scale of 0.0 to 10.0, and grades higher than 5.0 were considered successful. The comparison was based on the following parameters:

− Percentage of students who passed the examination

− Percentage of students who received an excellent score (>7.5/10)

− Average score of students who succeeded

− Average score of students who participated
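The four comparison parameters can be computed directly from a list of final scores. In this sketch the thresholds follow the text (strictly above 5.0 for success, above 7.5 for an excellent score); taking the excellent percentage over the students who passed, rather than over all participants, is our assumption, since the denominator is not stated. The function name `summarize` is hypothetical.

```python
from statistics import mean


def summarize(scores, pass_mark=5.0, excellent_mark=7.5):
    """Return the four comparison parameters for one group's final scores (0-10 scale)."""
    passed = [s for s in scores if s > pass_mark]
    excellent = [s for s in passed if s > excellent_mark]
    return {
        "pct_succeeded": 100 * len(passed) / len(scores),
        # Assumption: the excellent share is taken over students who passed.
        "pct_excellent": 100 * len(excellent) / len(passed) if passed else 0.0,
        "avg_succeeded": mean(passed) if passed else None,
        "avg_participated": mean(scores),
    }
```

For example, `summarize([4.0, 5.5, 8.0, 9.0])` reports 75% succeeded, an average of 7.5 for those who succeeded and 6.625 for all participants.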

4.1 The Conventional (Paper-and-pencil) Method

The conventional method is the one currently used to evaluate students in most modules of the Department of Electronics. The teacher prepares four subjects that cover as many of the module topics as possible. The subjects consist of theory questions and problems of varying difficulty. Students try to answer all subjects as well as they can, and the teacher evaluates their effort.

4.2 The “Classic” Method

In this method, wrong answers and unanswered questions both count as zero. The final score of the examination is the sum of the points awarded for correctly answered questions. Table 1 presents the results of the two types of examination.

Table 1: The results of the examination methods applied.

| Parameter | e-examination | Conventional examination |
|---|---|---|
| Number of students | 44 | 45 |
| Succeeded (>5.0/10) | 28 | 23 |
| % Succeeded (>5.0/10) | 64% | 51% |
| % Excellent score (>7.5/10) | 50% | 43% |
| Average score of students who succeeded | 6.6 | 6.4 |
| Average score of students who participated | 5.4 | 5.0 |
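As a minimal sketch of the classic marking rule, assuming each question has a fixed point value and an answer key (the item weights are not given in the text, and `classic_score` is a hypothetical name):

```python
def classic_score(points, responses, key):
    """Classic marking: the sum of the points of correctly answered questions.

    Wrong answers and unanswered questions (None) both contribute zero.
    """
    return sum(p for p, given, correct in zip(points, responses, key)
               if given is not None and given == correct)


# Hypothetical four-question test with an answer key and point values.
points = [2, 2, 3, 3]
key = ["b", True, "c", "a"]
responses = ["b", None, "d", "a"]  # correct, blank, wrong, correct
print(classic_score(points, responses, key))  # 5
```

Because a wrong answer costs nothing, guessing on an unknown question can only help the examinee under this rule.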

4.3 The Method of Negative Score

This method differs from the previous one in that a certain number of points is subtracted from the final result whenever an examinee gives a wrong answer. If the examinee does not answer a question, no points are subtracted. In this way, students are discouraged from simply guessing. Table 2 presents the results along with those of the conventional method.


Table 2: The results of the examination methods applied.

| Parameter | e-examination | Conventional examination |
|---|---|---|
| Number of students | 49 | 48 |
| Succeeded (>5.0/10) | 19 | 24 |
| % Succeeded (>5.0/10) | 39% | 50% |
| % Excellent score (>7.5/10) | 26% | 42% |
| Average score of students who succeeded | 5.7 | 6.3 |
| Average score of students who participated | 4.4 | 5.3 |
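The negative-score rule can be sketched the same way. The text does not say how many points a wrong answer costs, so `penalty` below is a hypothetical fraction of the question's value, and both function names are illustrative. A common choice in formula scoring is a penalty of 1/(k-1) for a question with k options: a blind guess then has an expected value of exactly zero, which is what removes the incentive to guess.

```python
from fractions import Fraction


def negative_score(points, responses, key, penalty):
    """Negative marking: a correct answer adds its points, a wrong answer subtracts
    `penalty` times its points, and an unanswered question (None) adds nothing."""
    total = 0
    for p, given, correct in zip(points, responses, key):
        if given is None:
            continue  # leaving a question blank is never penalized
        total += p if given == correct else -penalty * p
    return total


def expected_guess_value(n_options, penalty):
    """Expected score, per point of question value, of a blind guess among n_options."""
    p_right = Fraction(1, n_options)
    return p_right - (1 - p_right) * penalty


# Same hypothetical test as before, with a quarter of the value lost per wrong answer.
points = [2, 2, 3, 3]
key = ["b", True, "c", "a"]
responses = ["b", None, "d", "a"]
print(negative_score(points, responses, key, Fraction(1, 4)))  # 17/4
print(expected_guess_value(4, Fraction(1, 3)))  # 0
```

Whether a negative total is floored at zero is not stated in the text; this sketch does not floor it.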

4.4 The Method of Multiple Paths (First Variation)

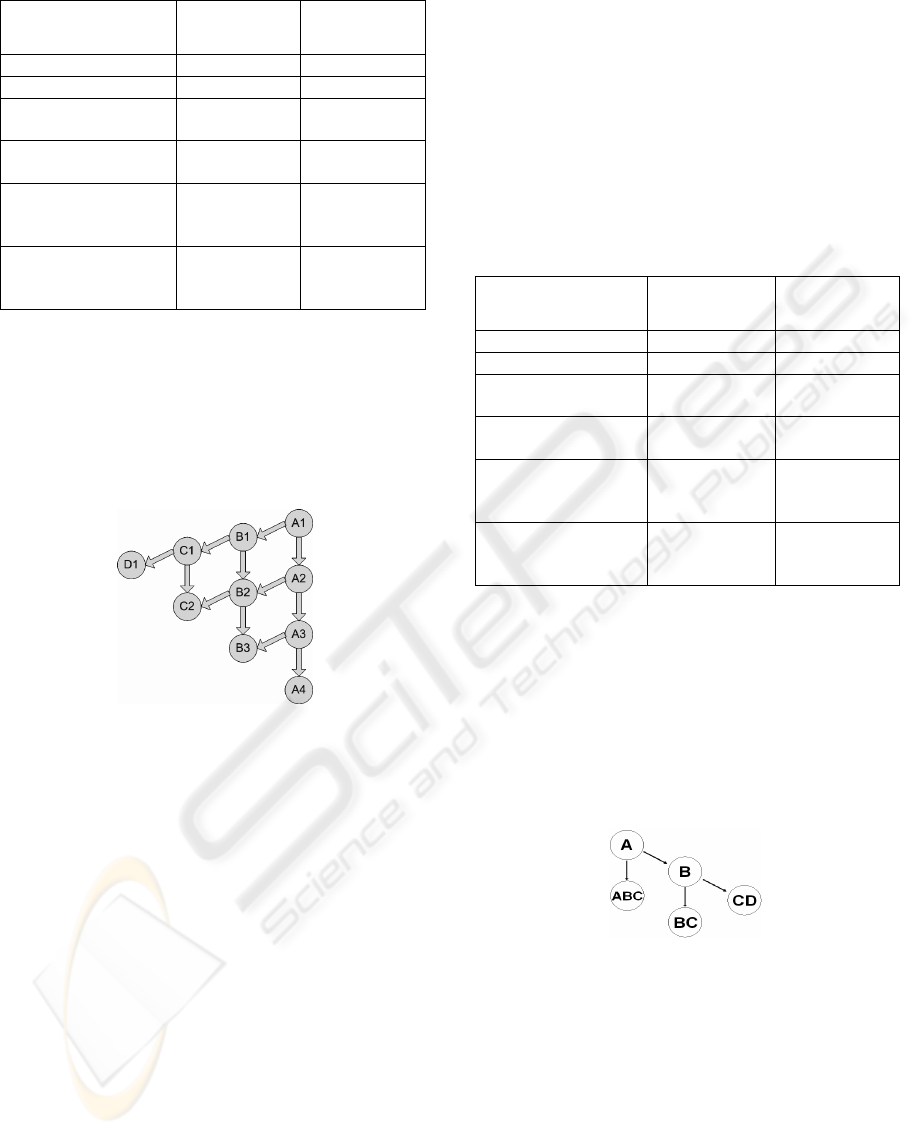

In this method, as shown in Figure 2, there are multiple paths which lead to the completion of the test.

Figure 2: All possible paths the system leads the student to follow during the examination.

The test is divided into groups of questions of different difficulty levels. Groups A1-A4 contain basic questions through which teachers can investigate whether students are familiar with the basic concepts of the module. Groups B1-B3 are used to check whether students have adequately comprehended fundamental concepts. Groups C1 and C2 contain questions of medium difficulty that help teachers verify whether students have fully understood the main topics. Finally, group D1 is a collection of more sophisticated and specialized questions that investigate students' comprehension of various module topics and whether they have developed judgment and an analytical way of thinking. Accordingly, questions in groups A1-A4 carry the minimum weight in the final score, while questions in group D1 carry the maximum weight.

At the beginning of the test, all students start with group A1. They proceed to a group of questions of the next difficulty level if they achieve the minimum score required; if not, the system automatically leads them to the next group of questions of the same difficulty level. This process is repeated until the end of the test. The final mark is the sum of the marks of all groups on the path the student has followed, so an examinee who cannot reach the groups of high difficulty will inevitably receive a low final mark. In all cases, students have to answer four sets of questions. Table 3 presents the results of this electronic examination and of the corresponding conventional one.

Table 3: The results of the examination methods applied.

| Parameter | e-examination | Conventional examination |
|---|---|---|
| Number of students | 41 | 40 |
| Succeeded (>5.0/10) | 25 | 22 |
| % Succeeded (>5.0/10) | 61% | 55% |
| % Excellent score (>7.5/10) | 48% | 41% |
| Average score of students who succeeded | 6.5 | 6.3 |
| Average score of students who participated | 5.3 | 5.1 |
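Because Figure 2 is not reproduced here, the exact wiring between groups is an assumption. The sketch below uses the simplest rule consistent with the description: reaching the minimum score moves the student to the first unvisited group of the next level, and missing it moves them to the next group of the same level, for four sets in total. Under this rule the four sets use exactly the groups listed (A1-A4, B1-B3, C1-C2, D1), a student must reach the minimum score in each of the first three sets to reach D1, and two failures at level A leave too few sets to reach level C, matching the behaviour described in Section 5. The function `first_variation_path` is hypothetical.

```python
LEVELS = ["A", "B", "C", "D"]
GROUPS_PER_LEVEL = {"A": 4, "B": 3, "C": 2, "D": 1}


def first_variation_path(outcomes, n_sets=4):
    """Return the groups visited, e.g. ["A1", "B1", "C1", "D1"].

    outcomes[i] is True if the student reached the minimum score in the i-th set.
    Assumed rule: success moves up one level, failure moves to the next group
    of the same level.
    """
    level = 0
    visited_per_level = {lvl: 0 for lvl in LEVELS}
    path = []
    for reached_minimum in outcomes[:n_sets]:
        lvl = LEVELS[level]
        visited_per_level[lvl] += 1
        # With four sets no level runs out of groups (A needs at most 4, D at most 1).
        assert visited_per_level[lvl] <= GROUPS_PER_LEVEL[lvl]
        path.append(f"{lvl}{visited_per_level[lvl]}")
        if reached_minimum and level < len(LEVELS) - 1:
            level += 1  # move up one difficulty level
        # otherwise stay on the same level and take its next group
    return path


print(first_variation_path([False, False, True, True]))  # ['A1', 'A2', 'A3', 'B1']
```

Since the final mark sums the marks of the visited groups and the weights grow with the level, the path alone already bounds the highest mark a student can obtain.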

4.5 The Method of Multiple Paths (Second Variation)

This method is similar to the previous one. The difference, as shown in Figure 3, is that students necessarily proceed to more difficult questions. There are only three possible routes leading to the completion of the test.

Figure 3: All possible paths the system leads the student to follow during the examination.

All students begin with the same set of questions. Once again, the system decides which group of questions the examinee will face next, depending on the score achieved in the current group. If the score is lower than the minimum required, the system leads the student to a final set of selected questions of mixed difficulty. In this way, the examinee has the opportunity to answer questions of at least C-level difficulty. The final mark is the sum of the marks of all groups on the path the student has followed. Table 4 presents the results of this variation.

Table 4: The results of the examination methods applied.

| Parameter | e-examination | Conventional examination |
|---|---|---|
| Number of students | 41 | 40 |
| Succeeded (>5.0/10) | 27 | 22 |
| % Succeeded (>5.0/10) | 66% | 55% |
| % Excellent score (>7.5/10) | 59% | 41% |
| Average score of students who succeeded | 6.7 | 6.3 |
| Average score of students who participated | 5.6 | 5.1 |

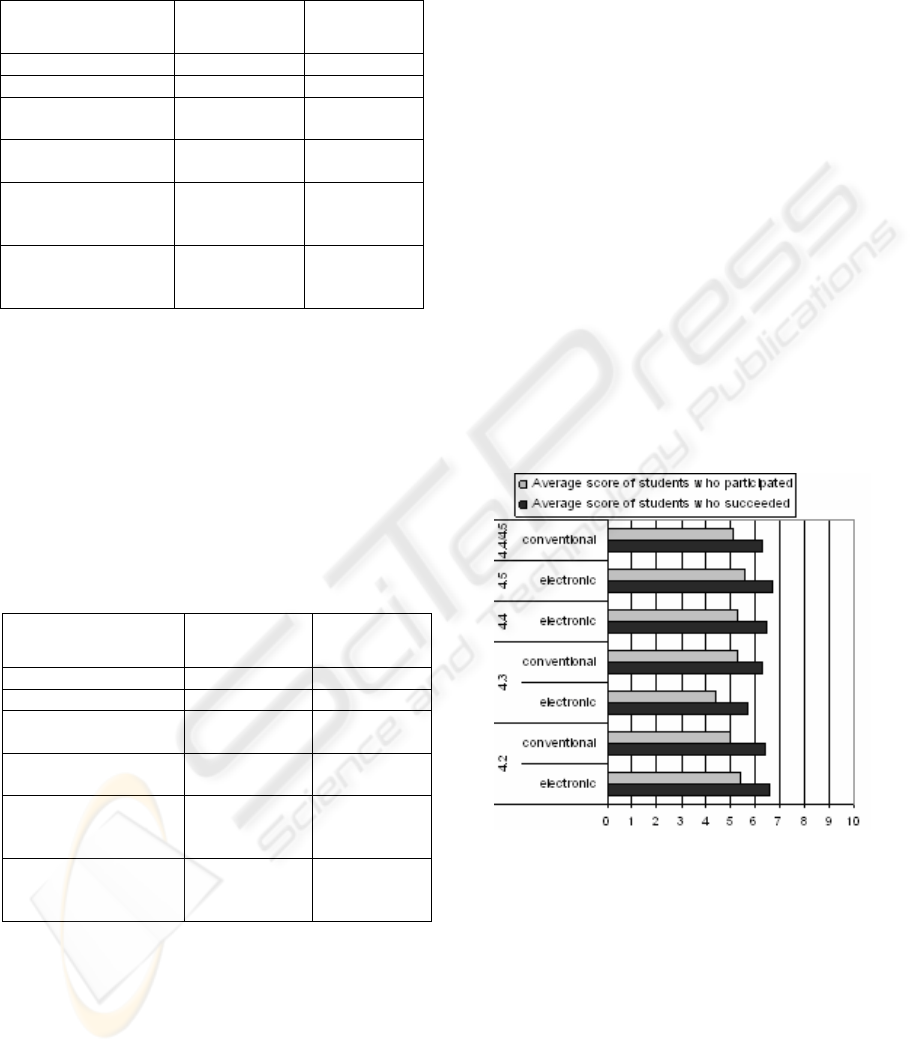

5 RESULTS

In all cases of electronic examination except the one with negative marking, students performed better overall than with the conventional method, and their scores were also higher. This is summarized in Table 5 and shown in Figure 4.

Table 5: The overall results of the examination methods applied.

| Parameter | e-examination | Conventional examination |
|---|---|---|
| Number of students | 175 | 133 |
| Succeeded (>5.0/10) | 99 | 69 |
| % Succeeded (>5.0/10) | 57% | 52% |
| % Excellent score (>7.5/10) | 46% | 42% |
| Average score of students who succeeded | 6.4 | 6.3 |
| Average score of students who participated | 5.2 | 5.1 |
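The student counts and success percentages in Table 5 can be reproduced from Tables 1-4. Note that the 41 students of Sections 4.4 and 4.5 contribute two electronic results each, while their single conventional comparison group (40 students, identical in Tables 3 and 4) is counted once. The helper `pooled` is illustrative.

```python
# (students, succeeded) per electronic examination, from Tables 1-4.
electronic = {"4.2": (44, 28), "4.3": (49, 19), "4.4": (41, 25), "4.5": (41, 27)}
# Sections 4.4 and 4.5 shared one conventional comparison group, counted once.
conventional = {"4.2": (45, 23), "4.3": (48, 24), "4.4/4.5": (40, 22)}


def pooled(results):
    """Return (students, succeeded, % succeeded rounded to an integer)."""
    students = sum(n for n, _ in results.values())
    succeeded = sum(s for _, s in results.values())
    return students, succeeded, round(100 * succeeded / students)


print(pooled(electronic))    # (175, 99, 57)
print(pooled(conventional))  # (133, 69, 52)
```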

The difference in performance is mainly due to the following factors:

− The electronic examinations contain more questions at all levels of difficulty, covering a wider range of the module topics. Students can therefore find more questions that are easier for them to answer.

− Complex problems can be broken down into simpler ones, so students can answer some questions and score some points even if they do not know the complete solution. In the conventional method, usually only the final result is marked and students get no points for unsuccessful attempts.

− This way of presenting complex problems guides students towards the final solution. It also lets students practice the way of thinking they have been taught throughout the semester for solving complex problems.

Some of the electronic examination methods implemented are rather strict. One example is the method of negative score: students who took this examination did not achieve high scores, and the success rate was low (Figures 4, 5 - case 4.3). This is due to students' hesitation to answer when they are not sure, which eliminates the factor of sheer luck. Additionally, since the system cannot see how students reason while taking the test, no points are awarded to students who know how to solve a problem or exercise unless all calculations and the final result are correct. The answers must therefore be accurate.

Figure 4: Average scores of students.

The classic method shows improved results compared with the conventional one (Figures 4, 5 - case 4.2). In both cases, the subjects were of equal difficulty and did not necessarily cover all topics of the module. The difference in performance is mainly due to the fragmentation of complex questions and exercises.

The methods of multiple paths were applied to the same group of students. In both cases, the students performed better than those examined conventionally. The first variation is clearly stricter than the second one (Figures 4, 5 - cases 4.4, 4.5). If students fail to get the minimum score in group A1, they are offered another opportunity, as the system guides them to a group made up of the same type of questions (A2). If they fail once again to achieve the minimum score required, they are “locked” in groups of low difficulty and will eventually fail the exam. To pass the examination, even with a minimum score, a student must reach at least the level C groups. Students who cannot reach D1 will never achieve a score greater than 7.5/10, the score considered “excellent”.

Figure 5: Percentages of success and excellence.

The second variation is less strict. If students fail to pass the first group of type A questions, the system directs them to a group consisting of A, B and C type questions. Hence, even in this case, an examinee has a chance to pass the test, since C-level questions are reached. The results have shown that, with this method, students who could not pass the test under the first variation but came close managed to achieve the minimum score required under the second variation. In addition, students who passed the examination performed better, and many of them exceeded the limit of 7.5/10.

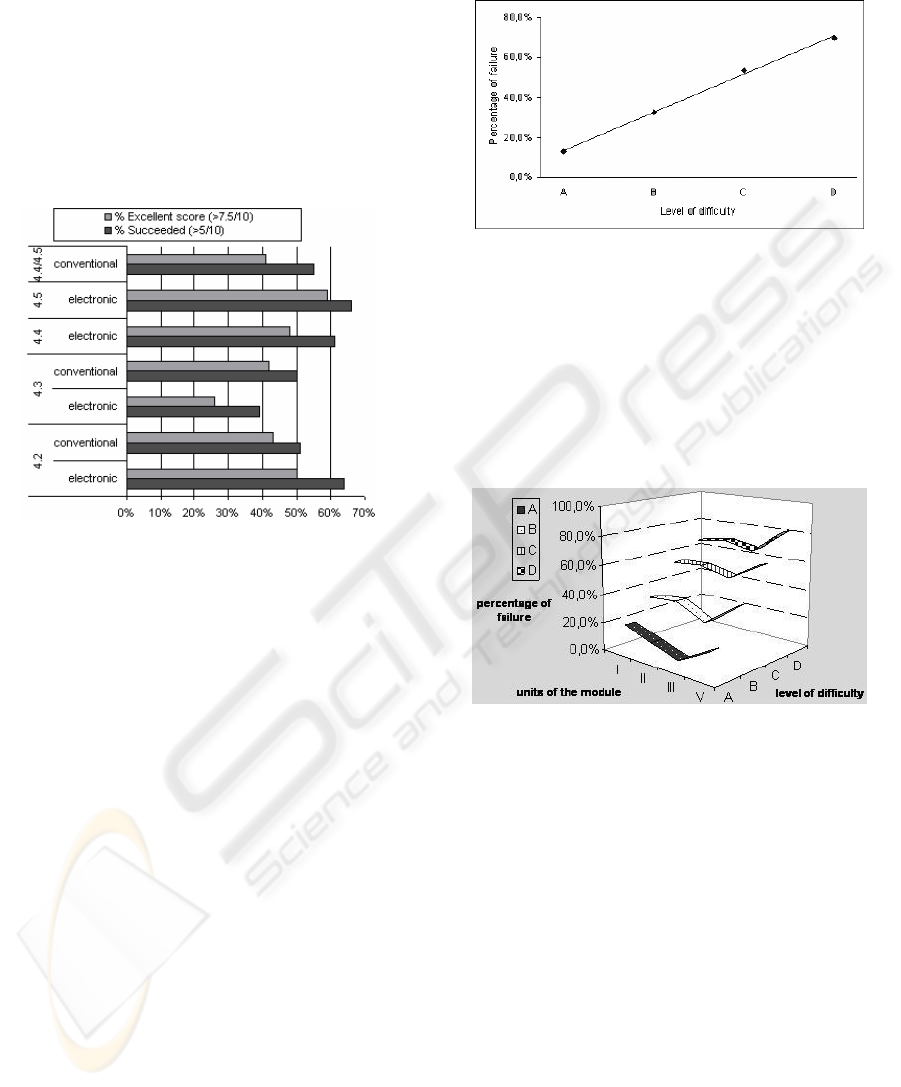

Teachers, for their part, can check whether the questions they have prepared are fairly distributed among groups and properly assigned to difficulty levels, by examining the results for each group of questions against its difficulty level. For example, Figure 6 shows this relation for the first variation of the multiple path method (Section 4.4). The percentage of failure increases linearly with the difficulty level: at level D it is higher than at level C and almost four times higher than at level A. This indicates that groups A1-A4 include the easiest questions, while group D1 includes the most difficult ones.

Figure 6: Students' performance related to the difficulty level of questions for case 4.4.
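A per-level failure percentage like the one plotted in Figure 6 can be computed from per-question records. This sketch assumes “percentage of failure” means the share of wrong answers among all answers to questions of that level; the text does not define it, the function name is hypothetical, and the sample records are toy values, not data from the study.

```python
from collections import defaultdict


def failure_rate_by_level(answers):
    """answers: iterable of (level, correct) pairs, one per answered question.

    Returns {level: percentage of wrong answers}, ordered by level.
    """
    total = defaultdict(int)
    wrong = defaultdict(int)
    for level, correct in answers:
        total[level] += 1
        if not correct:
            wrong[level] += 1
    return {lvl: 100 * wrong[lvl] / total[lvl] for lvl in sorted(total)}


# Toy records for illustration only (not the study's data).
toy = [("A", True), ("A", True), ("A", True), ("A", False), ("D", False), ("D", True)]
print(failure_rate_by_level(toy))  # {'A': 25.0, 'D': 50.0}
```

A failure rate that does not rise with the level would suggest that some questions were assigned to the wrong difficulty group.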

Statistical processing of the results can also help teachers locate topics of the module that students failed to comprehend and focus their tutoring on these subjects the following semester. This is shown in Figure 7, which gives, for each unit of the module, the percentage of failure related to the difficulty level of the test questions.

Figure 7: The relation between the percentage of failure, the units of the module and the difficulty level of questions.
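Extending the same idea to the unit-by-level breakdown of Figure 7, a teacher could flag the (unit, level) cells whose failure percentage exceeds a chosen threshold as topics to revisit the following semester. The 50% threshold, the record format and the name `weak_units` are hypothetical.

```python
from collections import defaultdict


def weak_units(answers, threshold=50.0):
    """answers: iterable of (unit, level, correct) tuples.

    Returns {(unit, level): failure %} for the cells whose failure percentage
    exceeds `threshold` (a hypothetical cut-off).
    """
    wrong = defaultdict(int)
    total = defaultdict(int)
    for unit, level, correct in answers:
        total[(unit, level)] += 1
        if not correct:
            wrong[(unit, level)] += 1
    rates = {cell: 100 * wrong[cell] / total[cell] for cell in sorted(total)}
    return {cell: rate for cell, rate in rates.items() if rate > threshold}


# Toy records for illustration only (not the study's data).
toy = [
    ("pn junctions", "B", False), ("pn junctions", "B", False), ("pn junctions", "B", True),
    ("BJT", "C", True), ("BJT", "C", False),
]
print(weak_units(toy))  # flags only ('pn junctions', 'B')
```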

6 CONCLUSIONS

For the first time at the T.E.I. of Athens, a coordinated and substantial effort has been made to incorporate new technologies into the educational process. Electronic examinations have been implemented as a pilot program for a specific module. The results showed that, with three of the four electronic methods, students performed better than with the conventional examination. Our goal was not to find out which method is best, but to make sure that the methods are applicable, feasible and effective.

The results demonstrated that electronic examination methods can be as strict as teachers wish (Bigum, 1997). Some methods, like the negative score method, make it hard for students to pass. Others can help weak students pass the exams and consistent students perform even better (method of multiple paths, second variation). It is up to teachers to decide which method to apply, and the nature of the module and its topics is also a significant factor in this decision. The ideal examination may well combine more than one method.

Unlike previous efforts, which failed to stimulate the interest of the institution's academic community, this time the feedback from students and teachers has been positive and encouraging. The informatics course taught in secondary education and the use of computers in everyday life create a suitable background for students to adopt the newly introduced tools.

In conclusion, innovation in the field of education attracts students' interest (Bloom and Hough, 2003), because students are encouraged to develop initiative and pursue knowledge rather than merely react and absorb. The right pace has to be found to achieve the best possible results for education. Those results will require an intense focus on the substance of what the new technology can deliver, as much as on the process (Fox, 2002, Howard et al, 2004). We will still need libraries, seminars and tutorials, faculties, books, laboratories and residential environments. The role of new technologies is not to replace or even degrade the traditional forms of teaching, but to strengthen what already exists and to extend our capacities (Bigum, 1997). This should result in a higher degree of knowledge assimilation and a more efficient teaching process.

ACKNOWLEDGMENTS

This work is co-funded 75% by the E.U. and 25% by the Greek Government under the framework of the Education and Initial Vocational Training Program – “Reformation of Studies Programmes of Technological Educational Institution of Athens”.

REFERENCES

Ali N.S., Hodson-Carlton K., and Ryan M., 2004, Students’ perceptions of online learning: Implications for teaching, Nurse Educator, 29, 111-115.

Bigum C., 1997, Teachers and computers. In control or being controlled? Australian Journal of Education, 41(3), 247-261.

Bloom K.C., and Hough M.C., 2003, Student satisfaction with technology-enhanced learning. Computers, Informatics, Nursing, 21, 241-246.

Buchanan T., 2002, Online assessment: Desirable or dangerous? Professional Psychology: Research & Practice, 33, 148-154.

Bull J., Conole G., Davis H.C., White S., and Sclater N., 2002, Rethinking assessment through learning technologies. In: Proceedings of the 19th Annual Conference of the Australasian Society for Computers in Learning in Tertiary Education (ASCILITE 2002), Vol. 1, 8-11 Dec 2002, Auckland, New Zealand. Auckland: UNITEC Institute of Technology.

DeBord K.A., Aruguete M.S., and Muhlig J., 2004, Are computer-assisted teaching methods effective? Teaching of Psychology, 31, 65-68.

Dede C., 2000, Emerging Technologies and Distributed Learning in Higher Education. In: D. Hanna, ed., Higher Education in an Era of Digital Competition: Choices and Challenges, New York, 2000, New York: Atwood, 71-92.

Epstein J., Klinkenberg W.D., Wiley D., and McKinley L., 2001, Insuring sample equivalence across Internet and paper-and-pencil assessments, Computers in Human Behavior, 17, 339-346.

Fox R., and Herrmann A., 1997, Designing study materials in new times: Changing distance education. In: T. Evans, V. Jakupec and D. Thompson, eds, Research in Distance Education, 1997, Geelong. Geelong: Deakin University Press, 34-44.

Fox R., 2002, Online technologies changing university practices, In: A. Herrmann and M. M. Kulski, eds, Flexible Futures in Tertiary Teaching, 2-4 February 2000, Curtin University of Technology, Perth, WA. Perth: Curtin University of Technology, 235-41.

Howard C., Schenk K., and Discenza R., 2004, Distance Learning and University Effectiveness: Changing Educational Paradigms for Online Learning, London: Information Science Publishing (Idea Group Inc.).

Thomas P.G., 2003, Evaluation of Electronic Marking of Examinations, In: Proceedings of the 8th Annual Conference on Innovation and Technology in Computer Science Education (ITiCSE 2003), 30 June – 2 July 2003, Thessaloniki, Greece. New York: Association for Computing Machinery, 50-54.

Triantis D., Stavrakas I., Tsiakas P., Stergiopoulos C., and Ninos D., 2004, A pilot application of electronic examination applied to students of electronic engineering: Preliminary results. WSEAS Transactions on Advances in Engineering Education, 1, 26-30.

Tsiakas P., Stergiopoulos C., Kaitsa M., and Triantis D., 2005, New technologies applied in the educational process in the T.E.I. of Athens. The case of “e-education” platform and electronic examination of students. Results. WSEAS Transactions on Advances in Engineering Education, 2, 192-196.
