AN AUTHORING TOOL TO DEVELOP ADAPTIVE ASSESSMENTS

Proposal Model to Construct Adaptive Assessment Items

Héctor Barbosa, Francisco García

Departamento de Informática y Automática, Universidad de Salamanca, Plaza de la Merced s/n, Salamanca, España

Keywords: Software tools for E-learning, E-learning standards, learning materials development.

Abstract: This paper presents a proposal for the development of an authoring tool for defining assessment items for e-learning platforms that follows accepted, open standards such as IMS and XML. The main goal of this model is the definition and packaging of semantic learning objects that can be integrated into and delivered to open e-learning platforms.

1 INTRODUCTION

In recent years, instructional and educational institutions have been incorporating information and communication technologies into learning and teaching processes in order to increase the quality, efficiency, and dissemination of education. Insofar as those projects address the particular needs of individuals, their success and impact could be increased by adapting to each user so that the learning experience is enhanced.

However, we must ensure that those efforts do not become isolated islands, so we should build standardized tools that are applicable, compatible, and interchangeable with one another. In addition, the emergence of the Semantic Web has allowed the development of systems that can satisfy the requirements of open systems. One accepted standard for the development of educational objects is IMS (IMS organization, 2004), from a global learning consortium that develops and promotes the adoption of open technical specifications for interoperable learning technologies, which become the standards for delivering learning products worldwide. Beyond the inherent importance of developing e-learning platforms, we want to emphasise the role of the assessment activity within the e-learning process. We concentrate on this task and examine how it can help improve the e-learning process for all participants: students, teachers, and content designers.

2 IMPORTANCE OF THE ASSESSMENT ACTIVITY IN THE EDUCATIVE PROCESS

Traditionally, the assessment activity has been seen as a task apart from the e-learning process, and there is a danger in focusing research on assessment specifically (Booth, 2003), as this tends to isolate the assessment process from teaching and learning in general.

The results of the tests taken by the students could allow the web site to be adapted to reflect the new knowledge topics or the new syllabus to be covered. According to the Australian Flexible Learning Framework (Backroad Connections, 2003), assessment, especially when it is included within real learning tasks or exercises, can be an essential part of the learning experience, giving the entire web site the ability to adapt itself to the needs of its users. This could be a valuable feature of an educational web site because it improves the online teaching experience by giving the student appropriate feedback, re-adapting the educational content to the new knowledge level, and providing user-tailored content.

For the student, the assessment activity informs progress and guides learning; it is also essential for the accreditation process and measures the student's success. Assessment tasks can be seen as the active components of study; assignments also provide learners with opportunities to discover whether or not they understand, and whether they are able to perform competently and demonstrate what they have learnt in their studies. Furthermore, the feedback and grades that assessors communicate to students serve to both teach and motivate (Thorpe, 2004).

Barbosa H. and García F. (2006). AN AUTHORING TOOL TO DEVELOP ADAPTIVE ASSESSMENTS - Proposal Model to Construct Adaptive Assessment Items. In Proceedings of WEBIST 2006 - Second International Conference on Web Information Systems and Technologies - Society, e-Business and e-Government / e-Learning, pages 379-382. DOI: 10.5220/0001242803790382. Copyright © SciTePress

From our point of view, the assessment activity can be considered an integrating step that helps the entire process adapt itself to the user's needs, giving feedback to both the students and the instructors.

3 DESIRABLE CHARACTERISTICS FOR AN ASSESSMENT TOOL

Nowadays, it is necessary to produce Internet-based educational systems that enable the dissemination of education and cover the needs of diverse learner group profiles. To achieve this, it is desirable that such systems perform automatic tasks to adapt themselves to each user, separating content from its presentation by using a semantic approach rather than a syntactic one, and thus defining a meaningful web.

Consequently, learning systems must be flexible and efficient, and one way to accomplish that is for them to be open and standardized. We want to focus on the following standards by giving their general characteristics and their support features for a learning system:

One aim is to make those systems work within adaptive learning systems and, given that the assessment activity is an important and integral part of the e-learning process, it is desirable that this evaluation activity be adaptable as well. If we want the assessment to be interoperable, compatible, and shareable, we have to develop a standardized tool (Barbosa, 2005).

4 PROPOSAL FOR AN AUTHORING TOOL FOR ADAPTIVE ASSESSMENTS

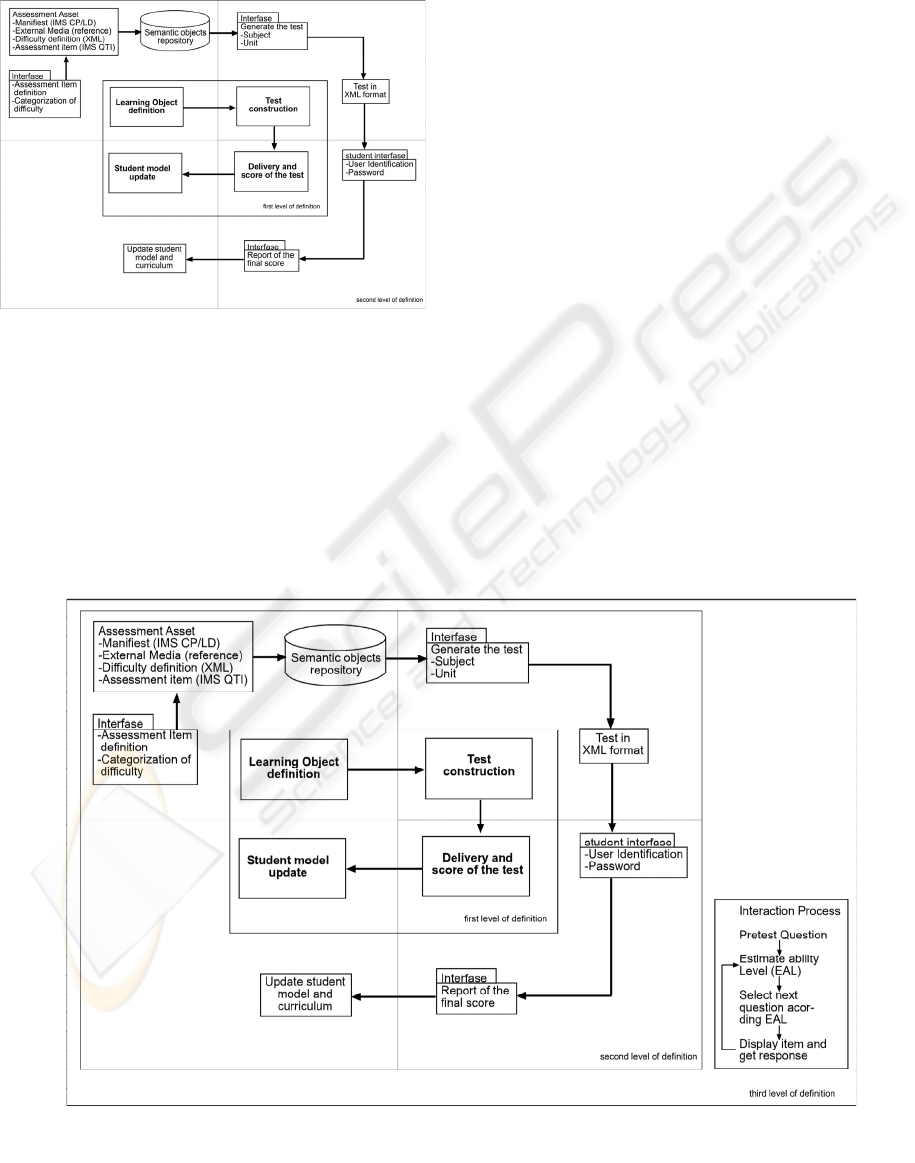

For the development of our Adaptive Assessment Tool, we outlined a model that will guide the process. This model (figure 5) has three levels of definition, starting with the first level, which shows the model in its most abstract form, and ending with the third level, which is the most concrete definition of the same model.

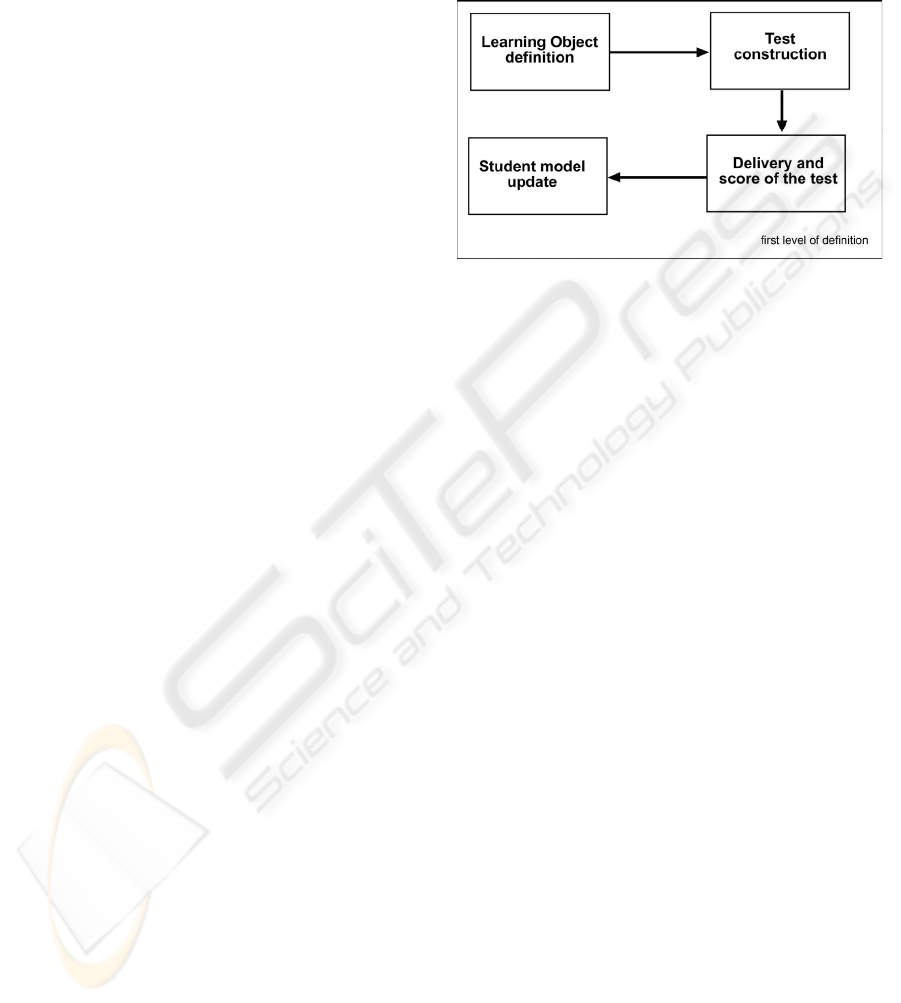

4.1 First Level of Definition

We first explain the first, innermost level of definition (Fig. 1), with reference to the complete figure shown for the third level of definition (Fig. 3):

Figure 1: First level of definition.

a) Learning Object Definition: The process starts with the definition of the main component of a test: the learning object that will contain a single assessment item or question. Defining single questions ensures a suitable granularity of the elements for the next step of the process, the construction of the test itself. To ensure the reusability, interoperability, durability, and accessibility of these objects, they will be defined using open standards, following the definition of semantic objects using XML and the IMS and IMS QTI specifications.

b) Test Construction: Here the assessment items are

selected from the repository to construct the test

that will be delivered to the students, selecting

the questions based on the subject and unit that

will be evaluated. At this point of the process,

the question items are packaged following the

SCORM specification.

c) Delivery and scoring of the test: Once we have a group of assessment items that make up a deliverable test, it is time to present it to the student through an LMS. The adaptation takes place during this step, as an interactive process. The responses to each question are saved to obtain the final score for each student.

d) Student Model Update: In the LMS, the final

score is taken into account to update the student

model so the learning environment could adapt

to the student in the next interaction process.
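As a rough illustration of how the four steps fit together, the following minimal Python sketch models them with plain data structures. All class names, function names, and sample questions are hypothetical and are not part of the proposed tool; the repository is a simple list, and the score is assumed to be the fraction of correct responses.

```python
from dataclasses import dataclass, field


@dataclass
class AssessmentItem:
    """Step a: one learning object holding a single question."""
    item_id: str
    subject: str
    unit: str
    prompt: str
    correct_answer: str


@dataclass
class StudentModel:
    """Hypothetical student record kept by the LMS (step d)."""
    student_id: str
    scores: dict = field(default_factory=dict)


def build_test(repository, subject, unit):
    """Step b: select the items for the subject and unit to be evaluated."""
    return [i for i in repository if i.subject == subject and i.unit == unit]


def score_test(test, responses):
    """Step c: compare the saved responses with the keys; return the fraction correct."""
    if not test:
        return 0.0
    correct = sum(1 for i in test if responses.get(i.item_id) == i.correct_answer)
    return correct / len(test)


def update_student_model(model, subject, unit, score):
    """Step d: record the final score so a later session can adapt to it."""
    model.scores[(subject, unit)] = score
    return model


# Illustrative data only.
repository = [
    AssessmentItem("q1", "databases", "sql", "Which clause filters rows?", "WHERE"),
    AssessmentItem("q2", "databases", "sql", "Which clause sorts rows?", "ORDER BY"),
    AssessmentItem("q3", "networks", "tcp", "Which layer is TCP in?", "transport"),
]
test = build_test(repository, "databases", "sql")
final_score = score_test(test, {"q1": "WHERE", "q2": "GROUP BY"})
student = update_student_model(StudentModel("s-001"), "databases", "sql", final_score)
```

Keeping each question as its own object is what lets the test construction step filter by subject and unit without touching the items themselves.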

WEBIST 2006 - E-LEARNING


4.2 Second Level of Definition

Following the four quadrants of the first level of definition, we now explain them at the second level (Fig. 2), in more concrete terms:

Figure 2: Second level of definition.

a) Learning Object Definition: At this level we define the first interface of the system, which allows the educational instructor or teacher to enter each assessment item directly or by linking it to external sources, and to assign a difficulty category that will help in the adaptation process. After that, the assessment item is packaged with a manifest (using the IMS CP/LD specification), the links to external sources, the assessment item itself (using IMS QTI), and the definition of difficulty for the assessment item (using XML), and is stored in a semantic object repository.

b) Test Construction: The second interface of the system allows the instructor or teacher to generate the test by entering the subject, and the unit of that subject, to be evaluated. This process constructs the deliverable test by selecting all the assessment assets from the semantic object repository and transforming all the assessment items into an XML format. As a result, we have a single file in XML format containing all the assessment items from which individual tests are constructed, which makes an adaptation process possible.

c) Delivery and scoring of the test: In the ALE, the test is presented to the student from the final XML file containing all the assessment items. A third interface allows students to identify themselves. In this phase, the students answer the questions as they are presented by the system. At the end of the exam, the final score can be shown to the student.

d) Student Model Update: The ALE, according to its own process, can update the student model and the curriculum to save the final score of the test taken by the student.
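The packaging described in steps a) and b) could be sketched as follows with Python's standard xml.etree.ElementTree module. This is only an illustration under stated assumptions: the element and attribute names (itemPackage, manifest, resource, difficulty, links) are invented for the example and do not follow the IMS CP, IMS LD, or IMS QTI schemas, which a real implementation would have to respect.

```python
import xml.etree.ElementTree as ET


def build_item_package(item_id, prompt, choices, correct, difficulty, links=()):
    """Bundle one assessment item with its metadata in a single XML element.

    Element names are illustrative only; they do not follow the IMS schemas.
    """
    package = ET.Element("itemPackage", {"id": item_id})

    # Manifest listing the parts of the package (stand-in for IMS CP).
    manifest = ET.SubElement(package, "manifest")
    for part in ("item", "difficulty", "links"):
        ET.SubElement(manifest, "resource", {"ref": part})

    # The question itself (stand-in for an IMS QTI item).
    item = ET.SubElement(package, "item")
    ET.SubElement(item, "prompt").text = prompt
    for key, text in choices.items():
        attrs = {"id": key, "correct": str(key == correct).lower()}
        ET.SubElement(item, "choice", attrs).text = text

    # Difficulty category assigned by the instructor.
    ET.SubElement(package, "difficulty").text = difficulty

    # Links to external sources.
    links_el = ET.SubElement(package, "links")
    for href in links:
        ET.SubElement(links_el, "link", {"href": href})
    return package


def build_test_file(packages, subject, unit):
    """Merge item packages into one XML document for a subject and unit."""
    test = ET.Element("test", {"subject": subject, "unit": unit})
    test.extend(packages)
    return ET.tostring(test, encoding="unicode")


# Illustrative data only.
pkg = build_item_package(
    "q1",
    "Which clause filters rows?",
    {"A": "WHERE", "B": "ORDER BY"},
    correct="A",
    difficulty="easy",
)
xml_text = build_test_file([pkg], "databases", "sql")
```

Storing the difficulty next to the item, rather than inside the question text, keeps it available to whichever adaptation process later reads the single XML file.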

4.3 Third Level of Definition

Figure 3: Third level of definition.

At this level (Fig. 3), we outline the interaction and adaptation process. In the event that the ALE does not provide an adaptation process for external assets, we could suggest one, following the logic (Eri-CAE Network, 2003) given below:

a) All items that have not yet been administered are

evaluated to determine which will be the best

one to administer next, given the currently

estimated ability level.

b) The “best” next item is administered and the

student responds.

c) A new ability level is computed based on the

responses of the student.

d) Steps a to c are repeated until a stopping criterion is met.
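The loop formed by steps a) to d) can be sketched in Python as below. Several parts are assumptions made only for illustration: item difficulty and ability are taken to lie on a common 0 to 1 scale, the "best" item is taken to be the one whose difficulty is closest to the current ability, the stopping criterion is a fixed number of items, and the ability update is a simple halving-step placeholder rather than the equations of the MANIC system.

```python
def pick_next_item(remaining, ability):
    """Step a: choose the unadministered item whose difficulty is closest to ability."""
    return min(remaining, key=lambda item: abs(item["difficulty"] - ability))


def update_ability(ability, correct, step):
    """Step c: placeholder update rule (an assumption, not the MANIC equations)."""
    new_ability = ability + step if correct else ability - step
    return max(0.0, min(1.0, new_ability))


def run_adaptive_test(items, answer_fn, start_ability=0.5, max_items=5):
    """Run steps a to c until the stopping criterion (step d) is met."""
    remaining = list(items)
    ability, step = start_ability, 0.25
    administered = []
    while remaining and len(administered) < max_items:  # step d
        item = pick_next_item(remaining, ability)       # step a
        remaining.remove(item)
        correct = answer_fn(item)                       # step b
        ability = update_ability(ability, correct, step)  # step c
        step /= 2  # smaller corrections as evidence accumulates
        administered.append(item["id"])
    return ability, administered


# Illustrative data: a simulated student who answers correctly
# whenever the item difficulty is at most 0.6.
items = [{"id": f"q{n}", "difficulty": d}
         for n, d in enumerate([0.1, 0.3, 0.5, 0.7, 0.9], start=1)]
ability, order = run_adaptive_test(items, lambda item: item["difficulty"] <= 0.6)
```

The starting ability is passed in as a parameter so that it can come from whatever initial estimate the environment provides.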

Questions would be presented randomly to discourage cheating. The new estimated ability level is computed during the quiz sessions using the equations (Stern and Woolf, 1998) employed in the MANIC system (op. cit.) (see table 1).

To determine the first OldValue at the beginning of the test, the student is given a pre-test question; after that, this value is updated after every question, based on the student’s response.

This could be an automatic process independent of the ALE, performed by a software agent. If the test is able to use external multimedia assets, it is also possible to use an external agent stored on the same server, which starts the adaptation process on the client’s machine.

5 CONCLUSIONS AND FUTURE WORK

Online assessment is an important step in the e-learning process because it gives appropriate feedback to all participants in the process, helping to improve the learning and teaching experience.

In this paper, we wanted to emphasize the role of assessment within the e-learning process and to identify its importance for the main elements that participate in this process: the educational content and adaptation process, the users or students, and the teachers and assessors. We think that the assessment activity takes place at a specific point of the process, as shown in figure I, and we conceptualize the activity as the link that closes the chain of the e-learning process.

Adaptability is another key factor in assessment. Given that assessment is an important element of the e-learning process and that this process aims to be interoperable, the assessment tool could be used by different educational content administrators with different conceptualizations and ways of designing and applying a test for their students. To address this situation, it is necessary to develop an assessment tool that offers several ways to design a test, with different types of resources, different kinds of assessments, groups of students, kinds of questions, schedule management, etc.

Under this conceptualization, we want to create an Adaptive Assessment Tool (AAT) that takes into account the specific characteristics of the HyCo system and is an intrinsic part of it.

ACKNOWLEDGMENTS

We thank the GRIAL group (Research Group in Interaction and eLearning) of the University of Salamanca for their contributions and ideas for the development of this work.

In addition, we thank the Education and Science Ministry of Spain, National Program in Technologies and Services for the Information Society, Ref.: TSI2005-00960.

Héctor Barbosa thanks the National System of

Technological Institutes (SNIT – Mexico) for its

financial support.

REFERENCES

Backroad Connections Pty Ltd. (2003). Assessment and Online Teaching. Australian Flexible Learning Framework, Quick Guides Series. 3 (1), 20.

Barbosa, H., García, F. (2005). Importance of the online assessment in the e-learning process. 6th International Conference on Information Technology-based Higher Education and Training ITHET 2005. CD version.

Booth, R., Berwyn, C. (2003). The development of quality online assessment in vocational education and training. Australian Flexible Learning Framework. 1 (1), 17.

Eri-CAE. (2003). Computer adaptive testing tutorial. Available: http://www.ericae.net. Last accessed 17 Sep 2005.

IMS Global Learning Consortium. (2002). IMS Question & Test Interoperability: ASI Information Model Specification. Available: http://www.imsproject.org/question/qtiv1p2/imsqti_asi_infov1p2.html. Last accessed 20 Nov 2005.

Stern, M. K., Woolf, B. P. (1998). Curriculum sequencing in a Web-based tutor. Lecture Notes in Computer Science. 1452 (1), 574-583.

Thorpe, M. (2004). Assessment and ‘third generation’ distance education. Exploring assessment in flexible delivery of vocational education and training program, Australian National Training Authority. 2 (1), 20.
