A NOVEL HAPTIC INTERFACE FOR FREE LOCOMOTION IN EXTENDED RANGE TELEPRESENCE SCENARIOS

Patrick Rößler, Timothy Armstrong, Oliver Hessel, Michael Mende and Uwe D. Hanebeck

Intelligent Sensor-Actuator-Systems Laboratory

Institute of Computer Science and Engineering

Universität Karlsruhe (TH)

Karlsruhe, Germany

Keywords:

Extended range telepresence, haptic interface, motion compression.

Abstract:

Telepresence gives a user the impression of actually being present in a distant environment. A mobile teleoperator acts as a proxy in this target environment, replicates the user’s motion, and records sensory information, which is transferred to the user and displayed in real time. As a result, the user is immersed in the target environment. The user can then control the teleoperator by walking naturally. Motion Compression, a nonlinear mapping between the user’s and the robot’s motion, allows exploration of large target environments even from small user environments.

For manipulation tasks, haptic feedback is important. However, current haptic displays do not allow wide area motion. In this work, we present our design of a novel haptic display for simultaneous wide area motion and haptic interaction.

1 INTRODUCTION

Telepresence aims at giving a human user the impression of actually being present in a remote environment, the target environment. To achieve this, a robot, the teleoperator, is placed in the target environment; it is typically a wheel-based platform equipped with a camera head. Optionally, the teleoperator may be equipped with a manipulator arm.

To control the robot, the user’s head motion is tracked and transferred to the teleoperator, which replicates this motion. The teleoperator records sensory information and sends it to the human user in real time. This information is displayed to the user on immersive displays, e.g., a head-mounted display for video and headphones for audio, in such a way that the user only perceives the target environment. As a result, the user is immersed in the target environment, i.e., he feels present.

The more of the user’s senses are telepresent, the more deeply he is immersed in the target environment. Fig. 1 shows the senses most important for telepresence. Since proprioception, the sense of self-motion, is especially important for human navigation and wayfinding (Bakker et al., 1998), extended range telepresence allows the user to navigate the teleoperator by natural walking rather than by using devices like joysticks (Stemmer et al., 2004) or steering wheels (Bunz et al., 2004).

Figure 1: Senses of interest in telepresence: visual, auditive, haptic, and proprioceptive. The photo shows the setup used in (Rößler et al., 2005b).

The target environment may be of arbitrary size, but the user’s real environment, the user environment, is typically limited, for example by the range of the tracking system or by the available space. To allow the user to explore target environments that are larger than the user environment, additional processing of his motion data is needed. Motion Compression (Nitzsche et al., 2004) provides a nonlinear mapping between the path in the user environment and the path in the target environment. It guarantees a high degree of immersion by providing almost consistent proprioceptive and visual feedback.

Rößler P., Armstrong T., Hessel O., Mende M. and Hanebeck U. D. (2006). A NOVEL HAPTIC INTERFACE FOR FREE LOCOMOTION IN EXTENDED RANGE TELEPRESENCE SCENARIOS. In Proceedings of the Third International Conference on Informatics in Control, Automation and Robotics, pages 148-153.

DOI: 10.5220/0001214101480153

Copyright © SciTePress

A system such as the one described above allows telepresent exploration of large target environments (Rößler et al., 2005b). However, to perform manipulation tasks in the target environment, haptic feedback is needed.

Haptic feedback can be achieved by a robotic system that applies forces to the human user, e.g., to his hand. Most current haptic interfaces, like the commercially available Phantom (SensAble Technologies, 1996), use classic serial kinematics. Even industrial robots are sometimes used as haptic interfaces (Hoogen and Schmidt, 2001).

Besides these classical approaches, there is also a group of interfaces based on completely different principles, for example magnetic levitation (Unger et al., 2004). Common to all such systems is a very limited work space. Therefore, they are not suited for extended range telepresence, where the user needs to move freely in the user environment.

One approach to the problem of simultaneous haptic interaction and wide area motion is the use of ungrounded haptic displays, e.g., exoskeletons (Bergamasco et al., 1994), which are carried along by the user. However, with these devices, haptic rendering is of significantly lower quality than with grounded displays (Richard and Cutkosky, 1997).

As various applications need large haptic interfaces, a number of different approaches have been developed, each fitted to its application. Some of these displays, however, only extend the height of the work space (Borro et al., 2004). A system based on a wire-pull mechanism (Bouguila et al., 2000) allows some translatory motion in the user environment. However, rotational motion is highly restricted, as the user gets caught in the wires. Hyper-redundant serial kinematics (Ueberle et al., 2003) feature a large work space that allows translatory and rotational motion, but they still restrict free motion in the user environment.

The only group of systems that allows haptic interaction during wide area motion is that of mobile haptic interfaces (Nitzsche and Schmidt, 2004). These are typically small conventional haptic devices mounted on mobile platforms. However, they are hard to control, and their display quality depends heavily on the localization of the mobile platform.

In this paper, we present our patent-pending design of a large haptic interface that allows simultaneous haptic interaction and wide area motion. The design provides a high degree of stiffness and makes the system fairly easy to control. Although it was designed especially for use in systems with Motion Compression, the design is more general and opens up a wide variety of applications besides robot teleoperation, for example in virtual reality or entertainment (Rößler et al., 2005a).

The remainder of this paper is structured as follows. In Sec. 2, we review Motion Compression, as this algorithm heavily affects the requirements for the haptic display, which are described in Sec. 3. The mechanical setup of the display is given in Sec. 4 along with its kinematic properties. Sec. 5 describes the distributed electronic control system, which was developed to control this large robotic system with many sensor/actuator subsystems. The safety architecture necessary for safe operation of such a system is presented in Sec. 6. Finally, Sec. 7 draws conclusions and discusses future work.

2 MOTION COMPRESSION

Motion Compression is an algorithmic framework

that provides a nonlinear transformation between the

user’s path in the user environment and the mo-

bile teleoperator’s path in the target environment. It

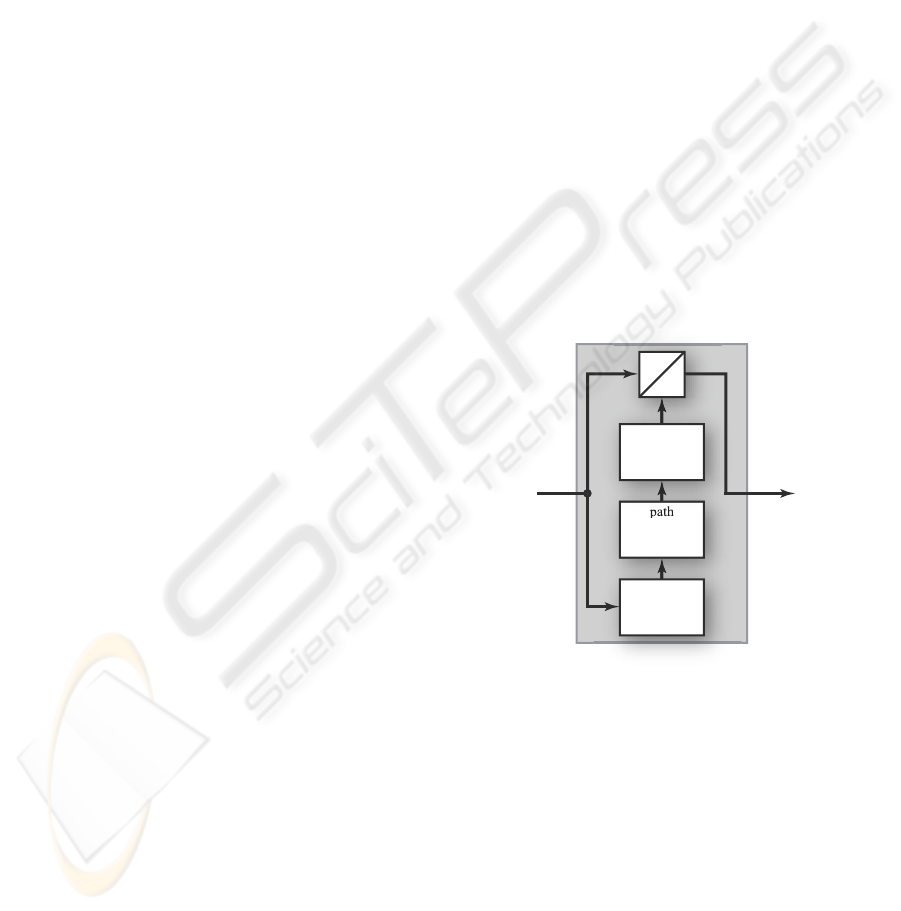

consists of three modules as shown in Fig. 2.

Figure 2: Overview of the Motion Compression framework (blocks: path prediction, path transformation, user guidance; signals: user position, transformed position; labels U and T).

Path prediction tries to predict the desired path of the user in the target environment. This prediction is based on tracking data and, if available, information on the target environment. The resulting path is called the target path.

In the next step, path transformation, the target

path is transformed in such a way that it fits in the user

environment. The transformed path features the same

length and turning angles, while path curvature dif-

fers. The resulting user path minimizes this path cur-

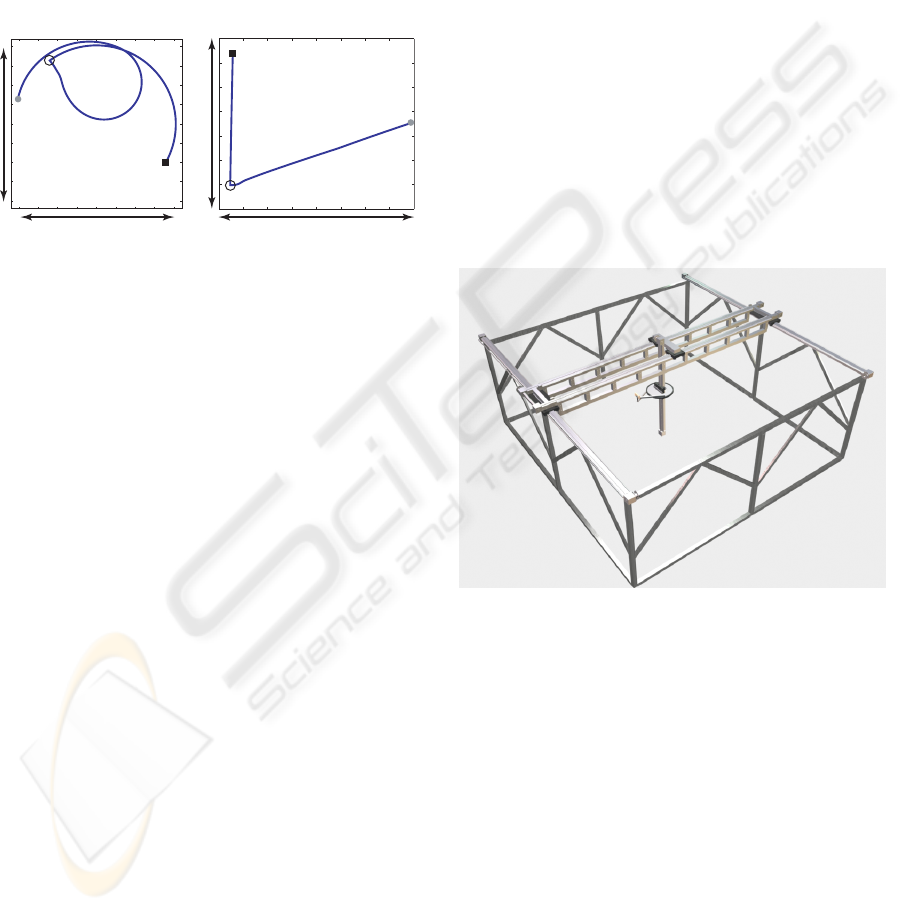

vature difference. A target path and a corresponding

user path are shown in Fig. 3.
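As a minimal illustration of the path-transformation idea, the Python sketch below treats only the simplest case, which is our own simplification and not the full optimization of (Nitzsche et al., 2004): a straight target path is bent onto a circle of radius r centred in the user environment. Arc length on the circle equals the distance walked along the straight line, so length is preserved, while the curvature changes from 0 to 1/r. In a 4 m × 4 m room the largest such circle has r = 2 m, giving a curvature difference of 0.5 m⁻¹. The helper names are hypothetical.

```python
import math


def user_position_on_circle(s: float, radius: float,
                            center: tuple = (0.0, 0.0)) -> tuple:
    """Map the distance s walked along a straight target path onto a circular
    user path of the given radius (hypothetical helper, simplified case only).

    Arc length on the circle equals s, so path length is preserved, while the
    curvature changes from 0 (straight line) to 1 / radius.
    """
    phi = s / radius                      # angle swept on the circle after s metres
    cx, cy = center
    return (cx + radius * math.cos(phi), cy + radius * math.sin(phi))


def curvature_difference(radius: float) -> float:
    """Curvature deviation between a straight target path and the circular user path."""
    return 1.0 / radius


# Largest circle that fits into a square 4 m x 4 m user environment centred at the origin.
SIDE = 4.0
R_MAX = SIDE / 2.0

print(curvature_difference(R_MAX))        # 0.5 (1/m)

# Walking 8 m straight ahead in the target environment keeps the user
# on the circle of radius 2 m, i.e. inside the room.
x, y = user_position_on_circle(8.0, R_MAX)
print(round(math.hypot(x, y), 9))         # 2.0
```

With this mapping the user could in principle walk straight ahead indefinitely without leaving the room; target paths with turns and varying curvature require the full optimization.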


Finally, the user guidance module guides the user along the transformed path, while he has the impression of walking on the original path. User guidance exploits some properties of natural human wayfinding.

The result of these three modules is a linear transformation between the user’s position in the user environment and the teleoperator’s position in the target environment at any position and time. This transformation can also be used to transform the user’s hand position, or to transform force vectors recorded by the teleoperator back into the user environment.
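The statement that one transformation serves positions and forces alike can be made concrete. The Python sketch below assumes, for illustration only, that at a given instant the transformation is a rotation by an angle θ about the vertical axis plus a planar translation t, with the vertical coordinate unchanged; θ, t, and the function names are hypothetical. Points such as the hand position are rotated and translated, whereas force vectors, being free vectors, are only rotated back by the transpose Rᵀ.

```python
import math


def rotation(theta: float):
    """2x2 rotation matrix for a rotation by theta (rad) about the vertical axis."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s), (s, c))


def hand_user_to_target(p_user, theta, t):
    """Map a point, e.g. the hand position, from user to target coordinates:
    p_target = R(theta) p_user + t."""
    (a, b), (c, d) = rotation(theta)
    x, y = p_user
    return (a * x + b * y + t[0], c * x + d * y + t[1])


def force_target_to_user(f_target, theta):
    """Map a force measured by the teleoperator back into the user frame.
    Forces are free vectors, so only the inverse rotation R(theta)^T applies."""
    (a, b), (c, d) = rotation(theta)
    fx, fy = f_target
    return (a * fx + c * fy, b * fx + d * fy)


# Hypothetical snapshot of the current transformation: 90 degree rotation, offset (3 m, 1 m).
theta, t = math.pi / 2, (3.0, 1.0)
print(hand_user_to_target((1.0, 0.0), theta, t))   # approximately (3.0, 2.0)
print(force_target_to_user((0.0, 5.0), theta))     # approximately (5.0, 0.0)
```

Because Rᵀ is the inverse of R, a force keeps its magnitude when it is mapped back into the user environment.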

Figure 3: The corresponding paths in both environments. (a) User path in the user environment (4 m × 4 m). (b) Target path in the target environment (8 m × 7 m).

3 REQUIREMENTS

As a result of using Motion Compression, requirements arise for the kinematics of the haptic display. To control the robot by natural walking, the user needs a work space that covers the whole user environment of 4 × 4 m², in which he may move at a natural speed of up to 2 m/s. In particular, rotational motion around the vertical axis must be unconstrained and unlimited. To give the user the possibility of manipulation, the work space should have a height of at least 2 m.

As this work focuses on haptic sensation during wide area motion and not on precise haptic manipulation, only forces have to be displayed; moments are of lesser importance. Thus, the end-effector of the display needs four degrees of freedom: three translatory and one rotational around the vertical axis.

A robotic system of the size described above has long distances between its sensor/actuator units. Thus, a distributed electronic control system is needed that allows, for example, decentralized pre-processing of sensor data. These decentralized units have to be connected to a central control unit by a bus system that offers high bandwidth and supports a high number of sensor/actuator subsystems.

As the user will be in direct contact with a robotic system moving at high speeds, a safety architecture is needed that reduces the danger of physical harm to a minimum. This is all the more important as the user is immersed in the remote environment and does not see the haptic display itself. In case of a malfunction of any part of the system, all subsystems, especially all moving parts, have to stop immediately.

4 MECHANICAL SETUP

Fine haptic rendering and wide area motion require very different characteristics regarding both mechanics and control. We therefore follow the idea of mobile haptic interfaces and separate the haptic display device from wide area motion. The motion subsystem is realized as a portal carrier system with three linear degrees of freedom that moves the haptic end-effector along with the user. The haptic end-effector is realized as a parallel SCARA manipulator. Fig. 4 gives an impression of the complete setup.

Figure 4: An impression of the complete setup with portal carrier and parallel SCARA manipulator.

4.1 Portal Carrier

To provide the desired work space, the portal carrier has a size of approximately 5 × 5 × 2 m³. It has three translatory degrees of freedom, which are realized by three independent linear drives.

These linear drives are built using a commercially available carriage-on-rail system, in which the carriages are driven by toothed belts. While the x- and y-axes each consist of two parallel rails for stability reasons, the z-axis consists of only a single rail. As a result, the system is driven by five three-phase AC motors, which allow a maximum speed of 2 m/s and an acceleration of 2 m/s². As the configuration space equals Cartesian space, the forward kinematics can be expressed by an identity matrix. Thus, control is extremely easy to handle and very robust.

ICINCO 2006 - ROBOTICS AND AUTOMATION
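Because the forward kinematics are the identity, motion planning for the portal carrier reduces to independent one-dimensional profiles per axis. The Python sketch below estimates the minimum rest-to-rest time from the stated limits of 2 m/s and 2 m/s², assuming a trapezoidal velocity profile (the drives' actual motion profile is not specified in the text) and that each axis moves at its own limits; the function names are hypothetical. Crossing the 4 m user environment then takes 1 s to accelerate, 1 s to cruise, and 1 s to decelerate, 3 s in total.

```python
import math

V_MAX = 2.0   # m/s, maximum carriage speed stated for the portal carrier
A_MAX = 2.0   # m/s^2, maximum carriage acceleration stated for the portal carrier


def forward_kinematics(q):
    """Portal carrier: configuration space equals Cartesian space (identity matrix)."""
    return tuple(float(v) for v in q)


def axis_move_time(distance: float, v_max: float = V_MAX, a_max: float = A_MAX) -> float:
    """Minimum rest-to-rest time of one linear axis with bounded speed and acceleration."""
    d = abs(distance)
    if d >= v_max ** 2 / a_max:            # trapezoid: accelerate, cruise, decelerate
        return d / v_max + v_max / a_max
    return 2.0 * math.sqrt(d / a_max)      # triangle: v_max is never reached


def move_time(start, goal) -> float:
    """The axes are independent, so the slowest axis determines the move time."""
    return max(axis_move_time(g - s) for s, g in zip(start, goal))


print(forward_kinematics([1.0, 2.5, 0.8]))           # (1.0, 2.5, 0.8)
print(move_time((0.0, 0.0, 1.0), (4.0, 4.0, 1.0)))   # 3.0 s across the 4 m user environment
```

Under these assumptions, hand accelerations well above 2 m/s² cannot be followed by the carrier alone, which is consistent with the motivation for the faster SCARA stage described next.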

4.2 Parallel SCARA

The acceleration of the human hand is typically much higher than the acceleration of the portal carrier. To allow natural hand motion, a fast and lightweight kinematic chain is attached to the carriage of the z-axis. A SCARA mechanism with two degrees of freedom, acting parallel to the x-y plane, is sufficient, as motion in the z-direction is of minor importance. A SCARA mechanism covers planar motion, which can be described by means of polar coordinates.

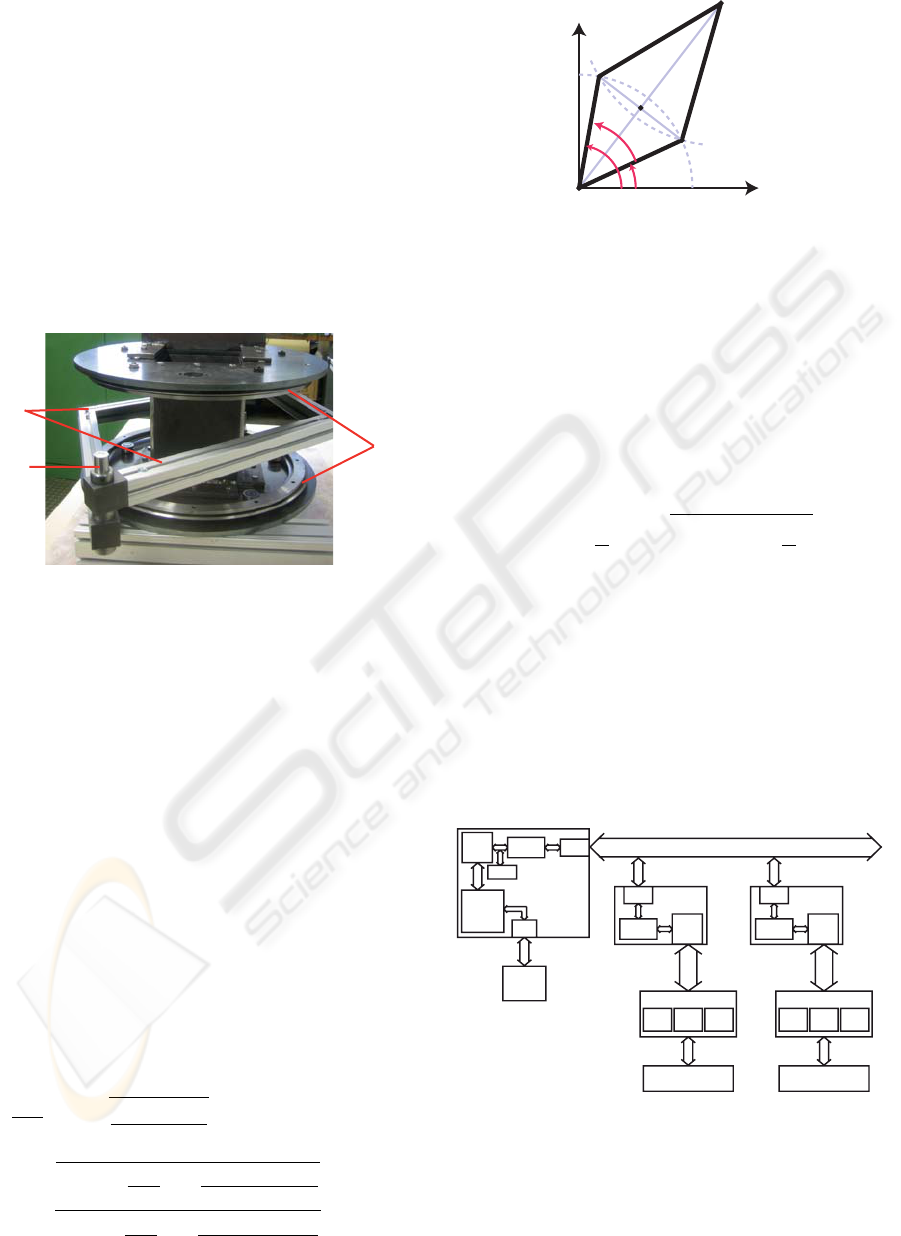

outer

segments

end

effector

circula

r

drives

Figure 5: The parallel SCARA. The inner segments are re-

placed by circular drives. Work space was optimized by

adding an additional angle to the outer segments.

We designed a parallel SCARA manipulator, which has the advantage that both motors needed to drive the system can be integrated into the base. As a result, all moving joints are passive, which minimizes the moving mass and thus improves the dynamics. To allow infinite circular motion, the inner segments have been replaced by circular drives, as shown in Fig. 5.

Although parallel SCARA devices have been used as haptic interfaces before (Campion et al., 2005), our approach is novel: the circular drives significantly reduce the complexity of the forward kinematics, as both inner segments have their origin in exactly the same location.

The forward kinematics of the parallel SCARA follow from Fig. 6, where A, B, and C are passive joints and only the angles α and β are actively driven. The end-effector position C is given by

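$$
\overrightarrow{OC} = \frac{l_1}{2}\begin{pmatrix}\cos\alpha+\cos\beta\\ \sin\alpha+\sin\beta\end{pmatrix} + \sqrt{l_2^2 - l_1^2\sin^2\frac{\beta-\alpha}{2}}\;\begin{pmatrix}\cos\frac{\alpha+\beta}{2}\\ \sin\frac{\alpha+\beta}{2}\end{pmatrix}, \tag{1}
$$

where $l_1 < l_2$ are the lengths of the inner and outer segments, respectively; this ordering keeps the square root real for all α and β. The first term is the midpoint of the passive joints A and B, and the second term points from this midpoint to C along the bisector of the two inner segments.

Figure 6: Kinematics of the parallel SCARA (base O; passive joints A, B, C; actuated angles α, β; angle ψ; axes x, y).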
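Eq. (1) translates directly into code. The Python sketch below uses hypothetical segment lengths l1 = 0.2 m and l2 = 0.3 m, since the text gives no dimensions, and checks the defining geometric property of the mechanism: C lies at distance l2 from both passive joints A and B. The function name is our own.

```python
import math


def scara_forward(alpha: float, beta: float, l1: float, l2: float):
    """End-effector position C of the parallel SCARA according to Eq. (1).

    alpha, beta: actuated angles of the two inner segments (rad)
    l1, l2:      lengths of inner and outer segments, with l1 < l2
    """
    if not 0.0 < l1 < l2:
        raise ValueError("Eq. (1) requires 0 < l1 < l2")
    # First term: midpoint of the passive joints A and B
    mx = 0.5 * l1 * (math.cos(alpha) + math.cos(beta))
    my = 0.5 * l1 * (math.sin(alpha) + math.sin(beta))
    # Second term: distance from that midpoint to C along the bisector (alpha + beta) / 2
    h = math.sqrt(l2 ** 2 - (l1 * math.sin((beta - alpha) / 2.0)) ** 2)
    gamma = 0.5 * (alpha + beta)
    return (mx + h * math.cos(gamma), my + h * math.sin(gamma))


# Hypothetical segment lengths (the text gives no dimensions): l1 = 0.2 m, l2 = 0.3 m.
L1, L2 = 0.2, 0.3
alpha, beta = math.radians(10.0), math.radians(80.0)
C = scara_forward(alpha, beta, L1, L2)
A = (L1 * math.cos(alpha), L1 * math.sin(alpha))
B = (L1 * math.cos(beta), L1 * math.sin(beta))
# Both outer segments must have length l2: |AC| = |BC| = l2.
print(math.dist(A, C), math.dist(B, C))
```

Since both inner segments start at O, the whole computation needs only the two actuated angles, which reflects the simplification attributed to the circular drives.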

When attaching the parallel SCARA to the portal carrier, there is a redundancy that may be resolved by optimizing the manipulability of the SCARA robot. The SCARA robot’s manipulability is optimal when its radial travel is in its center position. The SCARA robot’s radial travel R depends only on the angle ψ = β − α and is given as

$$
R = l_1\cos\frac{\psi}{2} + \sqrt{l_2^2 - l_1^2\sin^2\frac{\psi}{2}}. \tag{2}
$$
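Eq. (2) is consistent with Eq. (1): the midpoint of A and B has signed length l1 cos(ψ/2) along the bisector direction (α + β)/2, and C lies a further √(l2² − l1² sin²(ψ/2)) out along that same direction. One possible use for the redundancy resolution described above, which is our illustration rather than the authors' stated controller, is to take the middle of the radial travel as the target radius and solve Eq. (2) for ψ. On 0 ≤ ψ ≤ π, R decreases monotonically from l1 + l2 to √(l2² − l1²) in this idealized geometry (the added angle on the real outer segments, Fig. 5, changes the actual range), so bisection suffices. The segment lengths and function names are hypothetical.

```python
import math


def radial_travel(psi: float, l1: float, l2: float) -> float:
    """Radial travel R of the parallel SCARA as a function of psi = beta - alpha, Eq. (2)."""
    return l1 * math.cos(psi / 2.0) + math.sqrt(l2 ** 2 - (l1 * math.sin(psi / 2.0)) ** 2)


def psi_for_radius(r_target: float, l1: float, l2: float, tol: float = 1e-12) -> float:
    """Invert Eq. (2) on 0 <= psi <= pi, where R decreases monotonically, by bisection."""
    lo, hi = 0.0, math.pi
    if not radial_travel(hi, l1, l2) <= r_target <= radial_travel(lo, l1, l2):
        raise ValueError("target radius outside the range covered by 0 <= psi <= pi")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if radial_travel(mid, l1, l2) > r_target:
            lo = mid      # end-effector still too far out: open the angle further
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical segment lengths; the text gives no dimensions.
L1, L2 = 0.2, 0.3
r_max = radial_travel(0.0, L1, L2)        # segments stretched: l1 + l2
r_min = radial_travel(math.pi, L1, L2)    # sqrt(l2^2 - l1^2) in this idealised geometry
r_center = 0.5 * (r_min + r_max)
psi_center = psi_for_radius(r_center, L1, L2)
print(f"R in [{r_min:.4f}, {r_max:.4f}] m, centre {r_center:.4f} m "
      f"at psi = {math.degrees(psi_center):.1f} deg")
```

Under this scheme, the portal carrier would then place the SCARA base at distance r_center from the desired end-effector position, leaving equal radial margin inward and outward.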

5 DISTRIBUTED ELECTRONICS

To control the haptic display at a high update rate, we designed a distributed electronic control system. This system is not limited to controlling the haptic display described in this paper; it is modular and reconfigurable and can thus be adapted to other robotic systems.

Figure 7: Structure of the control system (labeled blocks: PC connected via USB to the Profibus master, which contains a µC, a DSP, SPI, ASPC2, and SRAM; slave nodes, each with a µC, SPC3, and an extension board with ADC and OP connected to a sensor/actuator; nodes linked via RS485).

As shown in Fig. 7, the control system consists of one master control node with high processing power and a number of slave nodes, one for each sensor/actuator subsystem. These nodes are connected with PROFIBUS¹, which provides high bandwidth over long distances.

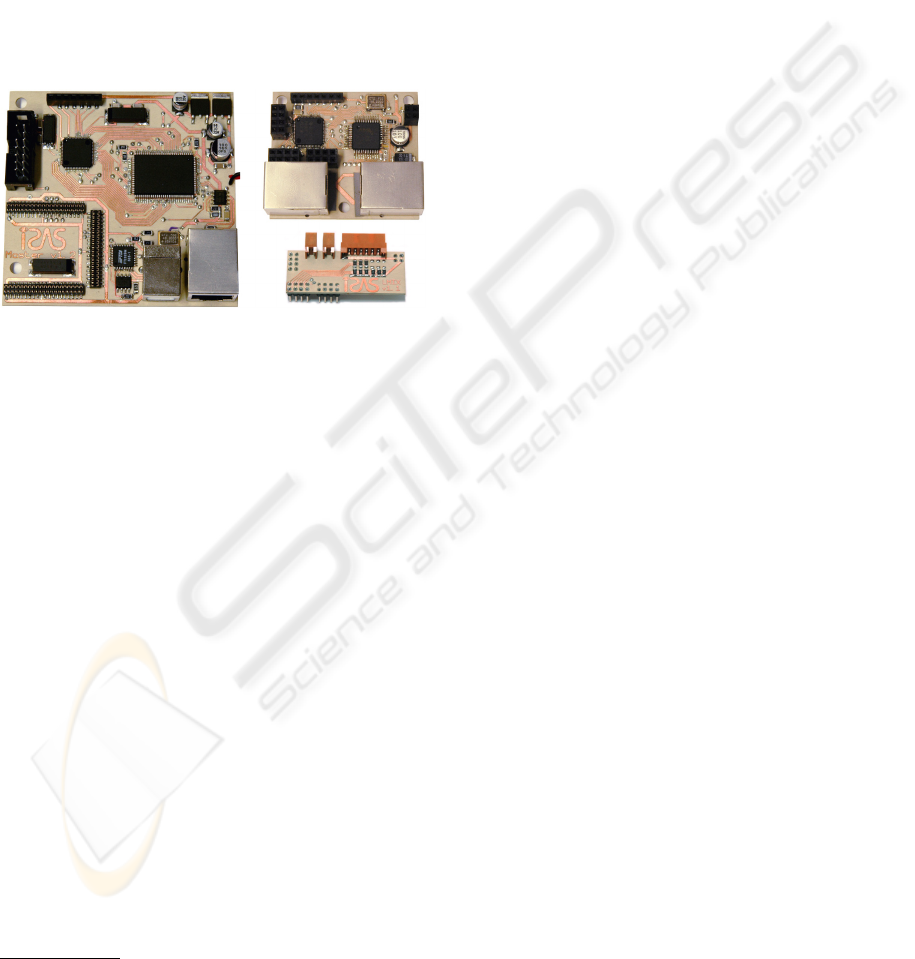

The master node, shown on the left of Fig. 8, is equipped with an Analog Devices Blackfin DSP² header board (not shown) to provide enough processing power for control. On this DSP, all sensor data is gathered and the control outputs for all actuators are generated. The DSP is connected via its integrated serial port interface to a TI MSP430 microcontroller³, which handles communication with the slave nodes. To communicate with the core of the telepresence system running on a standard PC, the master node also features a USB connection.

Figure 8: The master node (left), a slave node (top right), and the header board for one sensor (bottom right).

The slave node is a standardized unit that is identical for all sensor/actuator subsystems. Each slave node is equipped with a TI MSP430 microcontroller that has enough processing power to pre-process sensor data or to generate control signals for the actuator units.

The slave nodes are fitted to their specific task by adding an extension board, which provides the interfaces to the sensors and actuators, and by uploading the appropriate software. The right-hand side of Fig. 8 shows a slave node and one extension board for a sensor system.

In general, each sensor and each actuator has its own slave node, because the distances between the subsystems are very large. However, if a sensor and the corresponding actuator are close to each other, sensor pre-processing and actuator control can be integrated on one slave node. This is the case for each of the SCARA robot’s motors, so inner control loops can run directly on these slave nodes.

¹ http://www.profibus.com/pb/

² http://www.analog.com/processors/processors/blackfin/

³ http://www.ti.com/

6 SAFETY ARCHITECTURE

To prevent accidents when using the haptic interface, a safety architecture was designed and implemented. It consists of three levels that cover different sources of failure.

The highest level is the external level. This level accepts user inputs by means of emergency buttons and a dead-man switch at the end-effector. It also includes mechanical stop switches that protect the system from destruction in case of a failure. If the external level detects a failure, it triggers the emergency stop program of all actuators and powers the system down. As the motors of the portal carrier have integrated power-off brakes, the system stops immediately.

On the inter-node level, the nodes of the control system monitor each other. The master node constantly checks the alive status of all slave nodes based on timeouts. If any node does not respond to the queries in time, an emergency stop is initiated. To also guarantee an emergency stop in case of a master failure, the actuator nodes stop their actuators immediately if the master node does not constantly refresh its commands.
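The inter-node checks amount to two timeout monitors. The Python sketch below models them under stated assumptions: the class names, the latching behaviour, the 10 ms timeouts, the node identifiers, and the injectable clock are hypothetical and are not taken from the nodes' firmware. The master latches an emergency stop when any slave misses its deadline, and each actuator node drops its output to zero when the master stops refreshing commands, which is what covers a master failure.

```python
import time
from typing import Callable, Iterable


class MasterMonitor:
    """Master side: every slave must answer alive queries within `timeout` seconds."""

    def __init__(self, slave_ids: Iterable[str], timeout: float,
                 clock: Callable[[], float] = time.monotonic):
        self.timeout = timeout
        self.clock = clock
        now = clock()
        self.last_seen = {sid: now for sid in slave_ids}
        self.emergency_stop = False

    def on_response(self, slave_id: str) -> None:
        self.last_seen[slave_id] = self.clock()

    def check(self) -> bool:
        """Latch an emergency stop as soon as any slave has been silent too long."""
        now = self.clock()
        if any(now - seen > self.timeout for seen in self.last_seen.values()):
            self.emergency_stop = True
        return self.emergency_stop


class ActuatorNode:
    """Slave side: stop the actuator if the master does not refresh its commands."""

    def __init__(self, timeout: float, clock: Callable[[], float] = time.monotonic):
        self.timeout = timeout
        self.clock = clock
        self.last_command = clock()
        self.output = 0.0
        self.stopped = False

    def on_command(self, value: float) -> None:
        if not self.stopped:                  # once stopped, the node stays stopped
            self.output = value
            self.last_command = self.clock()

    def tick(self) -> float:
        """Called periodically on the node; returns the actuator output to apply."""
        if self.clock() - self.last_command > self.timeout:
            self.stopped = True
            self.output = 0.0                 # safe state: actuator switched off
        return self.output


class FakeClock:
    """Manually advanced clock for the usage example below."""

    def __init__(self) -> None:
        self.t = 0.0

    def __call__(self) -> float:
        return self.t


clock = FakeClock()
master = MasterMonitor(["x_axis", "scara_motor_1"], timeout=0.010, clock=clock)
node = ActuatorNode(timeout=0.010, clock=clock)
node.on_command(0.5)
clock.t = 0.005
master.on_response("x_axis")
master.on_response("scara_motor_1")
print(master.check(), node.tick())   # False 0.5
clock.t = 0.020                      # 15 ms without responses or command refresh
print(master.check(), node.tick())   # True 0.0
```

Injecting the clock keeps the example deterministic; on the microcontrollers, hardware timers would play this role.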

On the intra-node level, the components of each node monitor themselves and each other using their internal watchdog timers. In case of a malfunction, the node is stopped. This leads to a timeout on the inter-node level and thus to an emergency stop.

7 CONCLUSIONS

In this paper, we have presented our patent-pending design of a novel haptic interface that allows simultaneous wide area motion and haptic interaction. It consists of a parallel SCARA manipulator for precise haptic rendering and a portal carrier system that enlarges the work space to 4 × 4 × 2 m³ by pre-positioning the end-effector. As the portal carrier is driven by three linear drives, the description of the system’s kinematics is very simple, which makes the system easy and robust to control.

We designed a distributed electronic control system based on Profibus that allows decentralized pre-processing of sensor data and control of actuators. A safety architecture designed to prevent accidents in case of malfunctions was implemented on the control system.

Although all components were developed specifically as an extension to our extended range telepresence system, the concepts presented here are more general and not limited to this application.

Future work will include finishing the setup of the hardware. While all components already exist, some integration remains to be done. To prove the suitability of the concept, we will implement an impedance controller that allows haptic interaction with virtual environments. The envisaged controller will be based on decoupling haptic rendering and wide area motion by optimizing the manipulability of the haptic interface (Formaglio et al., 2005).

However, to achieve the goal of telepresent manipulation with a real robotic teleoperator, more sophisticated control schemes might be necessary.

By adding haptics to our extended range telepresence system, all senses of interest will finally be telepresent. While users see and hear in the target environment, they receive proprioceptive feedback of their motion and have the possibility of haptic interaction during wide area motion. Thus, the user is deeply immersed in the target environment and eventually identifies with the teleoperator.

This will lead to a new quality in robot teleoperation, as users now have a truly intuitive interface to the robot and can fully focus on their task in the target environment.

ACKNOWLEDGEMENTS

This work was supported in part by the German Research Foundation (DFG) within the Collaborative Research Center SFB 588 on “Humanoid robots – learning and cooperating multimodal robots”.

REFERENCES

Bakker, N. H., Werkhoven, P. J., and Passenier, P. O. (1998). Aiding Orientation Performance in Virtual Environments with Proprioceptive Feedback. In Proceedings of the IEEE Virtual Reality Annual Intl. Symposium, pages 28–33, Atlanta, GA, USA.

Bergamasco, M., Allotta, B., Bosio, L., Ferretti, L., Perrini, G., Prisco, G. M., Salsedo, F., and Sartini, G. (1994). An Arm Exoskeleton System for Teleoperation and Virtual Environment Applications. In Proceedings of the IEEE Intl. Conference on Robotics and Automation (ICRA’94), pages 1449–1454, San Diego, CA, USA.

Borro, D., Savall, J., Amundarain, A., Gil, J. J., García-Alonso, A., and Matey, L. (2004). A Large Haptic Device for Aircraft Engine Maintainability. IEEE Computer Graphics and Applications, 24(6):70–74.

Bouguila, L., Ishii, M., and Sato, M. (2000). Multi-Modal Haptic Device for Large-Scale Virtual Environment. In Proceedings of the 8th ACM Intl. Conference on Multimedia, pages 277–283, Los Angeles, CA, USA.

Bunz, C., Deflorian, M., Hofer, C., Laquai, F., Rungger, M., Freyberger, F., and Buss, M. (2004). Development of an Affordable Mobile Robot for Teleexploration. In Proceedings of IEEE Mechatronics & Robotics (MechRob’04), pages 865–870, Aachen, Germany.

Campion, G., Wang, Q., and Hayward, V. (2005). The Pantograph Mk-II: A Haptic Instrument. In Proceedings of the IEEE Intl. Conference on Intelligent Robots and Systems (IROS’05), pages 723–728, Edmonton, AB, Canada.

Formaglio, A., Giannitrapani, A., Barbagli, F., Franzini, M., and Prattichizzo, D. (2005). Performance of Mobile Haptic Interfaces. In Proc. of the 44th IEEE Conference on Decision and Control and the European Control Conference 2005, pages 8343–8348, Seville, Spain.

Hoogen, J. and Schmidt, G. (2001). Experimental Results in Control of an Industrial Robot Used as a Haptic Interface. In IFAC Telematics Applications in Automation and Robotics, pages 169–174, Weingarten, Germany.

Nitzsche, N., Hanebeck, U. D., and Schmidt, G. (2004). Motion Compression for Telepresent Walking in Large Target Environments. Presence, 13(1):44–60.

Nitzsche, N. and Schmidt, G. (2004). A Mobile Haptic Interface Mastering a Mobile Teleoperator. In Proceedings of IEEE/RSJ Intl. Conference on Intelligent Robots and Systems, Sendai, Japan.

Richard, C. and Cutkosky, M. R. (1997). Contact Force Perception with an Ungrounded Haptic Interface. In ASME IMECE 6th Annual Symposium on Haptic Interfaces, Dallas, TX, USA.

Rößler, P., Beutler, F., and Hanebeck, U. D. (2005a). A Framework for Telepresent Game-Play in Large Virtual Environments. In 2nd Intl. Conference on Informatics in Control, Automation and Robotics (ICINCO 2005), volume 3, pages 150–155, Barcelona, Spain.

Rößler, P., Beutler, F., Hanebeck, U. D., and Nitzsche, N. (2005b). Motion Compression Applied to Guidance of a Mobile Teleoperator. In Proceedings of the IEEE Intl. Conference on Intelligent Robots and Systems (IROS’05), pages 2495–2500, Edmonton, AB, Canada.

SensAble Technologies (1996). PHANTOM Haptic Devices. http://www.sensable.com/products/phantom_ghost/phantom.asp.

Stemmer, R., Brockers, R., Drüe, S., and Thiem, J. (2004). Comprehensive Data Acquisition for a Telepresence Application. In Proceedings of Systems, Man, and Cybernetics, pages 5344–5349, The Hague, The Netherlands.

Ueberle, M., Mock, N., and Buss, M. (2003). Towards a Hyper-Redundant Haptic Display. In Proceedings of the International Workshop on High-Fidelity Telepresence and Teleaction, jointly with the IEEE Conference on Humanoid Robots (HUMANOIDS2003), Munich, Germany.

Unger, B. J., Klatzky, R. L., and Hollis, R. L. (2004). Teleoperation Mediated through Magnetic Levitation: Recent Results. In Proceedings of IEEE Mechatronics & Robotics (MechRob’04), Special Session on Telepresence and Teleaction, pages 1458–1462, Aachen, Germany.
