CONTEXT ANALYSIS FOR SEMANTIC MAPPING OF DATA SOURCES USING A MULTI-STRATEGY MACHINE LEARNING APPROACH

Youssef Bououlid Idrissi and Julie Vachon

DIRO, University of Montreal

Montreal (Quebec), Canada

Keywords:

context analysis, semantic mapping, data sources alignment, machine learning, multi-strategy, semantic web.

Abstract:

Be it on a web-wide or inter-enterprise scale, data integration has become a major necessity, driven by the expansion of the Internet and its widespread use for communication between business actors. However, since data sources are often heterogeneous, their integration remains an expensive procedure. Indeed, this task requires prior semantic alignment of all the concepts of the data sources. Doing this alignment manually is quite laborious, especially if there is a large number of concepts to be matched. Various solutions have been proposed to automate this step. This paper introduces a new framework for data source alignment which integrates context analysis into multi-strategy machine learning. Although their adaptability and extensibility are appreciated, current machine learning systems often suffer from the low quality and the lack of diversity of training data sets. To overcome this limitation, we introduce a new notion called the “informational context” of data sources. We then briefly explain the architecture of a context analyser to be integrated into a learning system combining multiple strategies to achieve data source mapping.

1 INTRODUCTION

Machine learning systems using a multi-strategy approach are composed of a set of basic learners. Although independent, these learners are coordinated by a special unit called the meta-learner. These machine learning systems are adaptive since they can deploy learners able to specialize in the processing of a specific type of information (e.g. field names, data types, etc.). These systems are also easily extensible since new learners, developed independently, can naturally be integrated under the control of a meta-learner. Each basic learner is responsible for automatically mapping the elements of two given data sets (source data onto target data) according to its specific knowledge. The main tasks of basic learners are the following:

• learning data mappings from training examples provided by a user.

• generating mappings between new data sets by using the classification model built during the training stage.

As for the meta-learner, its task is to combine all the

mapping proposals issued by the basic learners and to

compute a final matching for each concept present in

the source data set.
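The division of work between basic learners and the meta-learner can be sketched as follows. This is only a minimal illustration: the two learners, their weights, the field descriptions and the weighted-average combination rule are all assumptions made for the example (the paper does not prescribe a combination scheme), and the training stage is omitted.

```python
from difflib import SequenceMatcher

# Illustrative field descriptions (hypothetical names and types).
SOURCE = {"name": "cust_name", "type": "string"}
TARGETS = [
    {"name": "customer_name", "type": "string"},
    {"name": "customer_id", "type": "integer"},
]


def name_learner(source, target):
    """Basic learner specialised in field names (string similarity)."""
    return SequenceMatcher(None, source["name"], target["name"]).ratio()


def type_learner(source, target):
    """Basic learner specialised in data types (exact match only)."""
    return 1.0 if source["type"] == target["type"] else 0.0


def meta_learner(source, targets, learners):
    """Combine the learners' scores with a weighted average (an assumption)
    and return the best target name together with all combined scores."""
    total_weight = sum(weight for _, weight in learners)
    scores = {}
    for target in targets:
        combined = sum(weight * learner(source, target) for learner, weight in learners)
        scores[target["name"]] = combined / total_weight
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical weights; in a real system they would be learned.
LEARNERS = [(name_learner, 0.6), (type_learner, 0.4)]
best_match, all_scores = meta_learner(SOURCE, TARGETS, LEARNERS)
print(best_match, all_scores)
```

Adding a new learner only requires appending another (function, weight) pair to the list, which mirrors the extensibility argument made above.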

It is well known that the precision of the mapping directly depends on the quantity and quality of the information used in the training set. Most of the time, this training set is composed of data and their corresponding schema (XSD, DTD, RDFS, etc.). This selection appears too thin and restrictive if one hopes to resolve the semantic ambiguities which make it hard to identify implicit relations between concepts. Achieving accurate semantic analysis is thus a major challenge. Here are some examples of problems one can encounter:

• The attribute date1 of an entity Command does

not indicate if it represents the invoicing date, the

delivery date or the reception date.

• The attribute name of an entity Employee does

not specify if the content is about the first name or

the full name of the employee.

• The attribute amount of an entity Invoice does not reveal whether the indicated values include tax, nor does it specify the currency used.

• The attribute cl of an entity Command uses an abbreviated form which makes it hard to automatically identify that it denotes a command line.

Bououlid Idrissi Y. and Vachon J. (2005).

CONTEXT ANALYSIS FOR SEMANTIC MAPPING OF DATA SOURCES USING A MULTI-STRATEGY MACHINE LEARNING APPROACH.

In Proceedings of the Seventh International Conference on Enterprise Information Systems, pages 445-448

DOI: 10.5220/0002539804450448

Copyright © SciTePress

Taken alone, a specialized learner can prove inefficient or inadequate. For example, a learner specialized in the mapping of field names does not perform particularly well when it comes to matching names which are not well-known synonyms (e.g. "comment" and "outline"), names which abbreviate a concept (e.g. the name "home" used instead of "telephone at home") or names whose broad meaning would allow them to be matched with almost everything (e.g. "thing" or "entity"). Similarly, a content learner that bases its semantic deduction on the frequency of lexical units appearing in fields would prove quite inadequate for analyzing numerical fields. Moreover, a learner relying on a naive Bayesian approach (Berlin and Motro, 2002; Domingos and Pazzani, 1997; Kohavi, 1996) would be of little use for analyzing fields accepting values of numerical or enumerated types (e.g. "gender").

Hence, this article proposes to broaden and diversify both data sets and training sets by extending them with documents coming from what we call the informational context of data sources. Indeed, isolating a data source from its context (as is the case when solely considering its XML schema) reduces beforehand the set of usable cognitive information which underlies the conceptualization of the represented data. In practice, the context within which the data source lies constitutes a precious source of information that calls for a more systematic exploration so as to better define the semantics of concepts.

In the sequel, the notion of informational context is

defined and the architecture of a context analyzer is

presented.

2 INFORMATIONAL CONTEXT

The informational context of a data source is composed of all the information, saved in electronic format, which belongs to the data source’s environment and shares the same domain. It has two components, the descriptive context and the operational context:

1. The descriptive context of a data source gathers all the specification files describing the data or their application environment. These files document the data at various abstraction levels. For example, if the data source is a database, the descriptive context could be composed of the following documents:

• A requirements document describing the data and services which the user calls for in applications using the database. A test plan, for instance, may help establish the link between input and output data, a link which could conceal relevant complex concepts.

• Analysis and design specifications, including the various formal and semi-formal models elaborated for applications relying on the database. Data dictionaries are worth citing in this category. They describe in a formal fashion, among other things, data flows, data structures and data stores. As an example, consider a structural description of the concept "Order", using regular expressions:

    Order = O_Header + O_Item* + O_Footer
    O_Header = O_Number + Date + CustAdress
    O_Item = ItemNum + Descr + Qty + Price
    O_Footer = TaxAmount + TotalAmount

This provides relevant information about the composition and dependencies of the "Order" and "Items" concepts. Furthermore, a detailed description of each data element can be obtained from a data description repository.

• User manuals. As with dictionaries, such a resource may contain numerous formulas linking concepts.

2. The operational context of a data source is composed of all the data management and processing files. Among others, these files can be:

• Programs written in any known programming paradigm and language. The way concepts are manipulated could reveal valuable information about how they are linked to each other.

• Files containing SQL-type queries.

For each data source, the important point is to list all the documents which may compose the descriptive and operational contexts of this data source. The analysis of these documents (in addition to the analysis of the data themselves and their schema definition) will help enhance the knowledge required by the learners to deduce the best semantic mapping between the given data sources.
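To make the data dictionary example more concrete, the sketch below parses the "Order" definitions and expands a concept into its elementary fields. It is an illustration under simplifying assumptions: definitions are plain `name = part + part*` lines, `*` marks repetition, and the function names `parse_dictionary` and `leaf_fields` are hypothetical.

```python
# Data dictionary entries from the "Order" example, written as plain text.
DEFINITIONS = """
Order = O_Header + O_Item* + O_Footer
O_Header = O_Number + Date + CustAdress
O_Item = ItemNum + Descr + Qty + Price
O_Footer = TaxAmount + TotalAmount
"""


def parse_dictionary(text):
    """Return {concept: [(component, repeated), ...]} for each definition line."""
    structure = {}
    for line in text.strip().splitlines():
        concept, _, body = line.partition("=")
        components = []
        for part in body.split("+"):
            part = part.strip()
            repeated = part.endswith("*")  # '*' marks a repeated component
            components.append((part.rstrip("*"), repeated))
        structure[concept.strip()] = components
    return structure


def leaf_fields(structure, concept):
    """Expand a concept recursively into the elementary fields it is built from."""
    if concept not in structure:
        return [concept]  # not defined further: an elementary field
    fields = []
    for component, _ in structure[concept]:
        fields.extend(leaf_fields(structure, component))
    return fields


structure = parse_dictionary(DEFINITIONS)
print(structure["Order"])
print(leaf_fields(structure, "Order"))
```

The resulting composition relations are the kind of information a learner could use as a hint that several elementary fields together form one higher-level concept.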

3 CONTEXT ANALYSIS

The main objective of context analysis is to expand data sources with semantic information and hints drawn from the context. Among other uses, this information is intended to be used by learners during their training stage to increase the precision of the mapping they are asked to compute.

In particular, context analysis offers an interesting opportunity for resolving complex mappings, which pair a combination of concepts with a single concept (e.g. (street, zip code, city) → employee_address).

To the best of our knowledge, there is still no satisfactory solution addressing this problem although it is frequently encountered. For example, we can imagine a context analyzer detecting the presence of a function call "concat(address, zip_code, city)" in a program file. The analyzer should then be able to add the definition of a new concept complete_address to the data source concerned and present it as a new candidate to be mapped (hence, if complete_address → employee_address, then our problem is solved by transitivity).

As mentioned earlier, learners specialized in field name analysis may often prove ineffective when processing, for example, abbreviated names or names with a very broad meaning. By making the most of the context, it is possible to tag field names with additional information to make them more meaningful. From this viewpoint, a simple documentation text file may turn out to be a valuable source of information if it contains a complete data description table (with two columns: one for data names, the other for their descriptions).
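The two enrichments just described can be sketched in a few lines. This is a toy illustration, not the analyzer itself: the regular expression only recognises literal `concat(...)` calls, the description table content is made up, and a meaningful name such as complete_address cannot be inferred automatically, so the sketch simply labels the composite candidate by joining the names of its parts.

```python
import re

PROGRAM_TEXT = "full = concat(address, zip_code, city)"

# Two-column description table from a documentation file (made-up content).
DESCRIPTION_TABLE = """\
cl      command line
date1   delivery date
"""

CONCAT_CALL = re.compile(r"concat\(([^)]*)\)")


def find_composites(program_text):
    """Return one tuple of field names per concat(...) call found in the text."""
    return [tuple(arg.strip() for arg in match.group(1).split(","))
            for match in CONCAT_CALL.finditer(program_text)]


def read_descriptions(table_text):
    """Map each data name (first column) to its description (rest of the line)."""
    descriptions = {}
    for line in table_text.splitlines():
        if line.strip():
            name, description = line.split(None, 1)
            descriptions[name] = description.strip()
    return descriptions


def enrich(fields, program_text, table_text):
    """Build an enriched source: described fields plus composite candidates."""
    descriptions = read_descriptions(table_text)
    enriched = {name: {"description": descriptions.get(name), "parts": ()}
                for name in fields}
    for parts in find_composites(program_text):
        # Placeholder label for the new composite concept (an assumption).
        enriched["+".join(parts)] = {"description": None, "parts": parts}
    return enriched


enriched = enrich(["address", "zip_code", "city", "cl"], PROGRAM_TEXT, DESCRIPTION_TABLE)
for name, info in enriched.items():
    print(name, info)
```

The composite entry can then be offered to the learners as one more mapping candidate, while the description attached to `cl` gives a name learner more to work with than the bare abbreviation.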

Furthermore, the analysis of formal annotations in models may also help refine mapping solutions. Among others, OCL is a formal language (Botting, 2004) which allows the expression of constraints in object-oriented models. In fact, OCL is a standard of the OMG¹ and can be used together with UML to constrain models. Consider the UML class diagram shown in Figure 1.

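[UML class diagram: classes University and Student joined by an association with multiplicities 1 and 1..*; the following OCL note is attached]

    context University inv:
        Student.allInstances->forAll(s1, s2 |
            s1 <> s2 implies s1.name <> s2.name)

Figure 1: OCL invariant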

Using OCL invariant constraints, one can easily specify that there cannot be two students with the same name within the same university. This OCL invariant (specified in the attached note) must be satisfied by all data sets constructed on this model. One must therefore ensure that no computed mapping produces target data violating the invariant. The analysis of such invariants contributes de facto to the accuracy of computed mappings.
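As an illustration of how such an invariant could prune candidate mappings, the sketch below checks the name-uniqueness constraint on the target data produced by two hypothetical mappings. The row contents, the two candidate mappings and the function names are assumptions made for the example; translating OCL into executable checks is not covered here.

```python
from collections import Counter


def duplicated_names(students):
    """Return the names shared by more than one student (empty list if none)."""
    counts = Counter(student["name"] for student in students)
    return sorted(name for name, count in counts.items() if count > 1)


def accept_mapping(mapped_students):
    """Reject a candidate mapping whose target data breaks the invariant."""
    return not duplicated_names(mapped_students)


# Hypothetical source rows and two candidate mappings onto Student.name.
ROWS = [{"first": "Ann", "last": "Roy"}, {"first": "Ann", "last": "Cote"}]
by_first_name = [{"name": row["first"]} for row in ROWS]
by_full_name = [{"name": f'{row["first"]} {row["last"]}'} for row in ROWS]

print(accept_mapping(by_first_name))  # mapping first name -> name
print(accept_mapping(by_full_name))   # mapping full name -> name
```

Here the invariant rules out mapping the first name alone onto `name`, which echoes the first name versus full name ambiguity mentioned in the introduction.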

To summarize, context analysis (cf. Figure 2) is a process applied to a data source and its context and producing:

• an enriched data source, which can be used by learners as a training set or as a candidate data set for mapping.

• a set of integrity constraints, which computed mappings must satisfy.

[Figure 2: Context analysis. The context analyser takes a data source and its informational context as input and produces an enriched data source and integrity constraints.]

¹ Object Management Group

Data source enhancement consists, among other things, in replacing ambiguous or vaguely defined concept names with more meaningful ones. It may also enrich the source with new names that define composite concepts.

4 ARCHITECTURE OF THE CONTEXT ANALYZER

The informational context of a data source may include a wide range of document types. As mentioned, they can differ in their style, which can be either descriptive or operational. They can also differ in the degree of formality of their contents. Some may have a formal content (e.g. formal models such as transition systems, programs, etc.), some may rely on a semi-formal notation (e.g. decision tables, UML diagrams, etc.), while others are written without any concern for formality (e.g. textual documents, drawings, etc.). Of course, informal documents are the most demanding to analyze. For efficient results, it is important that the analysis addresses each type of content individually, taking into account its specificities.

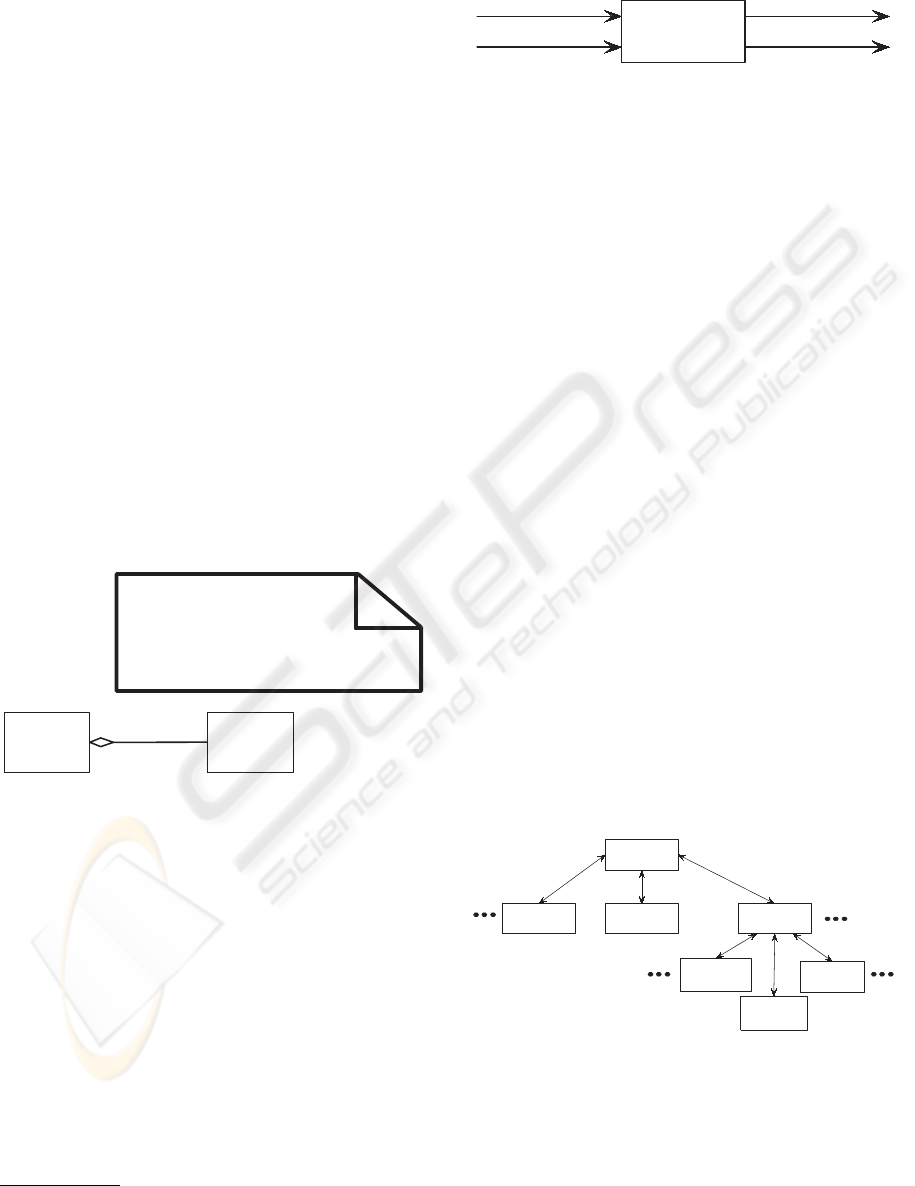

Figure 3: Architecture of a context analyzer. [Diagram: a main meta-analyzer coordinates a program analyzer, an SQL analyzer and a table analyzer; the program analyzer in turn coordinates C++, Java and Fortran program analyzers.]

Hence, each type of content is treated by an analyzer trained for this specific kind of information. Following a multi-strategy approach, we advocate an architecture (cf. Figure 3) composed of many specialized analyzers coordinated by a main meta-analyzer.


The role of the meta-analyzer consists in running through the context documents, identifying the various content types and determining which specialized analyzer is the most appropriate to handle each of them. Results returned by specialized analyzers are then filtered (redundancy elimination) and combined by the meta-analyzer. The context analyzer has a hierarchical architecture of arbitrary depth. Indeed, a child analyzer can itself supervise, as a meta-analyzer, a set of more specialized analyzers. As illustrated in Figure 3, a program analyzer can itself coordinate the processing of many specialized analyzers, each one being an expert in the parsing of a specific programming language.

Finally, it is possible for analyzers to collaborate with each other without being related in the coordination hierarchy. For example, a program analyzer could call on an SQL analyzer to analyze a query embedded in the program it is parsing.
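The hierarchical dispatch described in this section can be sketched as a small composite structure. This is a minimal sketch under several assumptions: documents arrive already tagged with a content type (the paper leaves type identification to the meta-analyzer), the hint-extraction functions are trivial stand-ins, all class and function names are hypothetical, and collaboration between unrelated analyzers is not shown.

```python
class Analyzer:
    """Leaf analyzer: handles one content type and returns a set of hints."""

    def __init__(self, content_type, extract):
        self.content_type = content_type
        self.extract = extract

    def handles(self, document):
        return document["type"] == self.content_type

    def analyze(self, document):
        return set(self.extract(document["text"]))


class MetaAnalyzer:
    """Dispatches documents to child analyzers and merges their results."""

    def __init__(self, children):
        self.children = children

    def handles(self, document):
        return any(child.handles(document) for child in self.children)

    def analyze(self, document):
        results = set()  # using a set eliminates redundant hints
        for child in self.children:
            if child.handles(document):
                results |= child.analyze(document)
        return results

    def analyze_all(self, documents):
        results = set()
        for document in documents:
            results |= self.analyze(document)
        return results


def java_hints(text):
    """Stand-in extractor: flags string concatenation calls."""
    return ["uses concat"] if "concat(" in text else []


def sql_hints(text):
    """Stand-in extractor: flags joins between tables."""
    return ["has join"] if " join " in text.lower() else []


# A program analyzer is itself a meta-analyzer over language-specific analyzers.
program_analyzer = MetaAnalyzer([Analyzer("java", java_hints)])
main_analyzer = MetaAnalyzer([program_analyzer, Analyzer("sql", sql_hints)])

documents = [
    {"type": "java", "text": "x = concat(a, b);"},
    {"type": "sql", "text": "SELECT * FROM a JOIN b ON a.id = b.id"},
    {"type": "java", "text": "y = concat(c, d);"},
]
hints = main_analyzer.analyze_all(documents)
print(sorted(hints))
```

Because `MetaAnalyzer` exposes the same `handles` and `analyze` methods as a leaf analyzer, meta-analyzers can be nested to any depth, which is the property the hierarchical architecture relies on.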

5 CONCLUSIONS

Exploitation of contextual resources is a promising approach to solving the problem of semantic alignment of data sources (Tierney and Jackson, 2004). Documents composing the context of a data source offer valuable information which can help resolve major problems such as the identification of complex mappings (e.g. mapping a combination of source concepts onto a single target concept).

As an extension to machine learning architectures using a multi-strategy approach for semantic mapping (Doan et al., 2003; Berlin and Motro, 2002; Doan et al., 2002; Kurgan et al., 2002), we propose a context analyzer based on multiple specialized analyzers which are themselves coordinated by a meta-analyzer. This architecture has the advantage of being both adaptive and extensible. The hierarchical organization of analyzers allows a more efficient distribution of tasks between units. It also facilitates analyzing the context at different abstraction levels. Hence, the context analyzer can enhance data sources with a range of details going from the most general (with low time cost) to the most specific (with higher time cost).

Moreover, this way of exploiting the context can reward the effort put into data documentation. It also reduces the need for user intervention in the mapping, which can be tedious, costly and error-prone.

Although only two enrichment types have been brought up in this paper (i.e. making abbreviated field names explicit and identifying composite concepts), many other types of data source enrichment can be achieved through context analysis. Among other goals, our project aims at identifying and experimenting with various enrichment strategies (based on context information) which could help improve the quality of data source mappings.

REFERENCES

Berlin, J. and Motro, A. (2002). Database schema matching using machine learning with feature selection. In Proceedings of the 14th International Conference on Advanced Information Systems Engineering, pages 452–466.

Botting, R. J. (2004). The object constraint language. Web site. http://www.csci.csusb.edu/dick/samples/ocl.html.

Doan, A., Domingos, P., and Halevy, A. (2003). Learning to match the schemas of data sources: A multistrategy approach. Machine Learning, 50(3):279–301.

Doan, A., Madhavan, J., Domingos, P., and Halevy, A. (2002). Learning to map between ontologies on the semantic web. In Proceedings of the 11th International Conference on World Wide Web, pages 662–673.

Kohavi, R. (1996). Scaling up the accuracy of naive-Bayes classifiers: A decision-tree hybrid. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, pages 202–207, Portland, OR. AAAI Press.

Kurgan, L., Swiercz, W., and Cios, K. J. (2002). Semantic mapping of XML tags using inductive machine learning. In Proceedings of the 2002 International Conference on Machine Learning and Applications (ICMLA’02), pages 99–109, Las Vegas, NV.

Domingos, P. and Pazzani, M. (1997). On the optimality of the simple Bayesian classifier under zero-one loss. Machine Learning, 29:103–130.

Tierney, B. and Jackson, M. (2004). Contextual semantic integration for ontologies. In Doctoral Consortium of the 21st Annual British National Conference on Databases.

ICEIS 2005 - DATABASES AND INFORMATION SYSTEMS INTEGRATION
