MODELLING HYBRID CONTROL SYSTEMS WITH BEHAVIOUR NETWORKS

Pierangelo Dell’Acqua, Anna Lombardi

Department of Science and Technology (ITN) - Linköping University

601 74 Norrköping, Sweden

Luís Moniz Pereira

Centro de Inteligência Artificial (CENTRIA) - Departamento de Informática, Universidade Nova de Lisboa

2829-516 Caparica, Portugal

Keywords:

Behaviour networks, hybrid control systems, action selection, control architectures for autonomous agents.

Abstract:

We present an approach to model adaptive, dynamic hybrid control systems based on behaviour networks. We extend and modify the approach to behaviour networks with integrity constraints, non-ground rules, internal actions, and modules to make it self-adaptive and dynamic. The proposed approach is general, reconfigurable, robust, and suitable for environments that are dynamic and too complex to be entirely predictable, where the controlling system has limited computational and time resources.

1 INTRODUCTION

The term hybrid is nowadays accepted to denote systems whose behaviour is defined by processes of diverse characteristics. A survey on hybrid systems can be found in (Antsaklis and Nerode, 1998). In the control area, this signifies the combination of continuous and discrete dynamics, e.g. systems with signals that can take values from a continuous (real numbers) and a discrete (integer numbers) set, respectively. Some of the signals can also be discrete-event driven, in an asynchronous way. Control systems of this kind, where continuous and discrete dynamics are modelled together, have proved effective in the computer control of continuous processes. In fact, they represent the typical situation when controlling real systems: the system to be controlled is continuous-time, while the controller works in a discrete way, as it needs a time interval to compute the next control input. Hybrid systems have been studied by both the computer science and the control communities. Initially the work was carried out separately, and only recently have the efforts been put together, resulting in formal methods used to design intelligent control systems (Davoren and Nerode, 2000).

Behaviour networks were introduced by Pattie Maes (Maes, 1989) and (Maes, 1991) to address the problem of action selection in environments that are dynamic and too complex to be entirely predictable, and where the system has limited computational and time resources.¹ Therefore, the action selection cannot be completely rational and optimal.

Maes adopted the view of building intelligent systems as a society of interacting, mindless agents, each having its own specific competence (Minsky, 1986) and (Brooks, 1986). The idea is that competence modules cooperate in such a way that the society as a whole functions properly. Such an architecture is very attractive because of its distributedness, modular structure, emergent global functionality and robustness (Maes, 1989). The problem is how to determine whether a competence module should become active (i.e., selected for execution) at a certain moment. Behaviour networks addressed this problem by creating a network of competence modules and letting them activate and inhibit each other along the links of the network. Global parameters were introduced to guide the activation/inhibition dynamics of the network. Behaviour networks combine characteristics of traditional AI and of the connectionist approach by using a connectionist computational model on a symbolic, structured representation.

In this paper we adapt the formalism of behaviour networks to make it possible to model hybrid control systems. In particular, we extend the language of behaviour networks to allow the competence modules to contain variables. This feature makes it possible for the controller to receive the values of the parameters from a dynamic environment. Further, we introduce internal actions and modules (sets of atoms and rules) in such a way that the network can test and modify its global parameters (self-tuning) together with those of the competence modules defining its behaviour. Moreover, we introduce integrity constraints to prevent a network in a safe state from entering an unsafe state (by executing some competence module).

¹ See pp. 244-255 in (Franklin, 1995) for a summary introduction.

Dell'Acqua P., Lombardi A. and Moniz Pereira L. (2005). MODELLING HYBRID CONTROL SYSTEMS WITH BEHAVIOUR NETWORKS. In Proceedings of the Second International Conference on Informatics in Control, Automation and Robotics, pages 98-105. DOI: 10.5220/0001188300980105. Copyright © SciTePress

The paper is structured as follows: Section 2 introduces the notion of hybrid control systems, Section 3 presents the language of extended behaviour networks and, for reasons of space, only sketches the idea of the algorithm for action selection. Section 4 describes how to model hybrid control systems by extended behaviour networks and provides a few examples. Finally, Section 5 discusses some future work.

2 HYBRID CONTROL SYSTEM

Several approaches to hybrid control systems have

been defined in the literature, see e.g. (Antsaklis

and Nerode, 1998) where examples of the most com-

mon structures are given. A hybrid control system

can be seen as a switching system where the dynamics

are described by a finite number of dynamical models

(given in terms of differential or difference equations)

together with a set of rules for switching among these

models. These switching rules can be represented

by logic expressions. A general hybrid control sys-

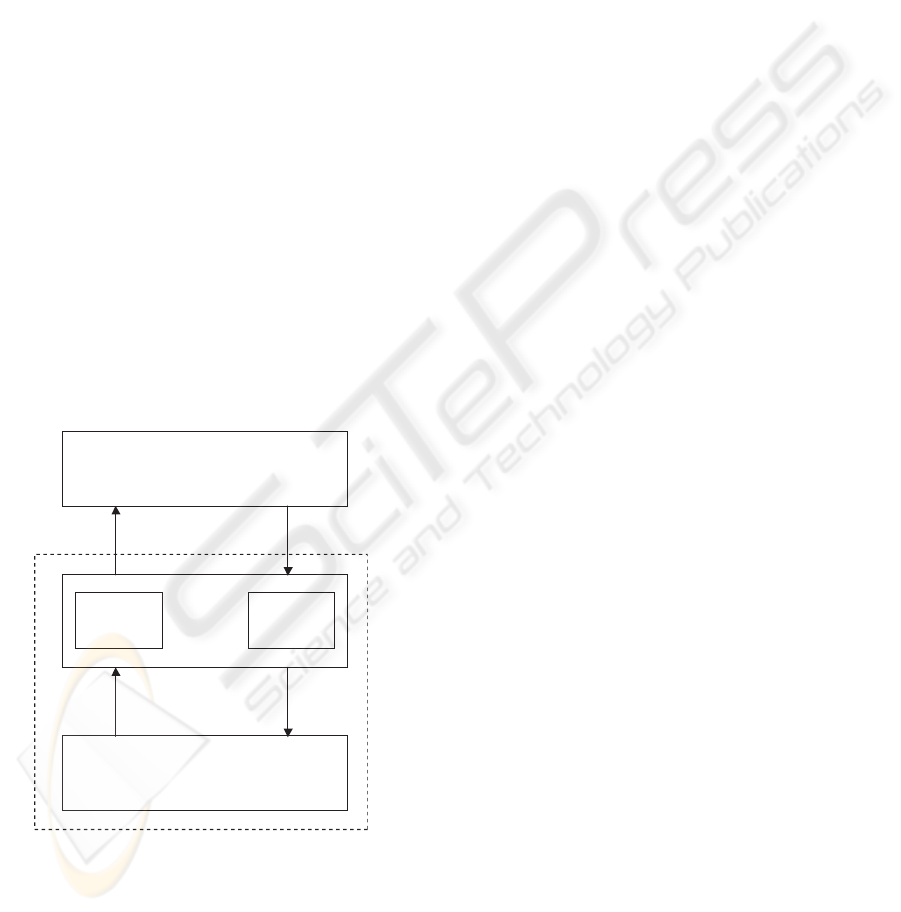

tem is represented in Fig. 1 (Koutsoukos et al., 2000),

(Antsaklis and Nerode, 1998).

Controller

Plant

Generator Actuator

)(

~

k

tx )(

~

k

tr

)

(t

x

)

(t

r

Figure 1: Hybrid control system.

The plant contains all the continuous dynamics, including possible traditional controllers. The controller consists of a discrete-decision process described by a finite automaton.

The hybrid control system used in this work consists of a continuous system to be controlled (the plant) and a logic-based controller connected to the plant via an interface, in a feedback configuration.

A simple example of a hybrid control system of this kind is the thermostat/furnace system (Franklin et al., 2002). The thermostat regulates the temperature in a room. The furnace and the heat flow represent the continuous-time system to be controlled. The controller interacts with the continuous dynamics of the furnace to counteract the heat losses in order to keep the temperature within a desirable range. The thermostat is, therefore, an asynchronous discrete-event driven system which responds to the symbols {too hot, too cold, normal}. The room temperature is translated into one of these symbols and the thermostat responds by sending electrical signals to the furnace. The voltage of the furnace is controlled, and the room temperature is increased or decreased accordingly.

2.1 Plant

The plant is, in general, a nonlinear continuous-time system that can be described by a set of ordinary differential equations

$$\dot{x}(t) = f(x(t), r(t)) \tag{1}$$

where $x(t) \in X \subset \mathbb{R}^n$ denotes the state vector and $r(t) \in R \subset \mathbb{R}^m$ the input vector. For each fixed $r(t) \in R$, the function $f(\cdot, r(t)) : X \to X$ is continuous in $X$ and satisfies the conditions for existence and uniqueness of solutions for initial states $x_0 \in X$.

In the thermostat/furnace hybrid control system introduced above, the plant consists of the furnace and the room. If $x(t)$ denotes the room temperature at time $t$ in degrees Celsius, the plant equation (1) becomes (in a simplified model) (van Beek et al., 2003), (Koutsoukos et al., 2000)

$$\dot{x}(t) = -0.1\,x(t) + 0.4\,r(t)$$

where the control input $r(t)$ represents the voltage on the furnace control circuit (0 or 12 V).
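To make the simplified plant model concrete, the following Python sketch integrates it with the forward Euler method for a constant input voltage. The step size `dt`, the function name and the initial temperature are illustrative assumptions, not part of the model.

```python
def simulate_plant(x0: float, r: float, t_end: float, dt: float = 0.01) -> float:
    """Forward-Euler integration of dx/dt = -0.1*x + 0.4*r for a constant input r (volts).

    Illustrative sketch: dt and the function name are assumptions, not from the paper.
    """
    x = x0
    for _ in range(int(round(t_end / dt))):
        x += dt * (-0.1 * x + 0.4 * r)
    return x


if __name__ == "__main__":
    # Furnace on (r = 12 V): the temperature tends to the equilibrium 0.4 * 12 / 0.1 = 48 degrees.
    print(simulate_plant(15.0, 12.0, 200.0))
    # Furnace off (r = 0 V): the temperature decays towards 0 degrees.
    print(simulate_plant(15.0, 0.0, 200.0))
```

Setting $\dot{x} = 0$ gives the equilibrium $x = 4r$, i.e. 48 degrees with the furnace permanently on, well above a 20-degree set point, so the furnace has to be switched off again by the controller.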

2.2 Controller

The controller is a discrete-time dynamic system described by a set of difference equations

$$\tilde{s}(t_{k+1}) = \delta(\tilde{s}(t_k), \tilde{x}(t_k)), \qquad \tilde{r}(t_k) = \phi(\tilde{s}(t_k)) \tag{2}$$

The tilde denotes representational symbols: plant events that go to the controller and actions sent from the controller to the plant. The functions $\delta$ and $\phi$ are defined in terms of a logic formalism that will be described in detail in Sec. 3.

Let the desired room temperature ($T$) in the thermostat/furnace example be set to 20 degrees. Then the plant event symbols sent to the controller are:

if room temperature is lower than 20 → too cold
if room temperature is higher than 20 → too hot

Each of the above event symbols will activate a rule of the controller that generates an action to be sent to the plant:

• too cold: switch on the furnace. A voltage is applied to the furnace control circuit: $r(t) = 12$ V
• too hot: switch off the furnace ($r(t) = 0$ V)

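The controller in this case has two states, i.e. the vector $\tilde{s}(t_k)$ has two components

$$\tilde{s}(t_k) = \begin{bmatrix} \tilde{s}_1(t_k) \\ \tilde{s}_2(t_k) \end{bmatrix}$$

The output equation in (2) becomes

$$\phi(\tilde{s}_1(t_k)) = \tilde{r}_1(t_k), \qquad \phi(\tilde{s}_2(t_k)) = \tilde{r}_2(t_k)$$

where $\tilde{r}_1 \Leftrightarrow \mathit{on}$ and $\tilde{r}_2 \Leftrightarrow \mathit{off}$.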

The controller is assumed to be adaptive in the sense that its parameters can change in response to variations of the environment.
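The thermostat controller of equation (2) can be written directly as a next-state function δ and an output function φ. The sketch below is a hypothetical Python rendering; in particular, treating the event *normal* as leaving the state unchanged is our assumption, since the text only specifies the reactions to *too cold* and *too hot*.

```python
from enum import Enum
from typing import List, Tuple


class State(Enum):
    S1 = "s1"  # furnace on
    S2 = "s2"  # furnace off


def delta(s: State, event: str) -> State:
    """Next-state function: s(t_{k+1}) = delta(s(t_k), x(t_k))."""
    if event == "too cold":
        return State.S1
    if event == "too hot":
        return State.S2
    return s  # assumption: "normal" keeps the current state


def phi(s: State) -> str:
    """Output function: r(t_k) = phi(s(t_k)), with r1 <=> on and r2 <=> off."""
    return "on" if s is State.S1 else "off"


def run(s0: State, events: List[str]) -> Tuple[State, List[str]]:
    """Feed a sequence of plant events to the controller; return final state and emitted symbols."""
    s, outputs = s0, []
    for event in events:
        outputs.append(phi(s))  # the output depends on the current state only
        s = delta(s, event)
    return s, outputs
```

Because φ depends only on the current state, the symbol emitted at $t_k$ reflects the event received at $t_{k-1}$: starting in S2, the events too cold, normal, too hot produce the outputs off, on, on and leave the controller in S2.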

2.3 Interface

Signals in the plant and in the controller are of different kinds, and therefore the plant and the controller need an interface to communicate with each other. The task of the interface is to translate the output of the plant (here the plant also includes the sensors used to measure quantities of interest) into symbols that can be understood by the controller, and vice versa. The conversion of the continuous-time output of the plant into symbols is performed by a generator (see Fig. 1)

$$x(t) \Rightarrow \tilde{x}(t_k)$$

The controller receives a symbol from the plant through the interface. In the thermostat/furnace example, let the room temperature be measured and its value be 15 degrees. If the desired temperature is set to 20 degrees, the controller will determine the state too cold as active and will send a control signal to the furnace to switch it on. The actuator carries out this communication by performing

$$\tilde{r}(t_k) \Rightarrow r(t)$$
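Putting the generator, the controller and the actuator together gives a closed-loop simulation of the thermostat/furnace system. The sketch below assumes a sampling period `Ts` of 0.5 time units, with the input voltage held constant between samples and forward-Euler integration of the plant; these choices, like all function names, are illustrative and not taken from the paper.

```python
from typing import List


def generator(x: float, T: float = 20.0) -> str:
    """Interface: continuous temperature x(t) -> plant event symbol x~(t_k)."""
    if x < T:
        return "too cold"
    if x > T:
        return "too hot"
    return "normal"


def actuator(symbol: str) -> float:
    """Interface: controller symbol r~(t_k) -> furnace voltage r(t)."""
    return 12.0 if symbol == "on" else 0.0


def closed_loop(x0: float = 15.0, t_end: float = 100.0,
                Ts: float = 0.5, dt: float = 0.01) -> List[float]:
    """Simulate the feedback loop; return the temperature at the end of each sampling period.

    Ts and dt are assumptions of this sketch.
    """
    x, heating, trace = x0, "off", []
    for _ in range(int(round(t_end / Ts))):
        event = generator(x)
        if event == "too cold":
            heating = "on"
        elif event == "too hot":
            heating = "off"
        r = actuator(heating)  # voltage held constant until the next sample
        for _ in range(int(round(Ts / dt))):
            x += dt * (-0.1 * x + 0.4 * r)
        trace.append(x)
    return trace
```

Starting from 15 degrees, as in the example above, the temperature first rises and then oscillates within roughly 1.5 degrees of the 20-degree set point; a shorter sampling period narrows the band.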

3 EXTENDED BEHAVIOUR NETWORKS

In this section we extend the approach to behaviour networks proposed by Pattie Maes (Maes, 1991) and (Maes, 1989) to allow rules containing variables, internal actions, integrity constraints, and modules (sets of atoms and rules). This will allow us to model hybrid control systems.

A behaviour network is characterized by five modules: R, P, H, C, and G. The module R is a set of rules formalizing the behaviour of the network, P is a set containing the global parameters, H is the internal memory of the network, C its integrity constraints and G its goals/motivations. We call the tuple S=(R, P, H, C, G) the state of the network. We also assume a given module Math containing the axioms of elementary mathematics.

3.1 Language L

Let c be a constant, q a predicate symbol of arity n, and x a variable. Then terms and atoms in L are defined as follows:

    term := x | c
    atom := q(term_1, ..., term_n)

When the arity of q is 0, we write the atom as q. To express in L that an atom belongs to a module, we introduce the notion of indexed atoms. We name (in L) the modules R, P, H, C, G, E and Math as r, p, h, c, g, e and math. Let α > 0 be a real number.

    iAtom*    := h:atom | p:atom | g:goal | c:ic | r:rule
    iAtom     := iAtom* | e:atom | math:atom
    niAtom    := h÷atom | p÷atom | g÷goal | c÷ic | r÷rule
    iAtomSeq  := iAtom* | iAtom*, iAtomSeq
    niAtomSeq := iAtom | iAtom, niAtomSeq | niAtom | niAtom, niAtomSeq
    goal      := niAtomSeq
    ic        := niAtomSeq
    rule      := ⟨prec; del; add; action; α⟩
    prec      := ε | niAtomSeq
    del       := ε | iAtomSeq
    add       := ε | iAtomSeq
    action    := atom | noaction

An iAtom of the form m:X states that X belongs to the module M whose name is m, while an niAtom m÷X states that X does not belong to M. An niAtomSeq is a sequence of iAtoms and niAtoms separated by the symbol ','. Note that an niAtomSeq may contain e:atom or math:atom, while an iAtomSeq cannot. The reason is that the modules E and Math cannot be updated. Both goals and integrity constraints (ic) are niAtomSeqs. A goal (motivation) expresses some condition to be achieved, while an integrity constraint represents a list of conditions that must not hold together. A rule² is a tuple of the form

    ⟨prec; del; add; action; α⟩

where prec is a sequence of preconditions (possibly the empty sequence ε) that have to be fulfilled before the rule can become executable. del and add represent the internal effect of the rule in terms of a delete and an add sequence of indexed atoms. When both del and add are ε, the rule has no internal effects. The atom action represents the external effect of the rule: an action that must be executed. We employ 'noaction' to indicate that the rule does not have any external effect. Finally, each rule has a level α of strength.³ Variables in a rule are universally quantified over the entire rule.

² In (Maes, 1991) rules are called competence modules.

ICINCO 2005 - INTELLIGENT CONTROL SYSTEMS AND OPTIMIZATION

The following is an example of a rule, where E and H are the modules representing the environment and the internal memory of the network:

    ⟨h÷on, e:temp(x), math:x<20; ε; h:on; heating(on); 0.5⟩

The rule states that if the heating is off (not on) and the temperature x is less than 20, then the heating must be turned on. This is achieved by adding on to H (since h:on belongs to the add list of the rule) and by executing the action heating(on). The strength of the rule is 0.5.
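One possible in-memory representation of the rules of L, sketched in Python below, may help when reading the remaining definitions. The encoding is our own assumption, not the authors': atoms are tuples whose first element is the predicate, variables are strings starting with '?', and goals or rules nested inside atoms (g:goal, r:rule) are not covered.

```python
from dataclasses import dataclass
from typing import Tuple

Atom = Tuple  # (predicate, arg_1, ..., arg_n); assumption: variables are strings starting with "?"


@dataclass(frozen=True)
class Lit:
    module: str      # module name: "h", "p", "g", "c", "r", "e" or "math"
    positive: bool   # True for m:X, False for m÷X
    atom: Atom


@dataclass(frozen=True)
class Rule:
    prec: Tuple[Lit, ...]
    delete: Tuple[Lit, ...]   # the del list ('del' is a Python keyword)
    add: Tuple[Lit, ...]
    action: Atom              # ("noaction",) when there is no external effect
    alpha: float              # strength level


def is_var(t) -> bool:
    return isinstance(t, str) and t.startswith("?")


def variables(rule: Rule) -> set:
    """All variables of a rule; they are universally quantified over the whole rule."""
    atoms = [lit.atom for lit in rule.prec + rule.delete + rule.add] + [rule.action]
    return {t for a in atoms for t in a[1:] if is_var(t)}


# <h÷on, e:temp(x), math:x<20; ε; h:on; heating(on); 0.5>
heating_rule = Rule(
    prec=(Lit("h", False, ("on",)),
          Lit("e", True, ("temp", "?x")),
          Lit("math", True, ("<", "?x", 20))),
    delete=(),
    add=(Lit("h", True, ("on",)),),
    action=("heating", "on"),
    alpha=0.5,
)
```

Here `on` in heating(on) is a constant, so the only variable of the example rule is x.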

A substitution σ is a finite set of bindings of the form variable/term. A substitution can be applied to any expression X in L (written as $X^{\sigma}$) by simultaneously replacing every variable v in X with t for every binding v/t in σ. For example, if X is h:q(x,y,z,c) and σ = {x/y, z/d}, then $X^{\sigma}$ is h:q(y,y,d,c). A ground expression is one not containing variables. Two expressions X and Y in L are unifiable (written X ≈ Y) iff there exists a substitution σ such that $X^{\sigma} = Y^{\sigma}$, where = denotes syntactic equality.
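Since the terms of L are only variables and constants, substitution and unification are particularly simple. The Python sketch below assumes the tuple encoding of atoms (variables are strings starting with '?') and leaves out the module index, which must simply coincide for m:X ≈ m:Y; the function names are illustrative.

```python
from typing import Dict, Optional, Tuple

Atom = Tuple  # (predicate, arg_1, ..., arg_n)


def is_var(t) -> bool:
    """Assumed convention: variables are strings starting with '?'."""
    return isinstance(t, str) and t.startswith("?")


def apply(sigma: Dict, atom: Atom) -> Atom:
    """X^sigma: simultaneously replace every variable v having a binding v/t by t."""
    return tuple(sigma.get(t, t) if is_var(t) else t for t in atom)


def unify(a: Atom, b: Atom) -> Optional[Dict]:
    """Return a substitution sigma with a^sigma == b^sigma, or None if a and b are not unifiable."""
    if len(a) != len(b) or a[0] != b[0]:
        return None
    s: Dict = {}

    def walk(t):
        while is_var(t) and t in s:
            t = s[t]
        return t

    for x, y in zip(a[1:], b[1:]):
        x, y = walk(x), walk(y)
        if x == y:
            continue
        if is_var(x):
            s[x] = y
        elif is_var(y):
            s[y] = x
        else:
            return None  # two distinct constants
    # resolve binding chains so that the result can be applied simultaneously
    return {v: walk(v) for v in s}
```

With this encoding the example above reads `apply({"?x": "?y", "?z": "d"}, ("q", "?x", "?y", "?z", "c"))`, which yields `("q", "?y", "?y", "d", "c")`; the binding x/y is not applied again to the y it introduces, because the replacement is simultaneous.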

3.2 Rule Selection

At every state, a rule in R must be selected for execution. To do so, one needs to find all the rules that are executable and select one. To determine whether a rule is executable, one needs to verify whether its preconditions (prec) are true at S. An niAtomSeq l is true at a state S iff:

- for every m:X in l it holds that X ∈ M, and
- for every m÷X in l there exists no substitution σ for which $X^{\sigma} \in M$.

A state S=(R,P,H,C,G) is safe if there exists no substitution σ that makes an integrity constraint in C true at S. Applying a rule r = ⟨prec; del; add; action; α⟩ to a state $S = (M_1, \ldots, M_5)$ makes the system move to a new state $S' = (M'_1, \ldots, M'_5)$ obtained as follows: every $M'_i$ is obtained from $M_i$ by removing X, if present, for every $m_i$:X in del, and by adding Y for every $m_i$:Y in add. We write r(S) to denote the state obtained by applying a rule r to a state S. A rule r = ⟨prec; del; add; action; α⟩ is executable at state S iff:

- prec is true at S,
- r(S) is a safe state, and
- action is a ground atom.

³ This value is used to calculate the activation level of the rules in Sect. 3.2.
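The definitions of truth, safety, rule application and executability can be combined into a small Python sketch. Several choices are our assumptions rather than the paper's: Math is reduced to the comparisons `<` and `>`, positive literals are solved first (binding the variables) before math tests and negations are checked, the constraints C are passed separately from the state, and the encoding of literals as `(module, positive, atom)` triples is ours.

```python
import operator
from typing import Dict, Iterator, List, Set, Tuple

Atom = Tuple                      # (predicate, arg_1, ..., arg_n)
Lit = Tuple[str, bool, Atom]      # (module, positive, atom): m:X if positive, m÷X otherwise
State = Dict[str, Set[Atom]]      # module name -> set of ground atoms
MATH = {"<": operator.lt, ">": operator.gt}  # assumption: Math reduced to two comparisons


def is_var(t) -> bool:
    return isinstance(t, str) and t.startswith("?")


def subst(s: Dict, atom: Atom) -> Atom:
    return tuple(s.get(t, t) if is_var(t) else t for t in atom)


def match(pattern: Atom, fact: Atom, s: Dict):
    """Extend s so that pattern^s equals the ground fact, or return None."""
    if len(pattern) != len(fact) or pattern[0] != fact[0]:
        return None
    s = dict(s)
    for p, f in zip(pattern[1:], fact[1:]):
        p = s.get(p, p) if is_var(p) else p
        if is_var(p):
            s[p] = f
        elif p != f:
            return None
    return s


def solutions(lits: List[Lit], state: State, s: Dict) -> Iterator[Dict]:
    """Yield substitutions making the niAtomSeq true at state (positive literals solved first)."""
    pos = [lit for lit in lits if lit[1] and lit[0] != "math"]
    if pos:
        i = lits.index(pos[0])
        (m, _, a), rest = lits[i], lits[:i] + lits[i + 1:]
        for fact in state.get(m, set()):
            s2 = match(a, fact, s)
            if s2 is not None:
                yield from solutions(rest, state, s2)
        return
    for m, _, a in sorted(lits, key=lambda lit: lit[0] != "math"):  # math tests, then negations
        g = subst(s, a)
        if m == "math":
            if any(is_var(t) for t in g) or not MATH[g[0]](g[1], g[2]):
                return
        elif any(match(g, fact, {}) is not None for fact in state.get(m, set())):
            return  # m÷X fails: some instance of X belongs to M
    yield s


def safe(state: State, constraints: List[List[Lit]]) -> bool:
    """No substitution makes an integrity constraint true."""
    return not any(True for ic in constraints for _ in solutions(ic, state, {}))


def apply_rule(state: State, delete: List[Lit], add: List[Lit], s: Dict) -> State:
    """r(S): remove del atoms (if present), then add the add atoms, under substitution s."""
    new = {m: set(atoms) for m, atoms in state.items()}
    for m, _, a in delete:
        new.setdefault(m, set()).discard(subst(s, a))
    for m, _, a in add:
        new.setdefault(m, set()).add(subst(s, a))
    return new


def executable_instances(rule, state: State, constraints) -> Iterator[Tuple[Dict, Atom]]:
    """Yield (sigma, ground action) for each instance r^sigma executable at state."""
    prec, delete, add, action, _alpha = rule
    for s in solutions(prec, state, {}):
        act = subst(s, action)
        if not any(is_var(t) for t in act) and safe(apply_rule(state, delete, add, s), constraints):
            yield s, act


# <h÷on, e:temp(x), math:x<20; ε; h:on; heating(on); 0.5>
HEATING_RULE = ([("h", False, ("on",)), ("e", True, ("temp", "?x")), ("math", True, ("<", "?x", 20))],
                [], [("h", True, ("on",))], ("heating", "on"), 0.5)
```

For the example rule, a state with an empty H and the reading temp(15) in E yields exactly one executable instance, with σ = {x/15} and action heating(on); the instance disappears as soon as on is in H, the temperature reaches 20, or a constraint forbids h:on.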

An executable rule may be selected for execution. To select a rule we extend/modify the algorithm proposed in (Maes, 1989) to take variables into consideration (for reasons of space we only sketch the idea). We start by linking the rules in a network through three types of links: successor links, predecessor links, and conflicter links. Let x and y be rules.

- There is a successor link from x to y (x has y as successor) for every iAtom* m:X in the add list of x and iAtom* m:Y in the prec list of y such that X ≈ Y.
- A predecessor link from x to y exists for every successor link from y to x.
- There is a conflicter link from x to y for every iAtom* m:X in the prec list of x and iAtom* m:Y in the del list of y such that X ≈ Y.

Rules use these links to activate and inhibit each other. The state S of the behaviour network, together with the environment E, and the goals can both spread activation among the rules through the links. The basic idea is that activation energy flows from the state towards the rules whose preconditions partially match the current state (forward propagation), and from the goals towards the rules whose add lists partially match the goals (backward propagation). Furthermore, the goals that have already been achieved (protected goals) inhibit rules: they remove some activation energy from the rules that would undo them.

Besides the activation energy from the state and goals, rules also inhibit and activate each other along the links in the network. The mathematical model for computing the activation level of rules is based on the local strength α of a rule and on several global parameters that are used to tune the spreading of activation energy through the network. There is a parameter θ specifying the threshold for rules to become active, φ the amount of energy that a true proposition injects into the network, ψ the amount of energy that a goal injects into the network, and δ the amount of energy that a protected goal takes away from the network.

Let r ∈ R be a rule and σ a substitution. The rule $r^{\sigma}$ becomes active when it is executable, its level of strength exceeds θ, and its activation level is higher than the activation level of all other executable rules. Note that only one rule can become active. If several executable rules share the highest activation level, one of them is selected at random to become active. When an active rule has been executed, its activation level is reset to 0. If none of the rules becomes active, then the threshold θ is lowered by a certain factor.
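The paper only sketches how activation levels are computed, so the Python sketch below takes them as given and implements just the selection conditions stated above: executability (the candidates are assumed to be executable ground instances already), strength above θ, maximal activation among all executable instances, and random tie-breaking. The candidate format and function name are hypothetical.

```python
import random
from typing import List, Optional, Tuple

Candidate = Tuple[str, float, float]  # (rule instance, strength alpha, activation level)


def select_active(executable: List[Candidate], theta: float,
                  rng: random.Random) -> Optional[Candidate]:
    """Return the rule instance that becomes active, or None.

    A candidate becomes active if its strength exceeds theta and its activation is
    maximal among all executable instances; ties are broken at random.
    """
    if not executable:
        return None
    best = max(act for _, _, act in executable)
    eligible = [c for c in executable if c[2] == best and c[1] > theta]
    return rng.choice(eligible) if eligible else None
```

When this returns None, the engine lowers θ and tries again in the next cycle, so an instance whose strength is currently below the threshold can still become active later.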

4 MODELLING HYBRID CONTROLLERS

An adaptive, dynamic hybrid controller can be de-

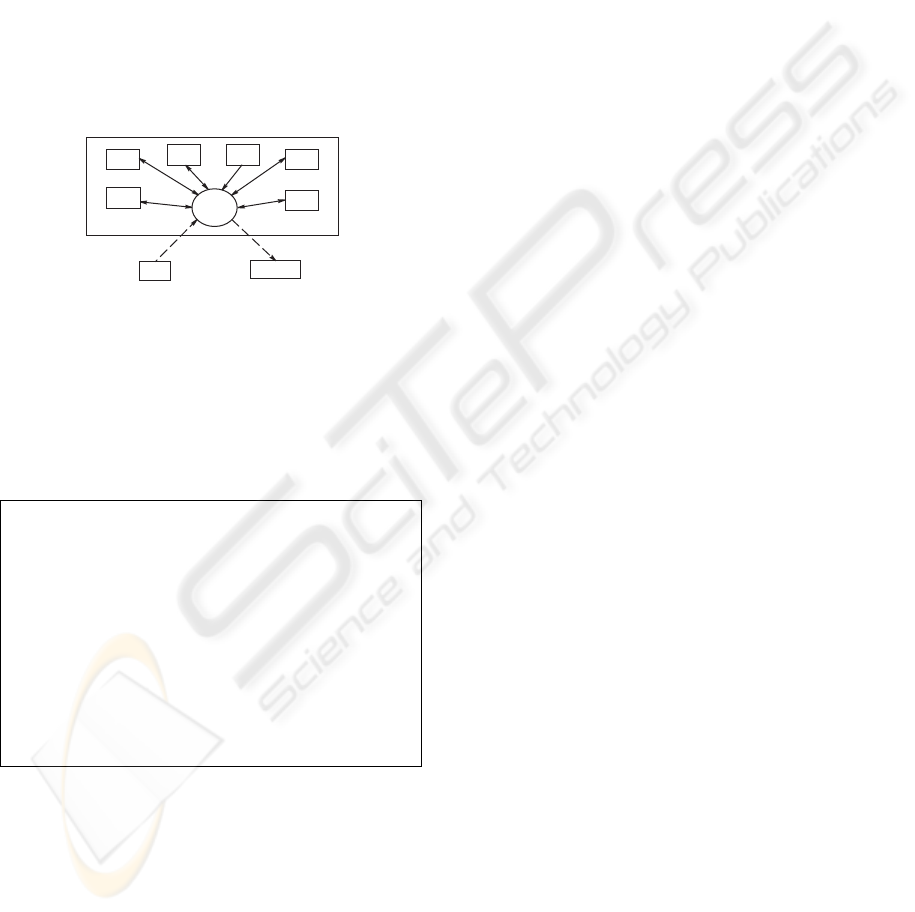

scribed by an extended behaviour network. Figure 2

illustrates an hybrid controller consisting of a control

unit (CU) and the modules R, P, H, C, G and Math.

Besides the modules, the CU of the controller is con-

nected to an external module E containing discrete

values from the environment, and to the Actuator.

CU

R

P

H

C

G

Actuator

E

Math

Figure 2: Hybrid controller.

4.1 Control Unit Engine

The basic engine of CU can be described via the following cycle:

Cycle(n, R, P, H, C, G)

1. Load the rules of R into CU and calculate their activation level wrt. the global parameters in P.
2. If one rule becomes active, then execute its internal effect and send its external effect (its action) to the actuator. Let R', P', H', C', G' be the modules after the execution of the rule. Cycle with (n+1, R', P', H', C', G').
3. If no rule becomes active, then lower the level of θ in P, and let P' be the resulting module. Cycle with (n+1, R, P', H, C, G).

Initially, given the modules R, P, H, C and G, the cycle starts with (1, R, P, H, C, G). We assume that the initial state S=(R, P, H, C, G) is safe. First, the rules of R are loaded into CU and the activation level of each rule is calculated. Then, the rule that becomes active is executed. Its internal effect changes the state of the behaviour network, and its external effect (i.e., its action) is sent to the Actuator to be executed. If no rule is active, then the controller cycles, leaving all the modules unchanged except P, whose value θ (the threshold of rule activation) is lowered.
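The three steps of the cycle can be sketched in Python as a loop in which step 1 (activation calculation and rule selection) is delegated to a caller-supplied function, since the activation model is only sketched in the paper. Representing θ as an atom ("theta", value) in P, the multiplicative lowering factor 0.9, the fixed number of cycles and all names are our assumptions; the reset of activation levels to 0 is not modelled.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

Atom = Tuple
Effect = Tuple[List[Tuple[str, Atom]], List[Tuple[str, Atom]], Atom]  # (delete, add, action)


def theta_of(P: Set[Atom]) -> float:
    return next(a[1] for a in P if a[0] == "theta")


def lower_theta(P: Set[Atom], factor: float) -> None:
    old = next(a for a in P if a[0] == "theta")
    P.discard(old)
    P.add(("theta", old[1] * factor))  # assumption: "lowered by a factor" = multiplied


def cycle(modules: Dict[str, Set[Atom]],
          select: Callable[[Dict[str, Set[Atom]]], Optional[Effect]],
          actuator: Callable[[Atom], None],
          n_max: int, factor: float = 0.9) -> Dict[str, Set[Atom]]:
    """Run Cycle(n, R, P, H, C, G) for n = 1..n_max.

    select() stands for step 1: it returns the ground effect of the active rule, or None.
    """
    for _ in range(n_max):
        effect = select(modules)
        if effect is not None:                       # step 2: internal and external effect
            delete, add, action = effect
            for m, a in delete:
                modules[m].discard(a)                # remove X, if present
            for m, a in add:
                modules[m].add(a)
            if action != ("noaction",):
                actuator(action)
        else:                                        # step 3: only P changes
            lower_theta(modules["P"], factor)
    return modules


def demo() -> Tuple[List[Atom], Dict[str, Set[Atom]]]:
    """Toy run: a single hypothetical heating rule that fires only once theta drops below 0.5."""
    sent: List[Atom] = []

    def toy_select(mods):
        if theta_of(mods["P"]) < 0.5 and ("on",) not in mods["H"]:
            return [], [("H", ("on",))], ("heating", "on")
        return None

    modules = {"R": set(), "P": {("theta", 0.6)}, "H": set(), "C": set(), "G": set()}
    return sent, cycle(modules, toy_select, sent.append, n_max=4)
```

In the toy run, θ is lowered twice (0.6, 0.54, 0.486), the rule fires in the third cycle and sends heating(on), and in the fourth cycle nothing is executable, so θ is lowered once more.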

4.2 Artificial Fish

Consider a scenario with a virtual marine world inhabited by a variety of fish. They autonomously explore their dynamic world in search of food. Hungry predator fish stalk smaller fish, which scatter in terror. Fish are situated within the environment, and sense and act over it. For simplicity, the behaviour of a fish is reduced to eating food, escaping and sleeping, and is determined by the motivation of being safe and satiated. The following extended behaviour network models an artificial fish. We employ the module E to represent the stimuli of the fish. The stimuli of hunger and fear are variables with values in the range [0, 1], with higher values indicating a stronger desire to eat or to avoid predators (Tu, 1999):

• hungry: it expresses how hungry the fish is and is approximated by
$$\mathit{hungry}(t) = \min\{1 - f(t)\,r(\Delta T)/n_\alpha,\ 1\}$$
where $f$ denotes the amount of food consumed, $\Delta T$ the time since the last meal, and $n_\alpha$ indicates the appetite of the fish;
• fear: it quantifies the fear of the fish by taking into account the distance $d(t)$ of the fish to visible predators
$$\mathit{fear}(t) = \min\{D_0/d(t),\ 1\}$$
• tired: it contains information on whether the fish is tired. It is a boolean variable that becomes T=true every 3 hours.
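The interface for the fish can be sketched as a Python function that turns the stimuli at time $t_k$ into the ground atoms of module E. Only the fear formula is implemented; the hunger level is passed in directly, and reading "tired every 3 hours" as "at least 3 hours without sleep" is our assumption, as are the function names.

```python
from typing import Set, Tuple


def fear(d: float, D0: float) -> float:
    """fear(t) = min{D0 / d(t), 1}, d being the distance to the visible predators."""
    return min(D0 / d, 1.0)


def environment_module(hunger: float, d: float, D0: float,
                       hours_awake: float) -> Set[Tuple]:
    """Ground atoms of module E at time t_k; pass d = float("inf") when no predator is visible."""
    E = {("hungry", hunger), ("fear", fear(d, D0))}
    if hours_awake >= 3:  # assumption: 'tired' holds after 3 hours without sleep
        E.add(("tired",))
    return E
```

For instance, with $D_0 = 1$ a predator at distance 4 gives a fear level of 0.25, below the 0.5 threshold used by the escape rule, while any predator closer than $D_0$ saturates the fear level at 1.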

The input vector to the controller is
$$\tilde{x}(t_k) = \begin{bmatrix} \mathit{hungry}(t_k) \\ \mathit{fear}(t_k) \\ \mathit{tired}(t_k) \end{bmatrix}$$

The actions the fish can perform are:

• searchFor(food): it searches for food when it is hungry;
• eat(food): once it has found food, it eats the food;
• sleep: when it is tired;
• escape: when it is in danger.

The module H represents the internal state of the fish. The fish can have food, can be satiated or can be safe.
$$\tilde{s}(t_k) = \begin{bmatrix} \mathit{food}(t_k) \\ \mathit{satiated}(t_k) \\ \mathit{safe}(t_k) \end{bmatrix}$$

Assume that there exist no constraints, therefore C={}. The module G is {h:safe, h:satiated}, and R consists of:

    ⟨e:hungry(x), math:x>0.5, h÷food; ε; h:food; searchFor(food); 0.5⟩
    ⟨e:hungry(x), math:x>0.5, h:food; ε; h:satiated; eat(food); 0.5⟩
    ⟨e:tired; ε; ε; sleep; 0.5⟩
    ⟨e:fear(x), math:x>0.5; ε; h:safe; escape; 0.7⟩

The first rule states that if the fish is hungry and does not have food, then it will search for it. Note that when the fish receives only one stimulus, its behaviour is completely determined. When it is hungry, it will search for food or eat, depending on whether or not it has food. Things change if the fish receives several stimuli simultaneously, e.g., tired and hungry. Then the fish has a competing alternative action, to sleep. The activation levels of the rules determine which one will become active. Suppose that the fish does not have food. In this case, it is likely that the first rule will become active, since it will receive energy from G via backward propagation. In fact, the second rule will receive energy from G since its add list partially matches G; this rule in turn will propagate energy backward to the first rule, since the add list of the first rule and the precondition of the second one both contain the iAtom h:food.

Finally, suppose that, besides the first two stimuli, the fish also receives the stimulus for fear. In this case, G cannot determine which rule will become active, since all rules (except for the third one) receive energy backward from G. To increase the chance that the last rule becomes active, one can give it more local strength.
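Which actions actually compete in the scenarios above can be checked by enumerating the executable rule instances. The Python sketch below evaluates preconditions left to right (assuming, as holds for these four rules, that variables are bound by an earlier positive literal), reduces Math to comparisons, and skips the safety test because C = {}; the encoding and names are ours, and the choice among the returned actions would still be made by the activation levels.

```python
import operator
from typing import Dict, Iterator, List, Set, Tuple

TESTS = {">": operator.gt, "<": operator.lt}  # assumption: Math reduced to comparisons


def is_var(t) -> bool:
    return isinstance(t, str) and t.startswith("?")


def subst(s: Dict, atom: Tuple) -> Tuple:
    return tuple(s.get(t, t) if is_var(t) else t for t in atom)


def match(pattern: Tuple, fact: Tuple, s: Dict):
    """Extend s so that pattern^s equals the ground fact, or return None."""
    if len(pattern) != len(fact) or pattern[0] != fact[0]:
        return None
    s = dict(s)
    for p, f in zip(pattern[1:], fact[1:]):
        p = s.get(p, p) if is_var(p) else p
        if is_var(p):
            s[p] = f
        elif p != f:
            return None
    return s


def solve(lits: List, state: Dict[str, Set[Tuple]], s: Dict) -> Iterator[Dict]:
    """Left-to-right evaluation of a precondition list (see the ordering assumption above)."""
    if not lits:
        yield s
        return
    (m, positive, atom), rest = lits[0], lits[1:]
    if m == "math":
        g = subst(s, atom)
        if TESTS[g[0]](g[1], g[2]):
            yield from solve(rest, state, s)
    elif positive:
        for fact in state.get(m, set()):
            s2 = match(atom, fact, s)
            if s2 is not None:
                yield from solve(rest, state, s2)
    elif not any(match(subst(s, atom), f, {}) is not None for f in state.get(m, set())):
        yield from solve(rest, state, s)


def executable_actions(rules, state) -> Set[Tuple]:
    """Ground actions of the executable rule instances; C = {} so every r(S) is safe."""
    actions = set()
    for prec, _delete, _add, action, _alpha in rules:
        for s in solve(prec, state, {}):
            a = subst(s, action)
            if not any(is_var(t) for t in a):
                actions.add(a)
    return actions


HUNGRY = [("e", True, ("hungry", "?x")), ("math", True, (">", "?x", 0.5))]
FISH_RULES = [
    (HUNGRY + [("h", False, ("food",))], [], [("h", True, ("food",))], ("searchFor", "food"), 0.5),
    (HUNGRY + [("h", True, ("food",))], [], [("h", True, ("satiated",))], ("eat", "food"), 0.5),
    ([("e", True, ("tired",))], [], [], ("sleep",), 0.5),
    ([("e", True, ("fear", "?x")), ("math", True, (">", "?x", 0.5))], [], [("h", True, ("safe",))], ("escape",), 0.7),
]
```

A hungry and tired fish without food thus has the two candidates searchFor(food) and sleep; with food in H the first becomes eat(food), and a fear level above 0.5 adds escape as a third candidate.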

4.3 Home Environment

Developments in home automation have long made people dream of a smart home where household devices behave in an intelligent way. Technologies have developed since the first ideas appeared, and the smart home is becoming a reality. Ambient intelligence is an area of study where the environment is aware of the presence of people and is adaptive, proactive, and responsive to their needs. Such a scenario is easy to imagine: inhabitants can ring home and program the heating to start at a given time so that they find it warm when they return. Great attention is devoted to ambient intelligence systems nowadays; in a project supported by the European Community, ISTAG,⁴ a formal definition of ambient intelligence is provided. Ambient Intelligence should provide technologies to support human interactions and to surround users with intelligent sensors and interfaces.

One of the main reasons that has prevented the implementation of ambient intelligence on a large scale is the high cost of programming. The system should be adjusted to the needs of each home, and the inhabitants are not willing to learn to program it themselves. They would simply like a system capable of responding to their needs. Many approaches have been proposed aiming at developing such systems. In (Mozer, 1998) the goal is that the home programs itself on the basis of a neural network learning method: it observes the lifestyle and desires of the inhabitants and learns to anticipate and accommodate their needs. (Hagras et al., 2004) focuses, instead, on developing learning and adaptation techniques for embedded agents. Embedded agents are capable of reasoning, planning, and learning, and they can communicate with each other. In this context each embedded agent is connected to sensors and actuators, so that it can drive its actuators on the basis of input vectors. In (Davidsson and Boman, 2000), (Rutishauser et al., 2005) different categories of agents are defined, resulting in a multi-agent system with agents working concurrently. Each category relates to applications in the environment being monitored and controlled: personal comfort, environmental parameters, and so on.

⁴ See http://www.cordis.lu/ist/istag.htm for further details.

Let us now apply the approach proposed in the previous sections to a particular case of ambient intelligence. Assume we have a hybrid control system of the kind described in Sec. 2 and represented in Fig. 1. The controller is implemented as an extended behaviour network, as defined in Sec. 3. The goal is to keep the home comfortable, with the temperature set at a desired value T, and safe, by monitoring the fire detector. If fire is detected, then the sprinkler system is activated. The water will flow through the sprinkler heads into the rooms only after the power supply has been switched off. The generator in the interface receives information from the sensors and sends the input vector to the controller with the following symbols:

• temp: room temperature at time $t_k$;
• people: detection of people at home at time $t_k$; it is a boolean variable assuming the values T=true if there are people and F=false otherwise;
• fire: fire detection at time $t_k$; it is a boolean variable assuming the values T=true if fire is detected and F=false otherwise;
• alarm: burglary alarm detection at time $t_k$; it is a boolean variable assuming the values T=true if an intruder is detected and F=false otherwise.

The input vector of the controller is given by
$$\tilde{x}(t_k) = \begin{bmatrix} \mathit{temp}(t_k) \\ \mathit{people}(t_k) \\ \mathit{fire}(t_k) \\ \mathit{alarm}(t_k) \end{bmatrix}$$

The controller will act on the heating system by switching it on/off depending on the room temperature and the desired temperature. The controller will also switch off the power supply in case of fire, and subsequently the sprinkler system will be activated. In case an intruder breaks in, the controller will switch the alarm bell on if there are people at home. Otherwise, the controller will load a library of rules that specifically handles the event (e.g., handling the call to a Security Center). The actions produced by the actuators in the interface can be summarized as:

• heating(on/off): set the voltage of the furnace control system to 12 V or 0 V;
• power(off): switch off the power supply;
• sprink(on/off): activate/deactivate the sprinkler system;
• alarmBell(on/off): activate/deactivate the burglary alarm bell.

The module H represents the state vector of the controller:
$$\tilde{s}(t_k) = \begin{bmatrix} \mathit{heat}(t_k) \\ \mathit{el}(t_k) \\ \mathit{w}(t_k) \\ \mathit{bell}(t_k) \end{bmatrix}$$
where heat indicates whether the heating is on or off, el gives information on the power supply, w on the sprinkler system, and bell on the alarm system. The state with the power supply on and the sprinkler system activated is not safe and therefore must be avoided.

Initially, it is assumed that the heating is on, the power supply is on, the sprinkler system is deactivated, and the alarm bell is off. The state at the initial time $t_0$ is
$$\tilde{s}_0 = \tilde{s}(t_0) = \begin{bmatrix} \text{h:heat(on)} \\ \text{h:el(on)} \\ \text{h}\div\text{w(on)} \\ \text{h}\div\text{bell(on)} \end{bmatrix}$$

Note that the initial state is safe. The controller behaviour is determined by the set of modules defined in Sec. 3. The integrity constraint module C contains the following sequence:

    C = {h:el(on), h:w(on)}

meaning that it is not allowed to have both the power supply on and the sprinkler system activated, as this would lead to a state that is not safe. The following rules are contained in the module R:

    ⟨h÷heat(on), e:temp(x), math:x<T, e:people; ε; h:heat(on); heating(on); 0.7⟩
    ⟨h:heat(on), e:temp(x), math:x>T; h:heat(on); ε; heating(off); ·⟩
    ⟨e:fire; ε; h:w(on); sprink(on); 0.9⟩
    ⟨e:fire; h:el(on); ε; power(off); 0.9⟩
    ⟨e÷fire, h:w(on); h:w(on); ε; sprink(off); 0.7⟩

The first two rules decide whether the heating should be switched on or off. This depends on whether the temperature measured by the sensor is smaller or greater than the desired temperature T. The last three rules, instead, decide what should be done if fire is detected. The actions to be taken in case of fire are of high importance, and therefore the third and fourth rules have a high strength level (0.9). The third rule says that if fire is detected then the sprinkler system must be activated. As long as the power supply is on, this is not allowed by the integrity constraint in C: applying the rule would lead to an unsafe state, so the rule is not executable. In this case the fourth rule is necessary, which switches off the power supply in case of fire. Finally, the fifth rule states that the sprinkler system is to be deactivated once the fire has been extinguished (fire is no longer detected).
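The interplay between the integrity constraint in C and the two fire rules can be checked mechanically. Since all literals involved are ground, truth reduces to set membership in the Python sketch below. The encoding, the names and the starting state (fire detected while heating and power supply are on) are our own illustrative choices, and only the third and fourth rules of R are included.

```python
from typing import Dict, Set, Tuple

State = Dict[str, Set[Tuple]]

# <e:fire; ε; h:w(on); sprink(on); 0.9>  and  <e:fire; h:el(on); ε; power(off); 0.9>
SPRINK_ON = ([("e", True, ("fire",))], [], [("h", True, ("w", "on"))], ("sprink", "on"), 0.9)
POWER_OFF = ([("e", True, ("fire",))], [("h", True, ("el", "on"))], [], ("power", "off"), 0.9)
C = [[("h", True, ("el", "on")), ("h", True, ("w", "on"))]]  # power on and sprinklers on


def holds(lits, state: State) -> bool:
    """Truth of a ground niAtomSeq: m:X needs X in M, m÷X needs X not in M."""
    return all((a in state.get(m, set())) == positive for m, positive, a in lits)


def apply_rule(rule, state: State) -> State:
    """r(S) for a ground rule: remove the del atoms (if present), add the add atoms."""
    _prec, delete, add, _action, _alpha = rule
    new = {m: set(atoms) for m, atoms in state.items()}
    for m, _, a in delete:
        new.setdefault(m, set()).discard(a)
    for m, _, a in add:
        new.setdefault(m, set()).add(a)
    return new


def executable(rule, state: State) -> bool:
    """prec true at S and r(S) safe (no constraint of C true); the actions here are ground."""
    return holds(rule[0], state) and not any(holds(ic, apply_rule(rule, state)) for ic in C)


def demo():
    """Illustrative run: fire detected while the power supply is still on."""
    s0 = {"h": {("heat", "on"), ("el", "on")}, "e": {("fire",)}}
    before = {r[3] for r in (SPRINK_ON, POWER_OFF) if executable(r, s0)}
    s1 = apply_rule(POWER_OFF, s0)  # power supply switched off
    after = {r[3] for r in (SPRINK_ON, POWER_OFF) if executable(r, s1)}
    return before, after
```

Initially only power(off) is executable; once the power supply is off, sprink(on) becomes executable. The fourth rule also remains executable in that state, because its precondition only mentions e:fire, so which of the two fires next is left to the activation levels.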

The following rules illustrate a situation where the controller can dynamically change its own rules in R:

    ⟨e:alarm, e:people; ε; h:alarm(on); alarmBell(on); 0.8⟩
    ⟨e:alarm, e÷people; ε; r:load(alarmLib); noaction; 0.8⟩

The last rule says to load a library of rules into R to handle a burglary alarm situation in case no one is at home. Those rules will be loaded into CU and used at the next CU cycle.

5 DISCUSSION

In this paper we have extended and modified the formalism of behaviour networks to make it suitable for modelling adaptive, dynamic, hybrid control systems. Because of space limitations, we have only sketched the mathematical model for calculating the activation level of rules to address the problem of rule selection. Currently, we are developing an implementation of the extended behaviour networks, using XSB Prolog (XSB-Prolog, 2004) to implement the part based on the unification algorithm, and Java (Java Technology, 2004) to implement the part calculating the activation level of rules. We are going to integrate them via InterProlog (InterProlog, 2004). Finally, we are going to field-test the system, in particular the model for calculating the activation level of rules, on a number of computer simulations, and to compute complexity results.

An interesting extension to the language L of the behaviour network would be to allow variables to occur in the strength levels of rules. This would allow the strength of a rule to be defined as a function of the state. Clearly, this would be computationally more expensive. Consider, for example, the following rule:

⟨h÷on,e:temp(x),math:x<20; ε; h:on; heating(on); 0.5*(20-x)⟩

This rule states that if the heating is not on and the temperature is below 20 degrees, then the heating must be switched on. The strength level of the rule depends on the current temperature x: the larger the difference 20-x, the higher the strength level of the rule, and consequently the greater the chance that the rule becomes active.
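The state-dependent strength of the heating rule can be sketched as a Python function. This is illustrative only: the condition and the strength formula are taken from the rule above, while the function name and the convention of returning `None` for a non-executable rule are our own assumptions. No normalisation is applied, so the strength exceeds 1 once x drops below 18 degrees.

```python
from typing import Optional


def heating_rule_strength(heating_on: bool, temp: float) -> Optional[float]:
    """Strength of the heating rule in the current state.

    Mirrors <h÷on, e:temp(x), math:x<20; ε; h:on; heating(on); 0.5*(20-x)>:
    returns None when the rule is not executable (heating already on, or
    x >= 20), otherwise the state-dependent strength 0.5 * (20 - x).
    """
    if heating_on or temp >= 20:
        return None
    return 0.5 * (20 - temp)
```

At 19 degrees the strength is 0.5, so a competing rule of strength 0.8 would win; at 16 degrees it rises to 2.0 and the heating rule would outweigh that competitor.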

We are also considering including preference rules in a behaviour network with the

ICINCO 2005 - INTELLIGENT CONTROL SYSTEMS AND OPTIMIZATION


aim of supporting the action selection process. Preference rules have been studied extensively in Logic Programming, on both the theoretical and the practical side. The idea is to compute all the executable rules whose activation level exceeds a given threshold, and then to use preference reasoning to select the one that becomes active (rather than simply the one with the greatest activation). This gives more flexibility, through a new candidate-threshold parameter, and an extra level of control through context-sensitive preferences. Moreover, these preferences could themselves be updated by the system (by allowing preference rules in R).
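The proposed two-stage selection (threshold filter, then preference reasoning) can be sketched in Python. The rule names, the activation values, and the representation of a context-sensitive preference as a triple (preferred rule, dispreferred rule, context predicate) are hypothetical, and the simple dominance filter below only stands in for the richer preference reasoning developed in Logic Programming.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, bool]
# (preferred rule, dispreferred rule, context in which the preference holds)
Preference = Tuple[str, str, Callable[[State], bool]]


def select_active(activations: Dict[str, float], threshold: float,
                  preferences: List[Preference], state: State) -> Optional[str]:
    """Pick the active rule among executable rules above the threshold."""
    # Stage 1: every executable rule whose activation reaches the threshold.
    candidates = {r for r, a in activations.items() if a >= threshold}
    # Stage 2: drop candidates beaten by another candidate under a
    # preference whose context holds in the current state.
    dominated = {
        worse for better, worse, holds in preferences
        if better in candidates and worse in candidates and holds(state)
    }
    # If cyclic preferences remove every candidate, fall back to all of them.
    pool = sorted(candidates - dominated) or sorted(candidates)
    if not pool:
        return None
    # Among the remaining candidates, the highest activation wins.
    return max(pool, key=lambda r: activations[r])


# Hypothetical example: prefer opening a window to air conditioning, unless it rains.
ACTIVATIONS = {"openWindow": 0.7, "airCon": 0.75, "heating": 0.2}
PREFERENCES: List[Preference] = [
    ("openWindow", "airCon", lambda s: not s["raining"]),
]
```

With a threshold of 0.5, `openWindow` is chosen when it is not raining even though `airCon` has the higher activation; when it rains, the preference no longer applies and `airCon` wins.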

Other techniques developed by the Logic Programming community could also be applied here. For example, belief revision techniques could be used to resolve conflicts between rules when more than one rule is allowed to become active (at present only one rule can become active, and consequently only one action at a time can be sent to the actuator), and rule update techniques in the spirit of EVOLP (Alferes et al., 2002) could be used as well. The generalization of the language L to full EVOLP would allow for non-deterministic evolutions (choosing one arbitrarily or according to some probability). Further, genetic algorithms could be used to tune the global parameters of the network, selecting from a population the parameter settings that yield the most effective action selection. In this way, a set of parameters can be evolved instead of being tuned by hand (see (Singleton, 2002) for a discussion).
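Parameter tuning with a genetic algorithm might look like the following Python sketch: a generational GA with elitism, one-point crossover and clipped Gaussian mutation over parameter vectors scaled to [0, 1]. Everything here is an assumption for illustration: the encoding, the GA operators and settings, and especially `toy_fitness`, a hypothetical stand-in for running the controller in a simulation and scoring the quality of its action selection.

```python
import random
from typing import Callable, List

Params = List[float]


def evolve(fitness: Callable[[Params], float], n_params: int,
           pop_size: int = 20, generations: int = 50,
           mutation_sd: float = 0.1, seed: int = 0) -> Params:
    """Generational GA with elitism over parameter vectors in [0, 1]."""
    rng = random.Random(seed)
    pop = [[rng.random() for _ in range(n_params)] for _ in range(pop_size)]
    n_elite = max(2, pop_size // 4)
    for _ in range(generations):
        elite = sorted(pop, key=fitness, reverse=True)[:n_elite]  # kept unchanged
        children: List[Params] = []
        while n_elite + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            cut = rng.randrange(1, n_params) if n_params > 1 else 0
            child = a[:cut] + b[cut:]                    # one-point crossover
            child = [min(1.0, max(0.0, g + rng.gauss(0.0, mutation_sd)))
                     for g in child]                    # clipped Gaussian mutation
            children.append(child)
        pop = elite + children
    return max(pop, key=fitness)


def toy_fitness(params: Params) -> float:
    # Hypothetical stand-in for a simulation score; best at (0.3, 0.7, 0.5).
    target = (0.3, 0.7, 0.5)
    return -sum((p - t) ** 2 for p, t in zip(params, target))


best = evolve(toy_fitness, n_params=3)
```

Because the elite is carried over unchanged, the best fitness in the population never decreases from one generation to the next; in a real setting each fitness evaluation would be a simulation run, so `pop_size` and `generations` would be chosen with that cost in mind.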

Finally, to tackle the problem of modelling very complex environments, we may design and construct networks of behaviour networks, with either a hierarchical or a distributed structure, or even behaviour networks that compete with one another to acquire control.

For example, in a scenario where we need to control a complex building consisting of several floors, we may employ a number of behaviour networks, each controlling a different apartment on each floor, and then organize them in a hierarchical network in which the behaviour networks higher up in the hierarchy supervise those at lower levels.
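One level of such a hierarchy can be sketched in Python. The sketch treats each behaviour network as a black box mapping its local state to an action and gives the supervisor a hypothetical override policy; the apartment names, the actions, and the policy itself (switch off power everywhere if fire is reported anywhere) are illustrative assumptions, not part of the proposed design.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

LocalState = Dict[str, bool]


@dataclass
class Network:
    """Stand-in for one apartment's behaviour network: local state -> action."""
    name: str
    decide: Callable[[LocalState], Optional[str]]


@dataclass
class Supervisor:
    """Higher-level network that may override the networks below it."""
    children: List[Network]
    override: Callable[[Dict[str, LocalState]], Optional[str]]

    def decide(self, states: Dict[str, LocalState]) -> Dict[str, Optional[str]]:
        forced = self.override(states)  # building-level decision, if any
        return {
            c.name: forced if forced is not None else c.decide(states[c.name])
            for c in self.children
        }


def apartment(state: LocalState) -> Optional[str]:
    # Hypothetical local policy: heat when cold, otherwise do nothing.
    return "heating(on)" if state["cold"] else None


def fire_anywhere(states: Dict[str, LocalState]) -> Optional[str]:
    # Hypothetical supervisory policy: cut power everywhere on any fire report.
    return "power(off)" if any(s["fire"] for s in states.values()) else None


floor = Supervisor([Network("1A", apartment), Network("1B", apartment)],
                   fire_anywhere)
```

Since `Supervisor.decide` returns one action per child, deeper hierarchies would need supervisors that expose the same interface as the networks they supervise.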

REFERENCES

Alferes, J. J., Brogi, A., Leite, J. A., and Pereira, L. M. (2002). Evolving logic programs. In Proceedings of the 8th European Conf. on Logics in Artificial Intelligence (JELIA’02), LNCS 2424, pp. 50–61.

Antsaklis, P. J. and Nerode, A. (1998). Hybrid control systems: An introductory discussion to the special issue. IEEE Trans. on Automatic Control, 43(4):457–460. Guest Editorial.

Brooks, R. A. (1986). A robust layered control system for a mobile robot. IEEE J. of Robotics and Automation, 2(1):14–23.

Davidsson, P. and Boman, M. (2000). A multi-agent system for controlling intelligent buildings. Proc. 4th Int. Conf. on MultiAgent Systems, pp. 377–378.

Davoren, J. M. and Nerode, A. (2000). Logic for hybrid systems. Proc. of IEEE Special Issue on Hybrid Systems, 88(7):985–1010.

Franklin, G. F., Powell, J. D., and Emami-Naeini, A. (2002). Feedback Control of Dynamic Systems. Prentice Hall.

Franklin, S. (1995). Artificial Minds. MIT Press.

Hagras, H., Callaghan, V., Colley, M., Clarke, G., Pounds-Cornish, A., and Duman, H. (2004). Creating an ambient-intelligence environment using embedded agents. IEEE Intelligent Systems and Their Applications, 19(6):12–20.

InterProlog (2004). Declarativa. Available at www.declarativa.com/InterProlog/default.htm.

Java Technology (2004). Sun Microsystems. Available at http://java.sun.com.

Koutsoukos, X. D., Antsaklis, P. J., Stiver, J. A., and Lemmon, M. D. (2000). Supervisory control of hybrid systems. Proc. of IEEE, Special Issue on Hybrid Systems, 88(7):1026–1049.

Maes, P. (1989). How to do the right thing. Connection Science Journal, Special Issue on Hybrid Systems, 1(3):291–323.

Maes, P. (1991). A bottom-up mechanism for behavior selection in an artificial creature. In Meyer, J. A. and Wilson, S. (eds.), Proc. of the first Int. Conf. on Simulation of Adaptive Behavior. MIT Press.

Minsky, M. (1986). The Society of Mind. Simon and Schuster, New York.

Mozer, M. M. (1998). The neural network house: An environment that adapts to its inhabitants. In Coen, M. (ed.), Proc. of the American Association for Artificial Intelligence Spring Symposium on Intelligent Environments, pp. 110–114.

Rutishauser, U., Joller, J., and Douglas, R. (2005). Control and learning of ambience by an intelligent building. IEEE Trans. on Systems, Man and Cybernetics, Part A, 35(1):121–132. Special Issue on Ambient Intelligence.

Singleton, D. (2002). An Evolvable Approach to the Maes Action Selection Mechanism. Master Thesis, University of Sussex. Available at http://www.informatics.susx.ac.uk/easy/Publications.

Tu, X. (1999). Artificial Animals for Computer Animation: Biomechanics, Locomotion, Perception, and Behavior. PhD thesis, ACM Distinguished Ph.D Dissertation Series, LNCS 1635.

van Beek, B., Jansen, N. G., Schiffelers, R. R. H., Man, K. L., and Reniers, M. A. (2003). Relating chi to hybrid automata. In Chick, S., Sánchez, P. J., Ferrin, D., and Morrice, D. (eds.), Proc. of the 2003 Winter Simulation Conference, pp. 632–640.

XSB-Prolog (2004). XSB Inc. Available at xsb.sourceforge.net.

