FIELD GEOLOGY WITH A WEARABLE COMPUTER:

FIRST RESULTS OF THE CYBORG ASTROBIOLOGIST SYSTEM

Patrick C. McGuire∗, Javier Gómez-Elvira, José Antonio Rodríguez-Manfredi, Eduardo Sebastián-Martínez

Robotics & Planetary Exploration Laboratory, Centro de Astrobiología (INTA/CSIC), Instituto Nacional Técnica Aeroespacial

Jens Ormö, Enrique Díaz-Martínez†

Planetary Geology Laboratory, Centro de Astrobiología (INTA/CSIC), Instituto Nacional Técnica Aeroespacial

Markus Oesker, Robert Haschke, Jörg Ontrup, Helge Ritter

Neuroinformatics Group, Computer Science Department, Technische Fakultät, University of Bielefeld

Bielefeld, Germany

Keywords:

computer vision, image segmentation, interest map, field geology on Mars, wearable computers.

Abstract:

We present results from the first geological field tests of the ‘Cyborg Astrobiologist’, a wearable computer and video camcorder system that we are using to test and train a computer-vision system towards having some of the autonomous decision-making capabilities of a field geologist. The Cyborg Astrobiologist platform has thus far been used for testing and development of these algorithms and systems: robotic acquisition of quasi-mosaics of images, real-time image segmentation, and real-time determination of interesting points in the image mosaics. This work is more a test of the whole system than of any one part of it. However, beyond the concept of the system itself, the uncommon map (despite its simplicity) is the main innovative part of the system. The uncommon map helps to determine interest points in a context-free manner. Overall, the hardware and software systems function reliably, and the computer-vision algorithms are adequate for the first field tests. In addition to the proof-of-concept aspect of these field tests, their main result is the enumeration of those issues that we can improve in the future, including dealing with structural shadow and microtexture, and controlling the camera’s zoom lens in an intelligent manner. Nonetheless, despite these and other technical inadequacies, this Cyborg Astrobiologist system, consisting of a camera-equipped wearable computer and its computer-vision algorithms, has demonstrated its ability to find genuinely interesting points in real time in the geological scenery, and then to gather more information about these interest points in an automated manner. We use these capabilities for autonomous guidance towards geological points-of-interest.

1 INTRODUCTION

Outside of the Mars robotics community, it is com-

monly presumed that the robotic rovers on Mars are

controlled in a time-delayed joystick manner, wherein

commands are sent to the rovers several if not many

times per day, as new information is acquired from

the rovers’ sensors. However, inside the Mars robotics community, researchers have learned that such a brute-force joystick-control process is rather cumbersome, and they have developed much more elegant methods for robotic control of the rovers on Mars, with highly significant degrees of robotic autonomy.

∗ New address (after October 3, 2005): McDonnell Center for the Space Sciences; Department of Earth & Planetary Sciences, and the Department of Physics; Washington University; Campus Box 1169; 1 Brookings Dr.; Saint Louis, MO 63130-4862, USA

† Currently at: Dirección de Geología y Geofísica; Instituto Geológico y Minero de España; Calera 1; Tres Cantos, Madrid, Spain 28760

Particularly, the Mars Exploration Rover (MER) team has demonstrated autonomy for the two robotic rovers Spirit & Opportunity to the level that practically all commands for a given Martian day (1 ‘sol’ = 24.6 hours) are delivered to each rover from Earth before the robot wakens from its power-conserving nighttime resting mode (Crisp et al., 2003; Squyres et al., 2004). Each rover then follows the commanded sequence of moves for the entire sol, moving to desired locations, articulating its arm with its sensors to desired points in the workspace of the robot, and acquiring data from the cameras and chemical sensors. From an outsider’s point of view, these capabilities may not seem to be significantly autonomous, in that all the commands are being sent from Earth, and the MER rovers are merely executing those commands. But the following facts/feats deserve emphasis before judgement is made of the quality of the MER autonomy: each robot is on another planet with a complex surface to navigate and study; and all of the complex command sequence is sent to the robot the previous night for autonomous operation the next day. Sophisticated software and control systems are also part of the system, including the MER autonomous obstacle avoidance system and the MER visual odometry & localization software.³ One should remember that there is a large team of human roboticists and geologists working here on the Earth in support of the MER missions, to determine science targets and robotic command sequences on a daily basis; after the sun sets for an MER rover, the rover mission team can determine the science priorities and the command sequence for the next sol in less than 4-5 hours.⁴

McGuire P. C., Gómez-Elvira J., Rodríguez-Manfredi J. A., Sebastián-Martínez E., Ormö J., Díaz-Martínez E., Oesker M., Haschke R., Ontrup J. and Ritter H. (2005). FIELD GEOLOGY WITH A WEARABLE COMPUTER: FIRST RESULTS OF THE CYBORG ASTROBIOLOGIST SYSTEM. In Proceedings of the Second International Conference on Informatics in Control, Automation and Robotics - Robotics and Automation, pages 283-291. DOI: 10.5220/0001161002830291. Copyright © SciTePress

4

One future mission deserves special discussion for

the technology developments described in this paper:

the Mars Science Laboratory, planned for launch in

2009 (MSL’2009). A particular capability desired

for this MSL’2009 mission will be to rapidly traverse

to up to three geologically-different scientific points-

of-interest within the landing ellipse. These three

geologically-different sites will be chosen from Earth

by analysis of relevant satellite imagery. Possible de-

sired maximal traversal rates could range from 300-

2000 meters/sol in order to reach each of the three

points-of-interest in the landing ellipse in minimum

time.

Given these substantial expected traversal rates of the MSL’2009 rover, autonomous obstacle avoidance (Goldberg et al., 2002) and autonomous visual odometry & localization (Olson et al., 2003) will be essential to achieve these rates, since otherwise, rover damage and slow science-target approach would be the results. Given such autonomy in the rapid traverses, it behooves us to endow the autonomous rover with sufficient scientific responsibility. Otherwise, the robotic rover exploration system might drive right past an important scientific target-of-opportunity along the way to the human-chosen scientific point-of-interest. Crawford and Tamppari (2002) and their NASA/Ames team summarize possible ‘autonomous traverse science’, in which every 20-30 meters during a 300 meter traverse (in their example), science pancam and Mini-TES (Thermal Emission Spectrometer) image mosaics are autonomously obtained. They state that “there may be onboard analysis of the science data from the pancam and the mini-TES, which compares this data to predefined signatures of carbonates or other targets of interest. If detected, traverse may be halted and information relayed back to Earth.” This onboard analysis of the science data is precisely the technology issue that we have been working towards solving. This paper is the first report to the general robotics community describing our progress towards giving a robotic astrobiologist some aspects of autonomous recognition of scientific targets-of-opportunity. This technology development may be neither sufficiently mature nor sufficiently necessary for deployment on the MSL’2009 mission, but it should find utility in missions beyond MSL’2009.

³ This visual odometry and localization software was added to the systems after the rovers had been on Mars for several months (Squyres, 2004).

⁴ Right after landing, this command sequencing took about 17 hours (Squyres, 2004).

Before proceeding, we first note here two of the related efforts in the development of autonomous recognition of scientific targets-of-opportunity for astrobiological exploration: firstly, the work on developing a Nomad robot to search for meteorites in Antarctica led by the Carnegie Mellon University Robotics Institute (Apostolopoulos et al., 2000; Pedersen, 2001), and secondly, the work by a group at NASA/Ames on developing a Geological Field Assistant (GFA) (Gulick et al., 2001; Gulick et al., 2002; Gulick et al., 2004). From an algorithmic point-of-view, the uncommon-mapping technique presented in this paper attempts to identify interest points in a context-free, unbiased manner. In related work, Heidemann (2004) has studied the use of spatial symmetry of color pixel values to identify focus points in a context-free, unbiased manner.

Figure 1: Díaz Martínez & McGuire with the Cyborg Astrobiologist System on 3 March 2004, 10 meters from the outcrop cliff that is being studied during the first geological field mission near Rivas Vaciamadrid. We are taking notes prior to acquiring one of our last-of-the-day mosaics and its set of interest-point image chips. This is the tripod position #2 shown in Fig. 6, nearest the cliffs.

ICINCO 2005 - ROBOTICS AND AUTOMATION


2 THE CYBORG GEOLOGIST &

ASTROBIOLOGIST SYSTEM

Our ongoing effort in this area of autonomous recog-

nition of scientific targets-of-opportunity for field ge-

ology and field astrobiology is beginning to mature as

well. To date, we have developed and field-tested a

GFA-like “Cyborg Astrobiologist” system (McGuire

et al., 2004a; McGuire et al., 2004b; McGuire et al.,

2005a; McGuire et al., 2005b) that now can:

• Use human mobility to maneuver to and within a ge-

ological site and to follow suggestions from the com-

puter as to how to approach a geological outcrop;

• Use a portable robotic camera system to obtain a

mosaic of color images;

• Use a ‘wearable’ computer to search in real-time for

the most uncommon regions of these mosaic images;

• Use the robotic camera system to re-point at several

of the most uncommon areas of the mosaic images, in

order to obtain much more detailed information about

these ‘interesting’ uncommon areas;

• Use human intelligence to choose between the wear-

able computer’s different options for interesting areas

in the panorama for closer approach; and

• Repeat the process as often as desired, sometimes

retracing a step of geological approach.

In the Mars Exploration Workshop in Madrid in

November 2003, we demonstrated some of the early

capabilities of our ‘Cyborg’ Geologist/Astrobiologist

System (McGuire et al., 2004b). We have been us-

ing this Cyborg system as a platform to develop

computer-vision algorithms for recognizing interest-

ing geological and astrobiological features, and for

testing these algorithms in the field here on the Earth.

The half-human/half-machine ‘Cyborg’ approach

(Fig. 1) uses human locomotion and human-geologist

intuition/intelligence for taking the computer-vision algorithms to the field for teaching and testing, using

a wearable computer. This is advantageous because

we can therefore concentrate on developing the ‘sci-

entific’ aspects for autonomous discovery of features

in computer imagery, as opposed to the more ‘engi-

neering’ aspects of using computer vision to guide

the locomotion of a robot through treacherous terrain.

This means the development of the scientific vision

system for the robot is effectively decoupled from the

development of the locomotion system for the robot.

After the maturation and optimization of the

computer-vision algorithms, we hope to transplant

these algorithms from the Cyborg computer to the on-

board computer of a semi-autonomous robot that will

be bound for Mars or one of the interesting moons

in our solar system. Field tests of such a robot have already begun with the Cyborg Astrobiologist’s software for scientific autonomy. Our software has been delivered to the robotic borehole inspection system of the MARTE project.⁵

Figure 2: An image segmentation made by human geologist Díaz Martínez of the outcrop during the first mission to Rivas Vaciamadrid. Region 1 has a tan color and a blocky texture; Region 2 is subdivided by a vertical fault and has more red color and a more layered texture than Region 1; Region 3 is dominated by white and tan layering; and Region 4 is covered by vegetation. The dark & wet spots in Region 3 were only observed during the second mission, 3 months later. The Cyborg Geologist/Astrobiologist made its own image segmentations for portions of the cliff face that included the area of the white layering at the bottom of the cliff (Fig. 7).

Both of the field geologists on our team, Díaz Martínez and Ormö, have independently stressed the

importance to field geologists of geological ‘contacts’

and the differences between the geological units that

are separated by the geological contact. For this rea-

son, in March 2003, we decided that the most impor-

tant tool to develop for the beginning of our computer

vision algorithm development was that of ‘image seg-

mentation’. Such image segmentation algorithms

would allow the computer to break down a panoramic

image into different regions (Fig. 2 for an example),

based upon similarity, and to find the boundaries or

contacts between the different regions in the image,

based upon difference. Much of the remainder of this

paper discusses the first geological field trials with

the wearable computer of the segmentation algorithm

and the associated uncommon map algorithm that we

have implemented and developed. In the near future,

we hope to use the Cyborg Astrobiologist system to

test more advanced image-segmentation algorithms,

capable of simultaneous color and texture image segmentation (Freixenet et al., 2004), as well as novelty-detection algorithms (Bogacz et al., 1999).

⁵ MARTE is a practice mission in the summer of 2005 for tele-operated robotic drilling and tele-operated scientific studies in a Mars-like environment near the Rio Tinto, in Andalucia in southern Spain.

2.1 Image Segmentation,

Uncommon Maps, Interest

Maps, and Interest Points

With human vision, a geologist:

• Firstly, tends to pay attention to those areas of a

scene which are most unlike the other areas of the

scene; and then,

• Secondly, attempts to find the relation between the

different areas of the scene, in order to understand the

geological history of the outcrop.

The first step in this prototypical thought process

of a geologist was our motivation for inventing the

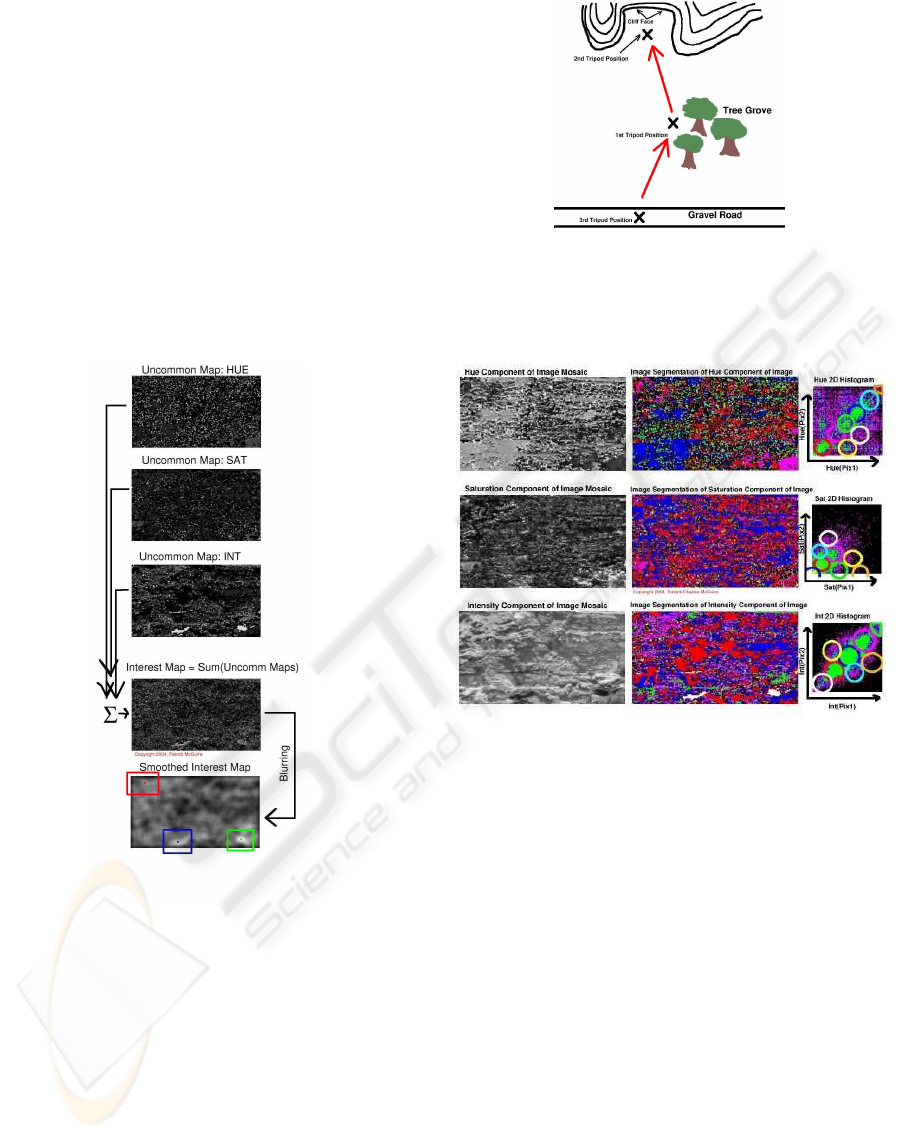

concept of uncommon maps. See Fig. 3 for a sim-

ple illustration of the concept of an uncommon map.

We have not yet attempted to solve the second step in

this prototypical thought process of a geologist, but

it is evident from the formulation of the second step,

that human geologists do not immediately ignore the

common areas of the scene. Instead, human geolo-

gists catalog the common areas and put them in the

back of their minds for “higher-level analysis of the

scene”, or in other words, for determining explana-

tions for the relations of the uncommon areas of the

scene with the common areas of the scene.

Figure 3: For the simple, idealized image on the left, we

show the corresponding uncommon map on the right. The

whiter areas in the uncommon map are more uncommon

than the darker areas in this map.

Prior to implementing the ‘uncommon map’, the

first step of the prototypical geologist’s thought

process, we needed a segmentation algorithm, in or-

der to produce pixel-class maps to serve as input to

the uncommon map algorithm. We have implemented

the classic co-occurrence histogram algorithm (Haral-

ick et al., 1973; Haddon and Boyce, 1990). For this

work, we have not included texture information in ei-

ther the segmentation algorithm or in the uncommon

map algorithm. Currently, each of the three bands of

HSI color information is segmented separately, and

later merged in the interest map by summing three

independent uncommon maps. In future work, advanced image-segmentation algorithms that simultaneously use color & texture could be developed for and tested on the Cyborg Astrobiologist System (e.g., the algorithms of Freixenet et al., 2004).
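As a rough illustration of the two-dimensional histogramming that underlies the co-occurrence approach, the following Python sketch builds a co-occurrence histogram for one quantized image band. The function name, the choice of right-hand and lower neighbors, and the number of quantization levels are assumptions made for illustration only; the later stages of the segmentation (finding histogram peaks and labelling pixels) are not shown, and this is not the code used in the field.

```python
def cooccurrence_histogram(band, levels):
    """Count how often value a occurs next to value b in one image band.

    band   : list of rows, each a list of ints in range(levels)
    levels : number of quantization levels (an assumed parameter)
    Pairs are formed with the right-hand and lower neighbors and counted
    symmetrically, so hist[a][b] == hist[b][a].
    """
    hist = [[0] * levels for _ in range(levels)]
    rows, cols = len(band), len(band[0])
    for r in range(rows):
        for c in range(cols):
            a = band[r][c]
            for dr, dc in ((0, 1), (1, 0)):  # right and down neighbors
                rr, cc = r + dr, c + dc
                if rr < rows and cc < cols:
                    b = band[rr][cc]
                    hist[a][b] += 1
                    hist[b][a] += 1
    return hist


# Toy 3x3 band with three quantized values.
toy_band = [
    [0, 0, 1],
    [0, 0, 1],
    [2, 2, 1],
]
toy_hist = cooccurrence_histogram(toy_band, levels=3)
```

In such a histogram, homogeneous regions contribute counts on or near the diagonal, while boundaries between regions contribute off-diagonal counts.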

The concept of an ‘uncommon map’ is our inven-

tion, though it probably has been independently in-

vented by other authors, since it is somewhat use-

ful. In our implementation, the uncommon map algo-

rithm takes the top 8 pixel classes determined by the

image segmentation algorithm, and ranks each pixel

class according to how many pixels there are in each

class. The pixels in the pixel class with the greatest

number of pixel members are numerically labelled as

‘common’, and the pixels in the pixel class with the least number of pixel members are numerically labelled as ‘uncommon’. The ‘uncommonness’ hence ranges from 1 for a common pixel to 8 for an uncommon pixel, and we can therefore construct an uncommon map given any image segmentation map. In our

work, we construct several uncommon maps from the

color image mosaic, and then we sum these uncom-

mon maps together, in order to arrive at a final interest

map.
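The ranking described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the field implementation: the function names are hypothetical, pixels that fall outside the top 8 classes are given a score of 0, and ties in class size are broken by order of first appearance.

```python
from collections import Counter


def uncommon_map(class_map, top_k=8):
    """Rank pixel classes by population and label pixels by rarity.

    class_map : list of rows of hashable class labels from a segmentation
    top_k     : number of most-populous classes to rank (8 in the text)
    Returns a map of the same shape: 1 for the most common class, rising
    by one per rank to the least populous of the ranked classes. Pixels
    outside the top_k classes get 0 (an assumption of this sketch).
    """
    counts = Counter(label for row in class_map for label in row)
    ranked = [label for label, _ in counts.most_common(top_k)]
    score = {label: rank + 1 for rank, label in enumerate(ranked)}
    return [[score.get(label, 0) for label in row] for row in class_map]


def interest_map(uncommon_maps):
    """Sum several uncommon maps (e.g. for H, S and I) pixel by pixel."""
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*uncommon_maps)]


# Two toy segmentations of the same 3x3 scene.
seg_a = [["a", "a", "a"], ["a", "b", "b"], ["a", "a", "c"]]
seg_b = [["x", "x", "x"], ["x", "x", "x"], ["x", "x", "y"]]
toy_interest = interest_map([uncommon_map(seg_a), uncommon_map(seg_b)])
```

With fewer than 8 classes present, as in the toy data, the largest uncommonness value is simply the number of classes found.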

In this paper, we develop and test a simple, high-

level concept of interest points of an image, which is

based upon finding the centroids of the smallest (most

uncommon) regions of the image. Such a ‘global’

high-level concept of interest points differs from the

lower-level ‘local’ concept of Förstner interest points based upon corners and centers of circular features.

However, this latter technique with local interest

points is used by the MER team for their stereo-vision

image matching and for their visual-odometry and

visual-localization image matching (Goldberg et al.,

2002; Olson et al., 2003; Nesnas et al., 1999). Our

interest point method bears somewhat more relation

to the higher-level wavelet-based salient points tech-

nique (Sebe et al., 2003), in that they search first

at coarse resolution for the image regions with the

largest gradient, and then they use wavelets in order

to zoom in towards the salient point within that region

that has the highest gradient. Their salient point tech-

nique is edge-based, whereas our interest point is cur-

rently region-based. Since in the long-term, we have

an interest in geological contacts, this edge-based &

wavelet-based salient point technique could be a rea-

sonable future interest-point algorithm to incorporate

into our Cyborg Astrobiologist system for testing.

2.2 Hardware & Software for the

Cyborg Astrobiologist

The non-human hardware of the Cyborg Astrobiolo-

gist system consists of:

• a 667 MHz wearable computer (from ViA Com-

puter Systems) with a ‘power-saving’ Transmeta

‘Crusoe’ CPU and 112 MB of physical memory,

• an SV-6 Head Mounted VGA Display (from Tekgear, via the Spanish supplier Decom) that works well in bright sunlight,

• a SONY ‘Handycam’ color video camera (model DCR-TRV620E-PAL), with a Firewire/IEEE1394 cable to the computer,

• a thumb-operated USB finger trackball from 3G

Green Green Globe Co., resupplied by ViA Computer

Systems, and by Decom,

• a small keyboard attached to the human’s arm,

• a tripod for the camera, and

• a Pan-Tilt Unit (model PTU-46-70W) from Directed

Perception with a bag of associated power and signal

converters.

The wearable computer processes the images ac-

quired by the color digital video camera, to compute

a map of interesting areas. The computations include:

simple mosaicking by image-butting, as well as two-

dimensional histogramming for image segmentation

(Haralick et al., 1973; Haddon and Boyce, 1990).

This image segmentation is independently computed

for each of the Hue, Saturation, and Intensity (H,S,I)

image planes, resulting in three different image-

segmentation maps. These image-segmentation maps

were used to compute ‘uncommon’ maps (one for

each of the three (H,S,I) image-segmentation maps):

each of the three resulting uncommon maps gives

highest weight to those regions of smallest area for

the respective (H,S,I) image planes. Finally, the three

(H,S,I) uncommon maps are added together into an

interest map, which is used by the Cyborg system for

subsequent interest-guided pointing of the camera.
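The text does not state which RGB-to-HSI conversion was used before the three planes are segmented. Purely as an illustration, the Python sketch below uses one common textbook formulation; the function name and the conventions for black and gray pixels are assumptions.

```python
import math


def rgb_to_hsi(r, g, b):
    """Convert one RGB pixel (components in 0..1) to (hue_deg, sat, intensity).

    Uses a common textbook HSI definition; this is an assumed choice,
    not necessarily the conversion used in the Cyborg system.
    """
    intensity = (r + g + b) / 3.0
    if intensity == 0.0:
        return 0.0, 0.0, 0.0  # black: hue and saturation set to 0
    saturation = 1.0 - min(r, g, b) / intensity
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0.0:
        hue = 0.0  # gray pixel: hue is undefined, 0 by convention here
    else:
        # Clamp to guard against rounding slightly outside [-1, 1].
        hue = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
        if b > g:
            hue = 360.0 - hue
    return hue, saturation, intensity
```

Each of the three returned components would then form one image plane to be segmented independently.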

After segmenting the mosaic image (Fig. 7), it be-

comes obvious that a very simple method to find in-

teresting regions in an image is to look for those re-

gions in the image that have a significant number of

uncommon pixels. We accomplish this by (Fig. 5):

first, creating an uncommon map based upon a linear

reversal of the segment area ranking; second, adding

the 3 uncommon maps (for H, S, & I ) together to

form an interest map; and third, blurring this interest map.⁶

Based upon the three largest peaks in the

blurred/smoothed interest map, the Cyborg system

then guides the Pan-Tilt Unit to point the camera

at each of these three positions to acquire high-

resolution color images of the three interest points

(Fig. 4). By extending a simple image-acquisition

and image-processing system to include robotic and

mosaicking elements, we were able to conclusively

demonstrate that the system can make reasonable de-

cisions by itself in the field for robotic pointing of the

camera.

⁶ With a Gaussian smoothing kernel of width B = 10 pixels.
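The smoothing and peak-selection steps can be sketched as follows in plain Python. Several details here are assumptions rather than facts from the field system: the width B is treated as the Gaussian standard deviation, the kernel is truncated at three standard deviations, borders are handled by clamping, and peaks are kept apart by a hypothetical `min_separation` parameter. A practical implementation would use an optimized image library instead of nested lists.

```python
import math


def gaussian_kernel(sigma):
    """1-D normalized Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-0.5 * (i / sigma) ** 2)
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(image, kernel):
    """Convolve each row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    return [
        [sum(k * row[min(max(c + i - radius, 0), width - 1)]
             for i, k in enumerate(kernel))
         for c in range(width)]
        for row in image
    ]


def transpose(image):
    return [list(col) for col in zip(*image)]


def gaussian_blur(image, sigma):
    """Separable 2-D Gaussian blur: rows first, then columns."""
    kernel = gaussian_kernel(sigma)
    return transpose(blur_rows(transpose(blur_rows(image, kernel)), kernel))


def top_peaks(image, count=3, min_separation=2):
    """Pick the `count` highest pixels as (row, col), skipping any pixel
    closer than min_separation (Chebyshev distance) to a chosen peak."""
    ranked = sorted(
        ((v, r, c) for r, row in enumerate(image) for c, v in enumerate(row)),
        key=lambda t: -t[0],
    )
    peaks = []
    for _, r, c in ranked:
        if all(max(abs(r - pr), abs(c - pc)) >= min_separation
               for pr, pc in peaks):
            peaks.append((r, c))
            if len(peaks) == count:
                break
    return peaks
```

The returned (row, column) positions are the points at which the Pan-Tilt Unit would be asked to re-point the camera; the conversion from pixel position to pan and tilt angles depends on the camera geometry and is not sketched here.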

3 DESCRIPTIVE SUMMARIES

OF THE FIELD SITE AND OF

THE EXPEDITIONS

On March 3rd and June 11th, 2004, three of the

authors, McGuire, Díaz Martínez & Ormö, tested

the “Cyborg Astrobiologist” system for the first time

at a geological site, the gypsum-bearing southward-

facing stratified cliffs near the “El Campillo” lake of

Madrid’s Southeast Regional Park, outside the suburb

of Rivas Vaciamadrid. Due to the significant storms in

the 3 months between the two missions, there were 2

dark & wet areas in the gypsum cliffs that were visible

only during the second mission. In Fig. 2, we show

the segmentation of the outcrop (during the first mission), according to the human geologist, Díaz Martínez, for reference.

The computer was worn on McGuire’s belt, and

typically took 3-5 minutes to acquire and compose a

mosaic image composed of M × N subimages. Typ-

ical values of M × N used were 3 × 9 and 11 × 4.

The sub-images were downsampled in both directions

by a factor of 4-8 during these tests; the original sub-

image dimensions were 360 × 288.
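To make the mosaic sizes concrete, the sketch below shows mosaicking by simple image-butting together with the resulting pixel dimensions. It assumes that downsampling is plain decimation (keeping every k-th pixel) and that the grid is given explicitly as rows and columns; neither detail is specified in the text, and the function names are hypothetical.

```python
def downsample(image, factor):
    """Keep every `factor`-th pixel in both directions (simple decimation)."""
    return [row[::factor] for row in image[::factor]]


def butt_mosaic(tiles):
    """Join a grid of equally sized tiles edge to edge, with no blending.

    tiles : list of tile-rows; each tile is a list of pixel rows
    """
    mosaic = []
    for tile_row in tiles:
        for pixel_rows in zip(*tile_row):
            mosaic.append([p for part in pixel_rows for p in part])
    return mosaic


def mosaic_shape(grid_rows, grid_cols, tile_w=360, tile_h=288, factor=4):
    """Mosaic (width, height) in pixels after decimating each tile."""
    w = len(range(0, tile_w, factor))
    h = len(range(0, tile_h, factor))
    return grid_cols * w, grid_rows * h
```

Under these assumptions, a grid of 3 rows by 4 columns of 360 × 288 sub-images downsampled by a factor of 4 gives a mosaic of 360 × 216 pixels, and a factor of 8 gives 180 × 108 pixels.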

Several mosaics were acquired of the cliff face

from a distance of about 300 meters, and the com-

puter automatically determined the three most inter-

esting points in each mosaic. Then, the wearable

computer automatically repointed the camera towards

each of the three interest points, in order to acquire

non-downsampled color images of the region around

each interest point in the image. All the original

mosaics, all the derived mosaics and all the interest-

point subimages were then saved to hard disk for post-

mission study.

Two other tripod positions were chosen for acquir-

ing mosaics and interest-point image-chip sets. At

each of the three tripod positions, 2-3 mosaic images

and interest-point image-chip sets were acquired. One

of the chosen tripod locations was about 60 meters

from the cliff’s face; the other was about 10 meters

(Fig. 1) from the cliff face.

During the 2nd mission at distances of 300 meters and 60 meters, the system most often determined the wet spots (Fig. 4) to be the most interesting regions on the cliff face. This was encouraging to us, because we also found these wet spots to be the most interesting regions.⁷

⁷ These dark & wet regions were interesting to us partly because they give information about the development of the outcrop. Even if the relatively small spots were only dark, and not wet (e.g., dark dolerite blocks, or a brecciated basalt), their uniqueness in the otherwise white & tan outcrop would have drawn our immediate attention. Additionally, even if this had been our first trip to the site, and if the dark spots had been present during this first trip, these dark regions would have captured our attention for the same reasons. The fact that these dark spots had appeared after our first trip and before the second trip was not of paramount importance to grab our interest (but the ‘sudden’ appearance of the dark spots between the two missions did arouse our higher-order curiosity).

After the tripod position at 60 meters distance, we chose the next tripod position to be about 10 meters from the cliff face (Fig. 1). During this ‘close-up’ study of the cliff face, we intended to focus the Cyborg Astrobiologist exploration system upon the two points that it found most interesting when it was in the more distant tree grove, namely the two wet and dark regions of the lower part of the cliff face. By moving from 60 meters distance to 10 meters distance and by focusing at the closer distance on the interest points determined at the larger distance, we wished to simulate how a truly autonomous robotic system would approach the cliff face (see the map in Fig. 6). Unfortunately, due to a combination of a lack of human foresight in the choice of tripod position and a lack of more advanced software algorithms to mask out the surrounding & less interesting region (see discussion in Section 4), for one of the two dark spots, the Cyborg system only found interesting points on the undarkened periphery of the dark & wet stains. Furthermore, the other dark spot was spatially complex, being subdivided into several regions, with some green and brown foliage covering part of the mosaic. Therefore, in both close-up cases the value of the interest mapping is debatable. This interest mapping could be improved in the future, as we discuss in Section 4.2.

4 RESULTS

4.1 Results from the First Geological

Field Test

As first observed during the first mission to Rivas on March 3rd, the southward-facing cliffs at Rivas Vaciamadrid consist of mostly tan-colored surfaces, with some white veins or layers, and with significant shadow-causing three-dimensional structure. The computer vision algorithms performed adequately for a first visit to a geological site, but they need to be improved in the future. As decided at the end of the first mission by the mission team, the improvements include: shadow-detection and shadow-interpretation algorithms, and segmentation of the images based upon microtexture.

In the last case, we concluded that, due to the very monochromatic & slightly-shadowy nature of the imagery, the Cortical Interest Map algorithm non-intuitively decided to concentrate its interest on differences in intensity, and it tended to ignore hue and saturation.

After the first geological field test, we spent sev-

eral months studying the imagery obtained during this

mission, and fixing various further problems that were

only discovered after the first mission. Though we

had hoped that the first mission to Rivas would have

been more like a science mission, in reality it was

more of an engineering mission.

4.2 Results from the Second

Geological Field Test

In Fig. 4, from the tree grove at a distance of 60 me-

ters, the Cyborg Astrobiologist system found the dark

& wet spot on the right side to be the most interest-

ing, the dark & wet spot on the left side to be the

second most interesting, and the small dark shadow

in the upper left hand corner to be the 3rd most in-

teresting. For the first two interest points (the dark &

wet spots), it is apparent from the uncommon map for

intensity pixels in Fig. 5 that these points are interest-

ing due to their relatively remarkable intensity values.

By inspection of Fig. 7, we see that these pixels which

reside in the white segment of the intensity segmenta-

tion mosaic are unusual because they are a cluster of

very dim pixels (relative to the brighter red, blue and

green segments). Within the dark wet spots, we ob-

serve that these particular points in the white segment

of the intensity segmentation in Fig. 7 are interesting

because they reside in the shadowy areas of the dark

& wet spots. We interpret the interest in the 3rd in-

terest point to be due to the juxtaposition of the small

green plant with the shadowing in this region; the in-

terest in this point is significantly smaller than for the

2 other interest points.

More advanced software could be developed to

better handle the close-up real-time interest-map

analysis of the imagery acquired at the close-up tripod

position (10 meter distance from the cliff; not shown

here). Here are some options to be included in such

software development:

• Add hardware & software to the Cyborg Astrobiolo-

gist so that it can make intelligent use of its zoom lens.

We plan to use the camera’s LANC communication

interface to control the zoom lens with the wearable

computer. With such software for intelligent zoom-

ing, the system could have corrected the human’s mis-

take in tripod placement and decided to zoom further

in, to focus only on the shadowy part of the dark &

wet spot (which was determined to be the most inter-

esting point at a distance of 60 meters), rather than the

periphery of the entire dark & wet spot.

• Enhance the Cyborg Astrobiologist system so that it has a memory of the image segmentations performed at a greater distance or at a lower magnification of the zoom lens. Then, when moving to a closer tripod position or a higher level of zoom-magnification, register the new imagery or the new segmentation maps with the coarser resolution imagery and segmentation maps. Finally, tell the system to mask out or ignore or deemphasize those parts of the higher resolution imagery which were part of the low-interest segments of the coarser, more distant segmentation maps, so that it concentrates on those features that it determined to be interesting at coarse resolution and greater distance.
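The masking idea in the second option above could look roughly like the following Python sketch. It is only an illustration of a proposal that has not been implemented: it assumes the registration step is already done, that the coarse and fine maps cover the same area and differ by an integer scale factor, and that a simple threshold separates low-interest from high-interest segments. All names and parameters are hypothetical.

```python
def upsample_nearest(coarse, scale):
    """Enlarge a coarse map by an integer factor using nearest-neighbor copy."""
    return [[v for v in row for _ in range(scale)]
            for row in coarse for _ in range(scale)]


def deemphasize(fine_interest, coarse_interest, scale, threshold, weight=0.0):
    """Scale down fine-resolution interest where the coarse map was dull.

    Pixels whose coarse interest is below `threshold` are multiplied by
    `weight` (0.0 masks them out entirely); others are left unchanged.
    """
    prior = upsample_nearest(coarse_interest, scale)
    return [[f if p >= threshold else f * weight
             for f, p in zip(frow, prow)]
            for frow, prow in zip(fine_interest, prior)]


# Toy example: the left half was dull at coarse resolution.
coarse = [[1, 5]]
fine = [[4, 4, 4, 4], [4, 9, 4, 4]]
masked = deemphasize(fine, coarse, scale=2, threshold=3)
```

A weight between 0 and 1 would deemphasize rather than fully mask the low-interest areas, which may be preferable when the coarse segmentation is unreliable.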

Figure 4: Mosaic image of a three-by-four set of grayscale

sub-images acquired by the Cyborg Astrobiologist at the be-

ginning of its second expedition. The three most interesting

points were subsequently revisited by the camera in order to

acquire full-color higher-resolution images of these points-

of-interest. The colored points and rectangles represent the

points that the Cyborg Astrobiologist determined (on loca-

tion) to be most interesting; green is most interesting, blue

is second most interesting, and red is third most interesting.

The images were taken and processed in real-time between

1:25PM and 1:35PM local time on 11 June 2004 about 60

meters from some gypsum-bearing southward-facing cliffs

near the “El Campillo” lake of the Madrid southeast regional park outside of Rivas Vaciamadrid. See Figs. 5 & 7

for some details about the real-time image processing that

was done in order to determine the location of the interest

points in this figure.

5 DISCUSSION & CONCLUSIONS

Both human geologists on our team concur with the judgement of the Cyborg Astrobiologist software system that the two dark & wet spots on the cliff wall were the most interesting spots during the second mission. However, the two geologists also state that this judgement depends largely on the aims of the geological field trip; if the aim of the study is to search for hydrological features, then these two dark & wet spots are certainly interesting. One question that we have thus far left unstudied is: “What would the Cyborg Astrobiologist system have found interesting during the second mission if the two dark & wet spots had not been present?” It is possible that it would again have found some dark shadow particularly interesting, but with the improvements made to the system between the first and second missions, it is also possible that it would have found a different feature of the cliff wall more interesting.

5.1 Outlook

The NEO programming for this Cyborg Geologist project was initiated with the SONY Handycam in April 2002. The wearable computer arrived in June 2003, and the head mounted display arrived in November 2003. We now have a reliably functioning Cyborg Geologist system of human, hardware, and software, which is partly robotic with its Pan-Tilt camera mount. This robotic extension allows the camera to be pointed repeatedly, precisely & automatically in different directions.

Based upon the significantly improved performance of the Cyborg Astrobiologist system during the second mission to Rivas in June 2004, we conclude that the system is now sufficiently debugged to be able to produce studies of the utility of particular computer vision algorithms for geological deployment in the field (see footnote 8). We have outlined some possibilities for improvement of the system based upon the second field trip, particularly in the systems-level algorithms needed to drive the approach of the Cyborg or robotic system towards a complex geological outcrop more intelligently. These possible systems-level improvements include: hardware & software for intelligent use of the camera’s zoom lens, and a memory of the image segmentation performed at greater distance or lower magnification of the zoom lens.

ACKNOWLEDGEMENTS

P. McGuire, J. Ormö and E. Díaz Martínez would all like to thank the Ramon y Cajal Fellowship program of the Spanish Ministry of Education and Science. Many colleagues have made this project possible through their technical assistance, administrative assistance, or scientific conversations. We give special thanks to Kai Neuffer, Antonino Giaquinta, Fernando Camps Martínez, and Alain Lepinette Malvitte for their technical support. We are indebted to Gloria Gallego, Carmen González, Ramon Fernández, Coronel Angel Santamaria, and Juan Pérez Mercader for their administrative support. We acknowledge conversations with Virginia Souza-Egipsy, María Paz Zorzano Mier, Carmen Córdoba Jabonero, Josefina Torres Redondo, Víctor R. Ruiz, Irene Schneider, Carol Stoker, Paula Grunthaner, Maxwell D. Walter, Fernando Ayllón Quevedo, Javier Martín Soler, Jörg Walter, Claudia Noelker, Gunther Heidemann, Robert Rae, and Jonathan Lunine. The field work by J. Ormö was partially supported by grants from the Spanish Ministry of Education and Science (AYA2003-01203 and CGL2004-03215). The equipment used in this work was purchased by grants to our Center for Astrobiology from its sponsoring research organizations, CSIC and INTA.

8 NOTE IN PROOFS: After this paper was originally written, we did some tests at a second field site (in Guadalajara, Spain) with the same algorithm and the same parameter settings. Despite the change in character of the geological imagery from the first field site (in Rivas Vaciamadrid, discussed in this paper) to the second field site, the uncommon-mapping technique again performed rather well, agreeing with post-mission human-geologist assessment 68% of the time (with a 32% false positive rate and a 32% false negative rate); see (McGuire et al., 2005b) for more detail. This success rate is qualitatively comparable to the results from the first mission in Rivas. This is evidence that the system performs in a context-free, unbiased manner.

REFERENCES

Apostolopoulos, D., Wagner, M., Shamah, B., Pedersen, L., Shillcutt, K., and Whittaker, W. (2000). Technology and field demonstration of robotic search for Antarctic meteorites. International Journal of Robotics Research, 19(11):1015–1032.

Bogacz, R., Brown, M. W., and Giraud-Carrier, C. (1999). High capacity neural networks for familiarity discrimination. In Proceedings of the Ninth International Conference on Artificial Neural Networks (ICANN99), pages 773–776.

Crawford, J. and Tamppari, L. (2002). Mars Science

Laboratory – autonomy requirements analysis. In-

telligent Data Understanding Seminar, available on-

line at: http://is.arc.nasa.gov/IDU/slides/reports02/-

Crawford

Aut02c.pdf.

Crisp, J., Adler, M., et al. (2003). Mars Exploration Rover mission. Journal of Geophysical Research (Planets), 108(2):1.

Freixenet, J., Muñoz, X., Martí, J., and Lladó, X. (2004). Color texture segmentation by region-boundary cooperation. In Computer Vision – ECCV 2004, Eighth European Conference on Computer Vision, Proceedings, Part II, Lecture Notes in Computer Science. Prague, Czech Republic, volume 3022, pages 250–261. Springer. Ed.: T. Pajdla and J. Matas. (Also available in the CVonline archive.)

Goldberg, S., Maimone, M., and Matthies, L. (2002). Stereo vision and rover navigation software for planetary exploration. In 2002 IEEE Aerospace Conference Proceedings, pages 2025–2036.

Gulick, V. C., Hart, S. D., Shi, X., and Siegel, V. L. (2004). Developing an automated science analysis system for Mars surface exploration for MSL and beyond. In Lunar and Planetary Science Conference Abstracts, volume 35, page 2121.

Gulick, V. C., Morris, R. L., Bishop, J., Gazis, P., Alena, R., and Sierhuis, M. (2002). Geologist’s Field Assistant: developing image and spectral analyses algorithms for remote science exploration. In Lunar and Planetary Science Conference Abstracts, volume 33, page 1961.

Gulick, V. C., Morris, R. L., Ruzon, M. A., and Roush, T. L. (2001). Autonomous image analyses during the 1999 Marsokhod rover field test. Journal of Geophysical Research, 106:7745–7764.

Haddon, J. and Boyce, J. (1990). Image segmentation by unifying region and boundary information. IEEE Trans. Pattern Anal. Mach. Intell., 12(10):929–948.

Haralick, R., Shanmugam, K., and Dinstein, I. (1973). Texture features for image classification. IEEE SMC-3, 6:610–621.

Heidemann, G. (2004). Focus of attention from local color symmetries. IEEE Trans. Pattern Anal. Mach. Intell., 26(7):817–830.

McGuire, P., Ormö, J., Gómez-Elvira, J., Rodríguez-Manfredi, J., Sebastián-Martínez, E., Ritter, H., Oesker, M., Haschke, R., Ontrup, J., and Díaz-Martínez, E. (2005a). The Cyborg Astrobiologist: Algorithm development for autonomous planetary (sub)surface exploration. Astrobiology, 5(2):230, oral presentations. Special Issue: Abstracts of NAI’2005: Biennial Meeting of the NASA Astrobiology Institute, April 10-14, Boulder, Colorado.

McGuire, P. C., Díaz-Martínez, E., Ormö, J., Gómez-Elvira, J., Rodríguez-Manfredi, J., Sebastián-Martínez, E., Ritter, H., Haschke, R., Oesker, M., and Ontrup, J. (2005b). The Cyborg Astrobiologist: Scouting red beds for uncommon features with geological significance. International Journal of Astrobiology, 4 (in press), http://arxiv.org/abs/cs.CV/0505058.

McGuire, P. C., Ormö, J., Díaz-Martínez, E., Rodríguez-Manfredi, J., Gómez-Elvira, J., Ritter, H., Oesker, M., and Ontrup, J. (2004a). The Cyborg Astrobiologist: first field experience. International Journal of Astrobiology, 3(3):189–207, http://arxiv.org/abs/cs.CV/0410071.

McGuire, P. C., Rodríguez-Manfredi, J. A., et al. (2004b). Cyborg systems as platforms for computer-vision algorithm-development for astrobiology. In Proc. of the Third European Workshop on Exo-Astrobiology, 18-20 November 2003, Madrid, Spain, volume ESA SP-545, pages 141–144, http://arxiv.org/abs/cs.CV/0401004. Ed.: R. A. Harris and L. Ouwehand. Noordwijk, Netherlands: ESA Publications Division, ISBN 92-9092-856-5.

Nesnas, I., Maimone, M., and Das, H. (1999). Autonomous vision-based manipulation from a rover platform. In Proceedings of the CIRA Conference, Monterey, California.

Olson, C., Matthies, L., Schoppers, M., and Maimone, M. (2003). Rover navigation using stereo ego-motion. Robotics and Autonomous Systems, 43(4):215–229.

Pedersen, L. (2001). Autonomous characterization of unknown environments. In 2001 IEEE International Conference on Robotics and Automation, volume 1, pages 277–284.

Sebe, N., Tian, Q., Loupias, E., Lew, M., and Huang, T. (2003). Evaluation of salient points techniques. Image and Vision Computing, Special Issue on Machine Vision, 21:1087–1095.

Squyres, S. (2004). private communication.

Squyres, S., Arvidson, R., et al. (2004). The Spirit rover’s Athena science investigation at Gusev Crater, Mars. Science, 305:794–800.

Figure 5: These are the uncommon maps for the mosaic shown in Fig. 4, based on the region sizes determined by the image-segmentation algorithm shown in Fig. 7. Also shown is the interest map, i.e., the unweighted sum of the three uncommon maps. We blur the original interest map before determining the “most interesting” points. These “most interesting” points are then sent to the camera’s Pan/Tilt motor in order to acquire and save to disk three higher-resolution RGB color images of the small areas in the image around the interest points (Fig. 4). Green is the most interesting point, blue is the second most interesting, and red is the third most interesting.
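The pipeline described in this caption (sum the three uncommon maps, blur, then pick the most interesting points) can be illustrated with a minimal pure-Python sketch. Several details are assumptions made only for illustration: the source does not specify the blur kernel (a box blur is used here), nor how the three points are kept apart (a hypothetical `min_separation` in pixels is used), and all function names are invented for this example.

```python
def interest_map(uncommon_maps):
    """Unweighted pixel-wise sum of the uncommon maps (e.g. for H, S and I)."""
    rows, cols = len(uncommon_maps[0]), len(uncommon_maps[0][0])
    return [[sum(m[r][c] for m in uncommon_maps) for c in range(cols)]
            for r in range(rows)]


def box_blur(img, radius=1):
    """Mean filter over a (2*radius+1)^2 window, clipped at the borders.
    ASSUMPTION: the actual blur kernel is not given in the text."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [img[rr][cc]
                      for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                      for cc in range(max(0, c - radius), min(cols, c + radius + 1))]
            out[r][c] = sum(window) / len(window)
    return out


def top_points(img, n=3, min_separation=2):
    """Return up to n (row, col) points in decreasing order of interest.
    ASSUMPTION: points closer than min_separation pixels (Chebyshev
    distance) to an already chosen point are skipped."""
    candidates = sorted(((img[r][c], r, c)
                         for r in range(len(img))
                         for c in range(len(img[0]))),
                        key=lambda t: -t[0])
    chosen = []
    for _, r, c in candidates:
        if all(max(abs(r - pr), abs(c - pc)) >= min_separation
               for pr, pc in chosen):
            chosen.append((r, c))
        if len(chosen) == n:
            break
    return chosen


if __name__ == "__main__":
    hue = [[1, 0, 0], [0, 0, 0], [0, 0, 2]]
    sat = [[1, 0, 0], [0, 0, 0], [0, 0, 0]]
    inten = [[1, 0, 0], [0, 0, 0], [0, 0, 0]]
    blurred = box_blur(interest_map([hue, sat, inten]))
    print(top_points(blurred, n=2))
```

In the field system the selected points would then be converted to pan/tilt angles for the camera mount; that conversion depends on the camera geometry and is not sketched here.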

Figure 6: Map of the Cyborg Astrobiologist’s autonomous geological approach. The image mosaic that we show in Figures 4, 5 & 7 in this paper was acquired at the tripod position near the tree grove.

Figure 7: In the middle column, we show the three image-segmentation maps computed in real-time by the Cyborg Astrobiologist system, based upon the original Hue, Saturation & Intensity (H, S & I) mosaics in the left column and the derived 2D co-occurrence histograms shown in the right column. The wearable computer made this and all other computations for the original 3 × 4 mosaic (108 × 192 pixels, shown in Fig. 4) in about 2 minutes after the initial acquisition of the mosaic sub-images was completed. The colored regions in each of the three image-segmentation maps correspond to pixels & their neighbors in that map that have similar statistical properties in their two-point correlation values, as shown by the circles of corresponding colors in the 2D histograms in the column on the right. The RED-colored regions in the segmentation maps correspond to the mono-statistical regions with the largest area in this mosaic image; the RED regions are the least “uncommon” pixels in the mosaic. The BLUE-colored regions correspond to the mono-statistical regions with the 2nd largest area in this mosaic image; the BLUE regions are the 2nd least “uncommon” pixels in the mosaic. The same holds, in order, for PURPLE, GREEN, CYAN, YELLOW, WHITE, and ORANGE. The pixels in the BLACK regions have failed to be segmented by the segmentation algorithm.
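Two ingredients named in this caption, the 2D co-occurrence histogram and the ranking of segments by area, can be sketched as follows. This is a simplified illustration rather than the authors' implementation: the step that finds peaks in the histogram and turns them into segments is not shown, the neighbor offset is an assumed parameter, and the rule that the uncommon value equals the area rank (0 for the largest segment, and 0 for unsegmented pixels) is an assumption made for this example.

```python
from collections import Counter

UNSEGMENTED = None  # stands for the BLACK (unsegmented) pixels


def cooccurrence_histogram(img, offset=(0, 1)):
    """Count pairs (value at a pixel, value at its neighbor at `offset`).
    ASSUMPTION: a single fixed neighbor offset; the caption only says that
    two-point correlation values of pixels and their neighbors are used."""
    dr, dc = offset
    rows, cols = len(img), len(img[0])
    hist = Counter()
    for r in range(rows):
        for c in range(cols):
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols:
                hist[(img[r][c], img[rr][cc])] += 1
    return hist


def uncommon_map(labels):
    """Give each pixel the area rank of its segment: 0 for the largest
    segment (least uncommon), 1 for the 2nd largest, and so on.
    ASSUMPTION: rank is used directly as the uncommon value, and
    unsegmented pixels get 0."""
    areas = Counter(label for row in labels for label in row
                    if label is not UNSEGMENTED)
    rank = {label: i for i, (label, _) in enumerate(areas.most_common())}
    return [[0 if label is UNSEGMENTED else rank[label] for label in row]
            for row in labels]


if __name__ == "__main__":
    quantized = [[0, 0, 1],
                 [1, 1, 1]]
    print(cooccurrence_histogram(quantized))

    segments = [["red", "red", "red"],
                ["red", "blue", "blue"],
                ["purple", UNSEGMENTED, "red"]]
    for line in uncommon_map(segments):
        print(line)
```

With this ranking, the largest (RED) segment contributes nothing to the interest map, while pixels in small segments contribute the most, matching the ordering described in the caption.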
